Welcome to Semarchy xDM.

This guide contains information about using Semarchy to deploy and manage models and applications.

Preface

Overview

Using this guide, you will learn how to:

- Manage the lifecycle and versions of the logical models and applications developed in Semarchy Application Builder.

- Deploy these models and applications for run-time.

- Manage the execution of the deployed models and applications.

- Configure the data locations hosting these deployments.

Audience

Document Conventions

This document uses the following formatting conventions:

| Convention | Meaning |
|---|---|
| boldface | Boldface type indicates graphical user interface elements associated with an action, or a product-specific term or concept. |
| italic | Italic type indicates special emphasis or a placeholder variable that you need to provide. |
| monospace | Monospace type indicates a code example, text, or commands that you enter. |

Other Semarchy Resources

In addition to the product manuals, Semarchy provides other resources available on its web site: http://www.semarchy.com.

Obtaining Help

There are several ways to contact Semarchy Technical Support. You can call or email our global Technical Support Center (support@semarchy.com). For more information, see http://www.semarchy.com.

Feedback

We welcome your comments and suggestions on the quality and usefulness of this documentation.

If you find any error or have any suggestion for improvement, please mail support@semarchy.com and indicate the title of the documentation along with the chapter, section, and page number, if available. Please let us know if you want a reply.

Introduction to Semarchy xDM

Semarchy xDM is the Intelligent Data Hub platform for Master Data Management (MDM), Reference Data Management (RDM), Application Data Management (ADM), Data Quality, and Data Governance.

It provides all the features for data quality, data validation, data matching, de-duplication, data authoring, workflows, and more.

Semarchy xDM brings extreme agility for defining and implementing data management applications and releasing them to production. The platform can be used as the target deployment point for all the data in the enterprise or in conjunction with existing data hubs to contribute to data transparency and quality.

Its powerful and intuitive environment covers all use cases for setting up a successful data governance strategy.

Introduction to Application Management

Application management consists of:

- Managing models, which includes their lifecycle, versions, export and import.

- Managing model deployments into data locations.

- Managing and monitoring job executions.

- Managing the configuration of the data locations.

Application management tasks are performed in the Semarchy Application Builder, mainly using the Management perspective.

Introduction to the Semarchy Application Builder

Connecting

To access Semarchy Application Builder, you need a URL, a user name, and a password provided by your Semarchy xDM administrator.

To log in to the Semarchy Application Builder:

- Open your web browser and connect to the URL provided to you by your administrator, for example `http://<host>:<port>/semarchy/`, where `<host>` and `<port>` represent the name and port of the host running the Semarchy xDM application. The Login Form appears.

- Enter your user name and password.

- Click Log In. The Semarchy xDM Welcome page opens.

- Click the Application Builder button. The Semarchy xDM Application Builder opens on the Model Design view.

To log out of the Semarchy Application Builder:

- In the Semarchy Application Builder User Menu, select Log Out.

The Application Builder

The Semarchy Application Builder is the graphical interface used by Semarchy xDM model designers and managers. This user interface exposes information in panels called Views and Editors.

A given layout of views and editors is called a Perspective.

The following sections describe the components of the Application Builder user interface.

Perspectives

There are two perspectives in Semarchy Application Builder:

- Model Design: this perspective is used to edit or view a model edition.

- Management: this perspective is used to:

  - Create and manage data locations, and deploy model editions in these locations.

  - Manage model editions and branches.

  - Manage job executions.

You switch from one perspective to another by clicking the Design or Management button in the Application Builder header.

Tree Views

When a perspective opens, a tree view showing the objects you can work with in this perspective appears on the left-hand side of the screen.

In this tree view you can:

- Expand and collapse nodes to access child objects.

- Double-click a node to open the object’s editor.

- Right-click a node to access all possible operations with this object.

Outline

Certain perspectives include an Outline view that appears on the left-hand side of the screen.

This view shows the object currently opened in the editor (and all its child objects) in a tree view.

You can use the same expand, double-click, right-click actions in the outline as in the tree view.

Editors

An object currently being viewed or edited appears in an editor in the central part of the screen.

You can have multiple editors opened at the same time, each editor appearing with a different tab.

Editor Organization

Editors are organized as follows:

- The editor has a local toolbar used for editor-specific operations. For example, refreshing or saving the content of an editor is performed from the toolbar.

- The editor has a breadcrumb that allows navigating up in the hierarchy of objects.

- The editor has a sidebar which shows the various sections and child objects attached to an editor. You can click in this sidebar to jump to one of these sections.

- Properties in the editors are organized into groups, which can be expanded or collapsed.

Saving an Editor

When the object in an editor is modified, the editor tab is displayed with a star in the tab name. For example, Contact* indicates that the content of the Contact editor has been modified and needs to be saved.

To save an editor, either:

- Click the Save button in the editor toolbar.

- Use the CTRL+S key combination.

You can also use the Save All button in the tree view toolbar, or press SHIFT+CTRL+S to save all modified editors.

Closing an Editor

To close an editor:

- Click the Close (Cross) icon on the editor’s tab.

- Use the Close option in the editor’s context menu (right-click on the editor’s tab).

You can also use the Close All option in the editor's context menu (right-click on the editor's tab) to close all open editors.

Accelerating Editing with CamelCase

In the editors and dialogs in Semarchy Application Builder, the Auto Fill checkbox accelerates object creation and editing.

When this option is checked and the object name is entered using CamelCase, the object Label as well as the Physical Name is automatically generated.

For example, when creating an entity, if you type ProductPart in the name, the label is automatically filled in with Product Part and the physical name is set to PRODUCT_PART.
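The Auto Fill behavior can be approximated in a few lines of Python. This is a minimal sketch, not Semarchy's implementation. The function names are hypothetical, and the word-splitting rule (including how acronyms such as `HTTPServer` and digits are handled) is an assumption. Only the `ProductPart` case comes from this guide.

```python
import re

# Assumed splitting rule: a run of capitals followed by a capitalized word is an
# acronym; otherwise split before each capital letter; digit runs are separate words.
_WORD = re.compile(r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+")


def split_camel_case(name: str) -> list[str]:
    """Split a CamelCase name into words, e.g. 'ProductPart' -> ['Product', 'Part']."""
    return _WORD.findall(name)


def auto_fill(name: str) -> tuple[str, str]:
    """Return a (label, physical_name) pair derived from a CamelCase object name."""
    words = split_camel_case(name)
    label = " ".join(words)                             # e.g. 'Product Part'
    physical_name = "_".join(w.upper() for w in words)  # e.g. 'PRODUCT_PART'
    return label, physical_name


if __name__ == "__main__":
    print(auto_fill("ProductPart"))  # ('Product Part', 'PRODUCT_PART')
```

In the Application Builder, the generated label can still be edited afterwards. The sketch only shows how one CamelCase name can feed both derived values.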

Deleting Objects

The Semarchy xDM model is designed to maintain its consistency.

When deleting an object (for example using the Delete context menu action) that is referenced by other objects, an alert Unable to Delete Object appears.

This dialog indicates which references prevent the object deletion. Remove these references before deleting this object.

Working with Other Views

Other views (for example: Progress, Validation Report) appear in certain perspectives. These views are perspective-dependent.

You can open or re-open a view using the Show View… User Menu item.

User Preferences

User preferences are available to configure the Application Builder behavior.

Setting the Preferences

Use the Preferences item in the User Menu to open the Preferences dialog.

The following preferences are available in the Preferences dialog:

- General Preferences:

  - Exit Confirmation: Prompts the user to confirm before leaving the Application Builder.

  - Date Format: Format used to display date values in the Application Builder. This format uses Java's SimpleDateFormat patterns.

  - DateTime Format: Format used to display date and time values in the Application Builder. This format uses Java's SimpleDateFormat patterns.

  - Link Perspective to Active Editor: Select this option to automatically switch to the perspective related to an editor when selecting this editor.

Exporting and Importing User Preferences

Sharing preferences between users is performed using preferences import/export.

To export user preferences:

- Select the Export item in the User Menu. The Export wizard opens.

- Select Export Preferences in the Export Destination and then click Next.

- Click the Download Preferences File link to download the preferences to your file system.

- Click Finish to close the wizard.

To import user preferences:

- Select the Import item in the User Menu. The Import wizard opens.

- Select Import Preferences in the Import Source and then click Next.

- Click the Open button to select an export file.

- Click OK in the Import Preferences Dialog.

- Click Finish to close the wizard.

Importing preferences replaces all the current user's preferences with those stored in the preferences file.

Models Management

Semarchy xDM supports out-of-the-box metadata versioning.

When working with a model in the Semarchy Application Builder, the developer works on a Model Edition (version of the model).

Model management includes all the operations required to manage the versions of the models.

Introduction to Model Management

Model Editions

Model Changes are developed, managed and deployed as Model Editions. This version control mechanism allows you to freeze versions (called Editions) of a model at design-time and deploy them for run-time in a Data Location.

A data location always runs a given model edition. This means that this data location contains data organized according to the model structure in the given edition, and that golden data in this data location is processed and certified according to the rules of the given edition.

Model editions are identified by a version number. This version number format is `<branch>.<edition>`. The branch and edition numbers start at zero and are automatically incremented as you create new branches or editions.

The first edition of a model in the first branch has the version [0.0]. The fourth edition of the CustomerAndFinancialMDM model in the second branch has version number 1.3, and is typically referred to as CustomerAndFinancialMDM [1.3].
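To make the `<branch>.<edition>` convention concrete, the following minimal Python sketch parses a version such as `[1.3]` and describes it with one-based positions. The `EditionVersion` class is a hypothetical illustration, not part of any Semarchy API.

```python
from typing import NamedTuple


class EditionVersion(NamedTuple):
    """A model edition version in the <branch>.<edition> format (both zero-based)."""

    branch: int
    edition: int

    @classmethod
    def parse(cls, text: str) -> "EditionVersion":
        # Accept both "1.3" and "[1.3]".
        branch, edition = text.strip().strip("[]").split(".")
        return cls(int(branch), int(edition))

    def __str__(self) -> str:
        return f"[{self.branch}.{self.edition}]"

    def describe(self) -> str:
        # Numbers start at zero, so add one to get the human-friendly position.
        return f"edition {self.edition + 1} of branch {self.branch + 1}"


if __name__ == "__main__":
    version = EditionVersion.parse("[1.3]")
    print(f"CustomerAndFinancialMDM {version}")  # CustomerAndFinancialMDM [1.3]
    print(version.describe())                    # edition 4 of branch 2
```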

Open and Closed Model Editions

A Model Edition is, at a given point in time, either Open or Closed for editing.

Branching allows you to maintain two or more parallel Branches (lines) of model editions.

- An Open Model Edition can be modified, and is considered as being designed. When a milestone is reached and the design is considered complete, the model can be closed. When a model edition is closed, a new model edition is created and opened. This new model edition contains the same content as the closed edition.

- A Closed Model Edition can no longer be modified and cannot be reopened on the same branch. You can only edit this closed edition by reopening it on a different branch.

- When a model from a previously closed edition needs to be reopened for editing (for example for maintenance purposes), a new branch based on this old edition can be created and a first edition on this branch is opened. This edition contains the same content as the closed edition it originates from.

Actions on Model Editions

Model Editions support the following actions:

- Creating a New Model creates the first branch and first edition for the model.

- Closing and Creating a New Edition of the model freezes the model edition in its current state, and opens a new edition of the model for modification.

- Branching allows you to maintain several parallel branches of the model. You create a branch based on an existing closed model edition when you want to fork the project from this edition, or create a maintenance branch.

- Deployment, to install or update a model edition in a data location.

- Export and Import model editions, to transfer them between repositories.

These tasks are explained in detail in the next chapters of this guide.

Typical Model Lifecycle

A typical model lifecycle is described below.

- The project or model manager creates a new model and the first model edition in the first branch.

- Model and application designers edit the model metadata. They perform their design tasks, as explained in the Semarchy xDM Developer's Guide.

- When the designers reach a level of completion in their implementation, they deploy the model edition for testing, and afterwards deploy it again while pursuing their implementation and tests. Such actions are typically performed in a development data location. Sample data can be submitted to the data location for integration in the hub.

- When the first project milestone is reached, the project or model manager:

  - Closes the model edition and creates a new one.

  - Deploys the closed model edition, or exports it for deployment in a remote repository.

- The project can proceed to the next iteration (go back to the second step).

- When needed, the project or model manager creates a new branch starting from a closed edition. This may be needed, for example, when a feature or fix needs to be backported to a closed edition without taking all the changes made in later editions.

The following example shows the chronological evolution of a model through editions and branching:

- January: a new CustomerHub model is created with the first branch and the first edition. This is CustomerHub [0.0].

- March: The design of this model is complete. The CustomerHub [0.0] edition is closed and deployed. The new model edition automatically opened is CustomerHub [0.1].

- April: Minor fixes are completed on CustomerHub [0.1]. To deploy these to production, the model edition CustomerHub [0.1] is closed, deployed to production and a new edition CustomerHub [0.2] is created and is left open untouched for maintenance.

- May: A new project to extend the original hub starts. In order to develop the new project and maintain the hub deployed in production, a new branch with a first edition in this branch is created, based on CustomerHub [0.1] (closed). Two model editions are now open: CustomerHub [0.2], which will be used for maintenance, and CustomerHub [1.0], into which new developments are added.

- June: Maintenance fixes need to take place on the hub deployed in production. CustomerHub [0.2] is modified, closed and sent to production. A new edition is created: CustomerHub [0.3].

- August: The new project completes. CustomerHub [1.0] is now ready for release and is closed before shipping. A new edition CustomerHub [1.1] is created and opened.

The following schema illustrates the timeline for the edition and branches. Note that the changes in the two branches are totally decoupled. Stars indicate changes made in the model editions:

```text
Month    : January      March      April       May            June              August
Branch 0 : [0.0] -***-> [0.1] -*-> [0.2] -----------------*-> [0.3] ------------------>
Branch 1 :                +-----branching----> [1.0] ----**----**----****-----> [1.1] --->
```

At that stage, two editions on two different branches remain and are open: CustomerHub [1.1] and CustomerHub [0.3].
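The rules behind this timeline can be replayed with the minimal in-memory sketch below. Closing an edition freezes it and opens a copy, and branching starts a new branch at edition 0 from a closed edition. `ModelHistory`, `Edition` and their methods are hypothetical illustrations, not Semarchy APIs, and a plain dictionary stands in for the model metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Edition:
    branch: int
    number: int
    content: dict = field(default_factory=dict)  # stand-in for the model metadata
    closed: bool = False

    @property
    def version(self) -> str:
        return f"[{self.branch}.{self.number}]"


class ModelHistory:
    """Hypothetical model of the edition and branch rules described in this guide."""

    def __init__(self) -> None:
        # Creating a model creates the first branch with its first (open) edition.
        self.branches: list[list[Edition]] = [[Edition(0, 0)]]

    def latest(self, branch: int) -> Edition:
        return self.branches[branch][-1]

    def modify(self, branch: int, number: int, key: str, value: str) -> None:
        edition = self.branches[branch][number]
        if edition.closed:
            raise ValueError(f"{edition.version} is closed and cannot be modified")
        edition.content[key] = value

    def close_and_create_new_edition(self, branch: int) -> Edition:
        current = self.latest(branch)
        current.closed = True
        # The new open edition starts with the same content as the closed one.
        new = Edition(branch, current.number + 1, dict(current.content))
        self.branches[branch].append(new)
        return new

    def branch_from(self, branch: int, number: int) -> Edition:
        source = self.branches[branch][number]
        if not source.closed:
            raise ValueError("a branch can only start from a closed edition")
        new = Edition(len(self.branches), 0, dict(source.content))
        self.branches.append([new])
        return new

    def open_editions(self) -> list[str]:
        return [b[-1].version for b in self.branches if not b[-1].closed]


if __name__ == "__main__":
    hub = ModelHistory()                                 # January: CustomerHub [0.0]
    hub.modify(0, 0, "Customer", "v1")
    hub.close_and_create_new_edition(0)                  # March: [0.0] closed, [0.1] open
    hub.modify(0, 1, "Customer", "v1 + minor fixes")
    hub.close_and_create_new_edition(0)                  # April: [0.1] closed, [0.2] open
    hub.branch_from(0, 1)                                # May: [1.0] created from [0.1]
    hub.modify(0, 2, "Customer", "v1 + maintenance fix")
    hub.close_and_create_new_edition(0)                  # June: [0.2] closed, [0.3] open
    hub.modify(1, 0, "Contact", "new entity")
    hub.close_and_create_new_edition(1)                  # August: [1.0] closed, [1.1] open
    print(hub.open_editions())                           # ['[0.3]', '[1.1]']
```

Because each new edition and each new branch receives a copy of its source content, the maintenance fix made on [0.2] never reaches branch 1. This mirrors the decoupling of the two branches shown in the schema.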

Considerations for Models Editions Management

The following points should be taken into account when managing the model editions lifecycle:

- No Model Edition Deletion: It is not possible to delete old model editions. The entire history of the project is always preserved.

- Use Production Data Locations: Although deploying open model editions is a useful feature in development for quickly updating a model edition, it is not recommended to perform such updates on data locations that host production data, and it is not recommended to use development data locations for production. The best practice is to use Production Data Locations, which only allow deploying closed model editions, for production data.

- Import/Export for Remote Deployment: It is possible to export and import models from both deployment and development repositories. Importing a model edition is possible in a Deployment Repository if this edition is closed.

- Avoid Skipping Editions: When importing successive model editions, it is not recommended to skip intermediate editions, as it is not possible to import them at a later time. For example, if you import edition 0.1 of a model, then import edition 0.4, the intermediate editions (0.2 and 0.3) can no longer be imported in this repository.
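A pre-import check along these lines can flag skipped editions before they become unimportable. This is a minimal sketch: `check_import` is a hypothetical helper. It assumes, as the example above suggests, that within a branch a repository no longer accepts editions older than the latest one it imported. When nothing from the branch has been imported yet, it reports no skipped editions.

```python
def parse_version(version: str) -> tuple[int, int]:
    """Parse '0.4' or '[0.4]' into (branch, edition)."""
    branch, edition = version.strip("[]").split(".")
    return int(branch), int(edition)


def check_import(imported: list[str], candidate: str) -> tuple[bool, list[str]]:
    """Return (importable, skipped_editions) for importing `candidate`.

    Assumption: within a branch, editions older than the latest imported one
    can no longer be imported.
    """
    branch, number = parse_version(candidate)
    same_branch = [parse_version(v)[1] for v in imported if parse_version(v)[0] == branch]
    if not same_branch:
        return True, []
    latest = max(same_branch)
    if number < latest:
        return False, []
    # Editions strictly between the latest import and the candidate would be skipped.
    skipped = [f"{branch}.{n}" for n in range(latest + 1, number)]
    return True, skipped


if __name__ == "__main__":
    print(check_import(["0.1"], "0.4"))         # (True, ['0.2', '0.3'])
    print(check_import(["0.1", "0.4"], "0.2"))  # (False, [])
```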

Detailed Model Lifecycle

This section describes the detailed model development and deployment lifecycle.

Initial Setup and Deployment in Development

The following steps are required when creating a new model in a development environment.

- Model managers or designers create a new model. This operation also creates the first edition of the model.

- Designers create the first iteration of the model, including the logical model, the certification process rules and the applications.

- Designers run a validation when the model is stable and ready for the first tests.

- Model managers or designers create a development data location, using the model edition, to deploy and test the model.

The model is deployed and ready for testing. Integration specialists can load data into the data location and use the generated data management applications to view and manage the data.

Making Changes in Development

After the first development round, designers will repeatedly make changes to the model edition and test them in the development environment.

To test these changes in the development data location:

- Designers run a model validation to make sure that the model is valid.

- Designers or model managers deploy the model edition again, replacing the existing model edition by an updated one (with the same version number).

The updated model is immediately ready for testing. After the update:

- Integration specialists should consider running the data loads for the updated jobs to reprocess the incoming data as needed.

- Application testers should make sure to click the Refresh option in the application’s user menu (in the upper right corner of the application) to force a full refresh of the run-time application.

Releasing the Model

When the model is complete and tested, it is ready for release.

To release a model:

- Designers or model managers close the model edition. This operation freezes the current model edition and opens a new one.

- Model managers deploy the closed model edition using one of the following methods:

  - Deploy to the same repository: Deploy the closed model edition into a production data location attached to the same repository.

  - Deploy to a different repository: Export this model edition, then import and deploy it in a remote repository.

Developing Iteratively after a Release

When you close a model edition, a new model edition (for example, with version number [0.1]) is automatically created.

You can proceed with your next project iteration, starting with this new model edition:

- Model designers make changes to this model edition in development until the next project iteration is ready for release.

- When ready, model managers release this model edition.

If fixes are required on a previously released model edition, model managers can create a branch from this old model edition, modify it, then release it.

Working with Model Editions and Branches

Creating a New Model

Creating a new model creates the model with the first model branch and model edition.

To create a new model:

- Open the Model Design view by clicking the Design button in the Application Builder header.

- If you are connected to a model edition, click the Switch Model button to close the connected model edition.

- In the Model Design view, click the New Model… link. The Create New Model wizard opens.

- In the Create New Model wizard, check the Auto Fill option and then enter the following values:

  - Name: Internal name of the object.

  - Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

- In the Description field, optionally enter a description for the Model.

- Click Finish to close the wizard. The new model is created, and opened in the Model Design view.

Closing and Creating a New Model Edition

This operation closes the latest open model edition in a branch and opens a new one. The closed model edition is frozen and can no longer be edited, but can be deployed to production environments.

To close and create a new edition:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to close.

- Right-click the latest model edition of the branch (indicated as open) and select Close and Create New Edition.

- Click OK to confirm closing the model edition.

- In the Enter a comment for this new model edition dialog, enter a comment for the new model edition. This comment should explain why this new edition was created.

- Click OK. The model is validated, then a new model edition is created and opened in the Model Design view.

Branching a Model Edition

Branching a model edition enables restarting and modifying a closed edition of a model. Branching creates a new branch based on a given edition, and opens a first edition of this branch.

A first branch, named `<model_name>_root`, is created with the first model edition.

To create a new branch:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to branch from.

- Right-click the closed edition from which you want to branch and select Create Model Branch From this Edition. The Create New Model Branch wizard opens.

- In the Create New Model Branch wizard, check the Auto Fill option and then enter the following values:

  - Name: Internal name of the object.

  - Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

- In the Description field, optionally enter a description for the Model Branch.

- Click Finish to close the wizard.

- In the Model Branch Created dialog, click Yes to open the first edition of this new branch.

- The newly created edition opens in the Model Design view.

Target Technology

A model is designed for a given database Target Technology (Oracle, PostgreSQL or SQL Server). Although most of the model content is technology-agnostic, the artifacts generated in the data location schema, as well as the certification process, will use capabilities specific to that technology.

When you create a model, it is automatically configured for the technology of the repository into which it is created. When you design the model, some of the capabilities, for example, the database functions available in SemQL, will depend on that technology.

You can configure the Target Technology value in the Model editor.

Make sure to check the model’s target technology when you start working with a model, or when you import a model from another repository. If you change this technology later, make sure to validate the model in order to have a list of possible issues due to the technology change.

Model Localization

When designing a model, labels, descriptions and other user-facing text strings are entered to provide a user-friendly experience. These strings are natively externalized in Semarchy xDM, and can be translated (localized) into any language.

A user connecting to an application created with Semarchy xDM sees these strings (labels of the entities, attributes, lists of values, etc.) translated in the locale of their web browser if such a translation is available. If no translation is available in that locale for a given text, the default string (for example, the label or description specified in the model) is used. These default strings are the base translation.

Translation Bundles

String translation is performed using Translation Bundles, attached to model editions. A translation bundle is a properties file that contains a list of key/value pairs corresponding to the strings localized in a given locale. The translation bundle file is named `translations_<locale>.properties`, where `<locale>` is the locale of the translation.

The following example is a sample of a translation bundle file for the English language (translations_en.properties). In this file, the label for the Employee entity is the string Staff Member, and its description is A person who works for our company.

```properties
...
Entity.Employee.Label=Staff Member
Entity.Employee.Description=A person who works for our company.
Attribute.Employee.FirstName.Label=First Name
Attribute.Employee.FirstName.Description=First name of the employee
Attribute.Employee.Picture.Label=<New Key TODO>
...
```
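A few lines of Python are enough to find the keys in such a file that still need a translation. This minimal sketch handles only simple `key=value` lines. It ignores the escapes, line continuations and `:` separators that full Java properties files allow, and the function names are hypothetical. It treats a value equal to the `<New Key TODO>` tag, or an empty value, as untranslated.

```python
def parse_bundle(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    entries: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        entries[key.strip()] = value.strip()
    return entries


def untranslated_keys(entries: dict[str, str], tag: str = "<New Key TODO>") -> list[str]:
    """Keys whose value is still the placeholder tag or empty."""
    return [key for key, value in entries.items() if value in (tag, "")]


SAMPLE = """\
Entity.Employee.Label=Staff Member
Entity.Employee.Description=A person who works for our company.
Attribute.Employee.FirstName.Label=First Name
Attribute.Employee.FirstName.Description=First name of the employee
Attribute.Employee.Picture.Label=<New Key TODO>
"""

if __name__ == "__main__":
    # For a real file, read it with pathlib:
    # Path("translations_en.properties").read_text(encoding="utf-8")
    print(untranslated_keys(parse_bundle(SAMPLE)))  # ['Attribute.Employee.Picture.Label']
```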

Translating a Model

To translate a model:

- The translation bundles are exported for the language(s) requiring translation in a single zip file.

- Each translation bundle is translated by a translator using their translation tooling.

- The translated bundles are re-imported into the model edition (either each file at a time, or as a single zip file).

To export translation bundles:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to localize.

- Right-click the model edition and then select Export Translation Bundles…. The Export Translation Bundles wizard opens.

- Select the languages you want to translate.

- Select Export Base Bundle if you also want to export the base bundle for reference. The base bundle contains the default strings, and cannot be translated.

- Select the Encoding for the exported bundles. Note that the encoding should be UTF-8 unless the language you want to translate or the translation tooling has other encoding requirements.

- In Values to Export, select the export type:

  - All exports all the keys with their current translated value. If a key is not translated yet, the value exported is the one specified by the Default Values option.

  - New keys only exports only the keys not translated yet.

  - All except removed keys exports all available keys, excluding those with no corresponding object in the model. For example, the key for the description of an attribute that was deleted from a previous model edition is not exported.

- In Default Values, select the value to set for new keys (keys with no translation in the language):

  - Use values for base bundle sets the value to the base bundle value.

  - Use the defined tag sets the value to the tag specified in the field to the right of the selection (defaults to `<New Key TODO>`).

  - Leave Empty sets the value to an empty string.

- Click OK to download the translation bundles as a zip file, and then click Close to close the wizard.
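The combinations of Values to Export and Default Values can be summarized as a small function. This sketch shows how the options described above combine. It is not Semarchy's export code, and the enum and function names are hypothetical. It assumes that the base bundle holds exactly the keys of the objects in the current model edition, so a translated key absent from the base bundle is a removed key. The `Badge` attribute in the sample data is a hypothetical removed attribute.

```python
from enum import Enum


class ValuesToExport(Enum):
    ALL = "All"
    NEW_KEYS_ONLY = "New keys only"
    ALL_EXCEPT_REMOVED = "All except removed keys"


class DefaultValues(Enum):
    BASE = "Use values for base bundle"
    TAG = "Use the defined tag"
    EMPTY = "Leave Empty"


def export_bundle(base: dict[str, str], translated: dict[str, str],
                  values: ValuesToExport, default: DefaultValues,
                  tag: str = "<New Key TODO>") -> dict[str, str]:
    """Compute the key/value pairs exported for one locale."""

    def default_for(key: str) -> str:
        # Value used for a key that has no translation in this locale yet.
        if default is DefaultValues.BASE:
            return base[key]
        if default is DefaultValues.TAG:
            return tag
        return ""

    if values is ValuesToExport.NEW_KEYS_ONLY:
        return {k: default_for(k) for k in base if k not in translated}

    # Keys of objects in the current model: translated value, or the default.
    result = {k: translated[k] if k in translated else default_for(k) for k in base}
    if values is ValuesToExport.ALL:
        # "All" also keeps translations whose objects were removed from the model.
        result.update({k: v for k, v in translated.items() if k not in base})
    return result


BASE = {"Entity.Employee.Label": "Employee", "Attribute.Employee.Picture.Label": "Picture"}
TRANSLATED = {"Entity.Employee.Label": "Staff Member", "Attribute.Employee.Badge.Label": "Badge"}

if __name__ == "__main__":
    print(export_bundle(BASE, TRANSLATED, ValuesToExport.NEW_KEYS_ONLY, DefaultValues.BASE))
    # {'Attribute.Employee.Picture.Label': 'Picture'}
```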

To import translation bundles:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to localize.

- Right-click the model edition and then select Import Translation Bundles…. The Import Translation Bundles wizard opens.

- Click the Open button and select the translation file to import. This file should be either a properties file named `translations_<locale>.properties` or a zip file containing several of these properties files.

- In the Language to Import table, select the language translations you want to import.

- Select the Encoding for the import. Note that this encoding should correspond to the encoding of the properties files you import.

- Select Cleanup Removed Keys During Import if you want to remove the translations for keys that are no longer used in the model.

- Click Finish to run the import.

The translations for the model edition in the selected languages are updated with those contained in the translation bundles. If the Cleanup Removed Keys During Import was selected, translations in these languages no longer used in the model are removed.
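The effect of an import on the stored translations can be sketched as a merge. This minimal sketch is an assumption about the behavior described above, not Semarchy's code. Imported values overwrite existing ones for the selected languages only, and the optional cleanup keeps only keys that still exist in the model. `import_bundles` and its parameters are hypothetical.

```python
Translations = dict[str, dict[str, str]]  # locale -> {key: translated value}


def import_bundles(current: Translations, bundles: Translations, selected: set[str],
                   model_keys: set[str], cleanup_removed_keys: bool = False) -> Translations:
    """Return updated translations; `current` is left unchanged."""
    result = {locale: dict(values) for locale, values in current.items()}
    for locale in sorted(selected.intersection(bundles)):
        merged = result.setdefault(locale, {})
        merged.update(bundles[locale])  # imported values replace existing ones
        if cleanup_removed_keys:
            # Drop translations for keys no longer used by the model.
            result[locale] = {k: v for k, v in merged.items() if k in model_keys}
    return result


EXISTING = {"fr": {"Entity.Employee.Label": "Personnel", "Attribute.Employee.Badge.Label": "Badge"}}
BUNDLES = {
    "fr": {"Entity.Employee.Label": "Membre du personnel"},
    "de": {"Entity.Employee.Label": "Mitarbeiter"},
}

if __name__ == "__main__":
    print(import_bundles(EXISTING, BUNDLES, {"fr"}, {"Entity.Employee.Label"},
                         cleanup_removed_keys=True))
    # {'fr': {'Entity.Employee.Label': 'Membre du personnel'}}
```

Only French is selected in the usage example, so the German bundle is ignored even though the file contains it.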

Translation and Model Edition Lifecycles

The lifecycle of the translations is entirely decoupled from the model edition and deployment lifecycle:

- It is possible to modify the translations of open or closed model editions, including deployed model editions in production data locations.

- Translation changes on deployed model editions are taken into account dynamically when a user accesses an application defined in this model edition.

Deployment

Deployment is the process of installing a model, designed with its certification process, in a run-time environment (for production or development).

Introduction to Deployment

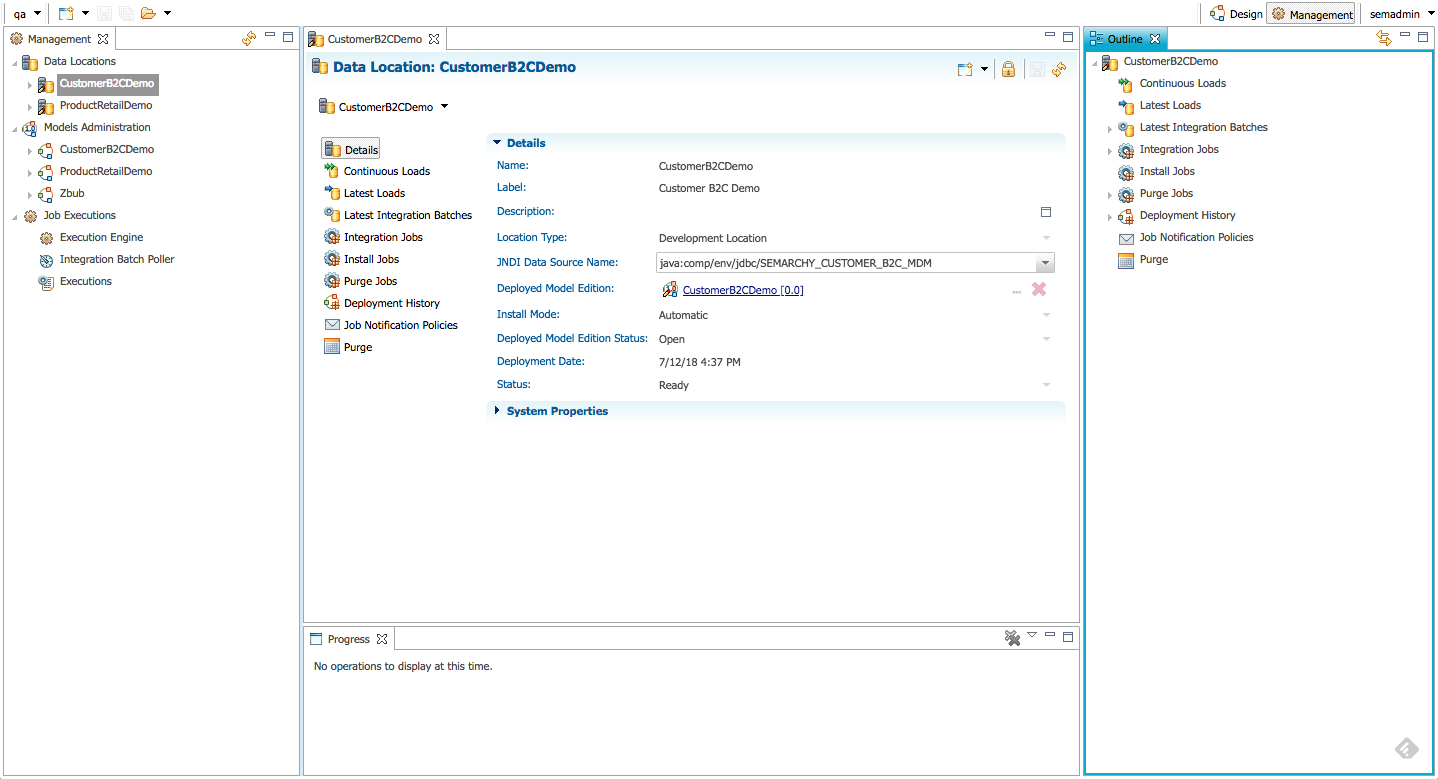

Deployment consists of deploying a Model Edition in a Data Location (a database schema accessed via a JDBC datasource).

Once this model edition is deployed, it is possible to load, access and manage data in the data location using the applications defined in the model.

In this process, the following components are involved:

- A Data Location is a database schema into which successive Model Editions will be deployed. This data location is declared in Semarchy xDM, and uses a JDBC datasource defined in the application server.

- In a data location, a Deployed Model Edition is a model version deployed at a given time in a data location. As a model evolves over time, for example to include new entities or functional areas, new model editions are created then deployed. The Deployment History tracks the successive model editions deployed in the data location.

In the deployment process, you can maintain as many data locations as you want in Semarchy xDM, but a data location is always attached to one repository. You can deploy successive model editions into a data location, but only the latest model edition deployed is active in the data location.

Data Location Types

There are two types of data locations. The type is selected when the data location is created and cannot be changed afterwards.

The data location types are:

- Development Data Location: A data location of this type supports deploying open or closed model editions. This type of data location is suitable for testing models in development and quality assurance environments.

- Production Data Location: A data location of this type supports deploying only closed model editions. This type of data location is suitable for deploying data hubs in production environments.
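The deployment rule for each type can be expressed as a short check, along with the rule that only the latest deployed edition is active. This is a minimal sketch with hypothetical names (`DataLocation`, `deploy`), not a Semarchy API. The frozen dataclass mirrors the fact that the location type cannot be changed after creation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class LocationType(Enum):
    DEVELOPMENT = "Development Data Location"
    PRODUCTION = "Production Data Location"


@dataclass(frozen=True)  # frozen: the type cannot be reassigned after creation
class DataLocation:
    name: str
    location_type: LocationType
    history: list[str] = field(default_factory=list)  # deployment history

    def deploy(self, version: str, closed: bool) -> None:
        # Production locations accept only closed editions; development accepts both.
        if self.location_type is LocationType.PRODUCTION and not closed:
            raise ValueError(f"cannot deploy open edition {version} to {self.name}")
        self.history.append(version)

    @property
    def active_edition(self) -> Optional[str]:
        # Only the latest deployed edition is active in the data location.
        return self.history[-1] if self.history else None


if __name__ == "__main__":
    dev = DataLocation("CustomerHubDev", LocationType.DEVELOPMENT)
    dev.deploy("[0.1]", closed=False)  # allowed: open edition in development
    prod = DataLocation("CustomerHubProd", LocationType.PRODUCTION)
    prod.deploy("[0.0]", closed=True)  # allowed: closed edition in production
    print(dev.active_edition, prod.active_edition)  # [0.1] [0.0]
```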

Data Location Contents

A Data Location contains the hub data, stored in the schema accessed using the data location’s datasource. This schema contains database tables and other objects generated from the model edition.

The data location also refers to three types of jobs (stored in the repository):

- Installation Jobs: The jobs for creating or modifying, in a non-destructive way, the data structures in the schema.

- Integration Jobs: The jobs for certifying data in these data structures, according to the model job definitions.

- Purge Jobs: The jobs for purging the logs and data history according to the retention policies.

Creating a Data Location

To create a new data location:

- In the Management view, right-click the Data Locations node and select New Data Location. The Create New Data Location wizard opens.

- In the Create New Data Location wizard, check the Auto Fill option and then enter the following values:

  - Name: Internal name of the object.

  - Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

  - JNDI Datasource Name: Select the JDBC datasource pointing to the schema that will host the data location.

- In the Description field, optionally enter a description for the Data Location.

- Select the Location Type for this data location.

- Select the Deployed Model Edition: This model edition is the first one deployed in the data location.

- Click Finish to close the wizard. The data location is created and the first model edition is deployed.

To delete a data location:

- In the Management view, expand the Data Locations node, right-click the data location and select Delete. The Delete Data Location wizard opens. By default, this wizard only deletes the data location definition in Semarchy xDM; deleting the data stored in the data location is optional.

- If you do not want to delete all the data in the data location schema, click Finish. The data location is deleted but the data is preserved.

- If you want to delete all the data in the data location schema:

- Select the Drop the content of the database schema option to delete the content of the schema. Note that with this option, you choose to delete all the data stored in the hub. This operation cannot be undone.

- Click Next. The wizard lists the objects that will be dropped from the schema.

- In the next wizard step, enter DROP to confirm the choice, and then click Finish. The data location as well as the schema content are deleted.

Deploying a Model Edition

After the initial model edition is deployed, you can deploy other model editions. The same mechanism is used, for example, to:

- Update the deployed model edition with the latest changes performed in an open model edition.

- Deploy a new closed model edition to a production or test environment.

- Revert a deployed model edition to a previous edition.

To deploy a model edition:

- In the Management view, expand the Data Locations node, right-click the data location node and select Deploy Model Edition….

- If you have unsaved editors, select those to save when prompted.

- In the Deploy Model Edition wizard, select the model edition you want to deploy in the Deployed Model Edition field.

- Leave the Generate Job Definition option checked to generate new integration jobs.

- Click Next. The changes to perform on the data location, to support this new model edition, are computed. A second page shows the SQL script to run on the schema to deploy this model edition.

- Click Finish to run the script and close the wizard.

The deployment first deploys the jobs and then runs the SQL code to create or modify the database objects. You can follow this second operation in the Console view at the bottom of the Application Builder.

The new model edition is deployed and the previous model deployment appears under the Deployment History node in the data location.

You may also leave the Generate Job Definition option unchecked when you know that the new and old job definitions are similar and you want to preserve the existing job logs.

Semarchy always ignores objects named with the USR_ prefix.

After multiple deployments, you may decide to remove old elements from the Deployment History.

To remove historized deployments:

- In the Management view, expand the Deployment History node under the data location.

- Select one or many historized deployments (hold Shift for multiple selection).

- Right-click and select Delete.

The selected historized deployments are deleted.
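The following Python sketch shows how the active deployment relates to the Deployment History. It assumes a simplified model in which a data location holds one active model edition and a list of previous deployments. The DataLocation class, its methods, and the edition names are hypothetical and do not correspond to a Semarchy API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataLocation:
    """Hypothetical sketch: one active deployment plus a deployment history."""
    name: str
    active_edition: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def deploy(self, edition: str) -> None:
        # The previously active edition moves to the history;
        # only the latest deployed edition stays active.
        if self.active_edition is not None:
            self.history.append(self.active_edition)
        self.active_edition = edition

    def remove_history(self, editions: List[str]) -> None:
        # Deleting historized deployments does not affect the active edition.
        self.history = [e for e in self.history if e not in editions]


loc = DataLocation("CustomerHub")
loc.deploy("CustomerModel 0.1")
loc.deploy("CustomerModel 0.2")
loc.deploy("CustomerModel 0.1")  # reverting is modeled as just another deployment
print(loc.active_edition)        # CustomerModel 0.1
print(loc.history)               # ['CustomerModel 0.1', 'CustomerModel 0.2']
```

Reverting to a previous edition is modeled as an ordinary deployment, which matches the single deployment mechanism used for updates, new versions, and rollbacks.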

Advanced Deployment Techniques

Moving Models at Design-Time

At design-time, it is possible to move models from one repository to another design repository using Export/Import:

- Export is allowed from a design repository, but also from a deployment repository.

- Import is possible in a design repository, either:

- to create a new model from the import

- or to overwrite an existing open model edition.

Exporting a Model Edition

Export a model edition to download an XML file which may be imported into another repository.

To export a model edition:

- In the Management view of the Management perspective, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to export.

- Select the model edition you want to export, right-click and select Export Model Edition….

- In the Model Edition Export dialog, select an Encoding for the export file.

- Click OK to download the export file on your local file system.

- Click Close.

Importing to a New Model

To import and create a new model:

- Open the Model Design view by clicking the Design button in the Application Builder header.

- If you are connected to a model edition, click the Switch Model button to close the connected model edition.

- In the Model Design view, click New Model from import... The Import to a New Model wizard opens.

- Click the Open button and select the export file.

- Click Finish to perform the import. The newly imported model opens in the Model Design view.

Importing on an Existing Model

To import and replace an existing model:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to replace.

- Select the open model edition you want to replace, right-click and select Import Replace…. The Import-Replace Model Edition wizard opens.

- Click the Open button and select the export file.

- Click Finish to perform the import.

- Click OK to confirm the deletion of the existing model.

The existing model edition is replaced by the content of the export file.

Deploying to a Remote Location

Frequently, the deployment environment is separated from the development environment. For example, the development/QA and production sites are located on different networks or locations. In such cases, it is necessary to use export and import to transfer the model edition before performing the deployment in production.

A remote deployment consists of moving a closed model edition:

- From a design repository or a deployment repository used for Testing/UAT purposes;

- To a deployment repository.

Remote Deployment Architecture

In this configuration, two repositories are created instead of one:

- A Design repository for the development and QA site, with Development data locations attached to this repository.

- A Deployment repository for the production site. Production data locations are attached to this repository.

The process for deploying a model edition in this configuration is given below:

- The model edition is closed in the design repository.

- The closed model edition is exported from the design repository to an export file.

- The closed model edition is imported from the export file into the deployment repository.

- The closed model edition is deployed from the deployment repository into a production data location.

Exporting a Model Edition

To export a model edition:

- In the Management view, expand the Model Administration node, then expand the model and the model branch containing the edition that you want to export.

- Select the closed model edition you want to export, right-click and select Export Model Edition.

- In the Model Edition Export dialog, select an Encoding for the export file.

- Click OK to download the export file on your local file system.

- Click Close.

Importing a Model Edition in a Deployment Repository

To import a model edition in a deployment repository:

- Open the Model Design perspective.

- Select the Import Model Edition link. The Import Model Editions wizard opens.

- Click the Open button and select the export file.

- Click Next.

- Review the content of the Import Preview page and then click Finish.

Elements not Exported with the Model Edition

When exporting then importing a model to a different repository, note that some elements are not included in the model and need to be reconfigured in the remote environment. These elements are listed below.

Elements used by the model and applications:

- Applications Common Configuration

- Image Libraries

- Installed Plug-ins

- Role definitions

- Variable Value Providers

Elements not used by the model, but required for operations:

- Notification Servers

- Notification Policies

- Continuous Loads

- Purge Schedules

- Batch Poller Configuration

Data Location Status

A data location status is:

- Ready: A data location in this status can be accessed in read/write mode, accepts incoming batches and processes its current batches.

- Maintenance: A data location in this status cannot be accessed. It does not accept incoming batches but completes its current batches. New loads cannot be initialized and existing loads cannot be submitted. Continuous loads stop processing incoming loads and keep them on hold.

When moving a data location to a Maintenance status, the currently processed batches continue until completion. Loads submitted after the data location is moved to Maintenance will fail. They can be kept open and submitted later, when the data location is restored to a ready status.
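The following minimal Python sketch shows how the status affects load submission. It assumes a simplified model in which submitting a load to a data location in Maintenance raises an error and the load remains open for a later retry. Status, LoadRejected, and submit_load are hypothetical names and are not part of Semarchy xDM.

```python
from enum import Enum
from typing import List


class Status(Enum):
    READY = "Ready"
    MAINTENANCE = "Maintenance"


class LoadRejected(Exception):
    """Raised when a load is submitted while the data location is in Maintenance."""


def submit_load(status: Status, load_id: int, submitted: List[int]) -> None:
    """Record the load as submitted only if the data location is Ready."""
    if status is Status.MAINTENANCE:
        # The submission fails; the load itself stays open and can be retried later.
        raise LoadRejected(f"load {load_id} rejected: data location in Maintenance")
    submitted.append(load_id)


submitted: List[int] = []
open_loads = [101, 102]  # hypothetical load IDs kept open during maintenance
for load_id in list(open_loads):
    try:
        submit_load(Status.MAINTENANCE, load_id, submitted)
        open_loads.remove(load_id)
    except LoadRejected:
        pass  # keep the load open

# Once the data location is back to Ready, submit the loads that were kept open.
for load_id in list(open_loads):
    submit_load(Status.READY, load_id, submitted)
    open_loads.remove(load_id)

print(submitted)  # [101, 102]
```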

Changing a Data Location Status

To set a data location status to maintenance:

- In the Management view, expand the Data Locations node, right-click the data location and select Set Status to Maintenance.

- Click OK in the confirm dialog.

The data location is moved to Maintenance mode.

To set a data location status to ready:

- In the Management view, expand the Data Locations node, right-click the data location and select Set Status to Ready.

The data location is moved to a ready state.

Using the Maintenance Mode

The Maintenance status can be used to perform maintenance tasks on the data locations.

For example, if you want to move the data location to a model edition with data structure changes that mandate manual DML commands to be issued on the hub tables, you may perform the following sequence:

- Move the data location to Maintenance mode.

- Let the currently running batches complete. No batch can be submitted to this data location.

- Deploy the new model edition.

- Perform your DML commands.

- Move the data location from Maintenance to the Ready status. Batches can now be submitted to this data location.

Using this sequence, you prevent batches from being submitted while the hub is in Maintenance.

The data location is automatically set to Maintenance when a model edition is deployed, and is then automatically reverted to the Ready status.
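The manual maintenance sequence can be wrapped so that the data location returns to Ready only after the deployment and DML steps succeed. The following Python sketch uses a hypothetical DataLocationStub in place of the real data location; it is not a Semarchy API. In practice, each step is performed from the Management view. The data location deliberately stays in Maintenance if a step raises an error, so that no batch is submitted against partially modified tables.

```python
from contextlib import contextmanager
from typing import Iterator, List


class DataLocationStub:
    """Hypothetical stand-in for a data location, used only for illustration."""

    def __init__(self) -> None:
        self.status = "READY"
        self.running_batches = 2
        self.steps: List[str] = []

    def wait_for_running_batches(self) -> None:
        # Simulates letting the currently running batches complete.
        self.running_batches = 0
        self.steps.append("running batches completed")


@contextmanager
def maintenance(location: DataLocationStub) -> Iterator[DataLocationStub]:
    location.status = "MAINTENANCE"  # new batches can no longer be submitted
    location.wait_for_running_batches()
    yield location
    # Reached only if the body did not raise: restore the Ready status.
    location.status = "READY"


loc = DataLocationStub()
with maintenance(loc):
    loc.steps.append("deploy new model edition")
    loc.steps.append("run manual DML commands")
print(loc.status)  # READY
```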

Managing Execution

An Integration Job is a job executed by Semarchy xDM to integrate and certify source data into golden records. This job is generated from the rules (enrichment, validation, etc.) defined in the model and deployed with the model edition.

The Execution Engine processes the integration jobs submitted to the Integration Batch Poller when new data or data changes are published to the data location. The engine also processes maintenance jobs such as the Deployment Jobs and Purge Jobs.

Understanding Jobs, Queues and Logs

Jobs are processing units that run in the execution engine. There are three main types of jobs running in the execution engine:

- Integration Jobs that process incoming batches to perform golden data certification.

- Deployment Jobs that deploy new model editions in data locations.

- Purge Jobs that purge the logs and data history according to the retention policies.

Jobs are processed in the engine’s Queues. Queues work in First-In-First-Out (FIFO) mode. When a job runs in the queue, the next jobs are queued and wait for their turn to run. To run two jobs in parallel, it is necessary to distribute them into different queues.

Queues are grouped into Clusters. There is one cluster per Data Location, named after the data location.
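The following minimal Python sketch illustrates the FIFO behavior of queues and why running jobs in parallel requires separate queues. The Queue class, the run_round function, and the job, queue, and cluster names are hypothetical. The sketch simply assumes that each queue starts at most one job per round.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class Queue:
    """Hypothetical FIFO job queue: at most one job runs at a time."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.jobs: Deque[str] = deque()

    def submit(self, job: str) -> None:
        self.jobs.append(job)

    def next_job(self) -> Optional[str]:
        # First in, first out: the oldest queued job runs next.
        return self.jobs.popleft() if self.jobs else None


def run_round(queues: List[Queue]) -> Dict[str, Optional[str]]:
    """One scheduling round: each queue starts its next job, so jobs in
    different queues run in parallel while jobs in the same queue wait."""
    return {q.name: q.next_job() for q in queues}


# One cluster per data location, named after the data location.
cluster = {"CustomerHub": [Queue("Q1"), Queue("Q2")]}
q1, q2 = cluster["CustomerHub"]
q1.submit("INTEGRATE_A")
q1.submit("INTEGRATE_B")
q2.submit("INTEGRATE_C")
print(run_round([q1, q2]))  # {'Q1': 'INTEGRATE_A', 'Q2': 'INTEGRATE_C'}
print(run_round([q1, q2]))  # {'Q1': 'INTEGRATE_B', 'Q2': None}
```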

System Queues and Clusters

Specific queues and clusters exist for administrative jobs:

- For each data location cluster, a specific System Queue called SEM_SYS_QUEUE is automatically created. This queue is used to run administrative operations for the data location. For example, this queue processes the deployment jobs updating the data structures in the data location.

- A specific System Cluster called SEM_SYS_CLUSTER, which contains a single SEM_SYS_QUEUE queue, is used to run platform-level maintenance operations.

Job Priority

As a general rule, integration jobs are processed in their queues, and have the same priority.

There are two exceptions to this rule:

- Jobs updating the data location, principally model edition Deployment Jobs.

- Platform Maintenance Jobs that update the entire platform.

Model Edition Deployment Job

When a new model edition is deployed and requires data structure changes, DDL commands are issued as part of a job called DB Install<model edition name>. This job is launched in the SEM_SYS_QUEUE queue of the data location cluster.

This job modifies the tables used by the DML statements from the integration jobs. As a consequence, it needs to run while no integration job runs. This job takes precedence over all other queued jobs in the cluster, which means that:

- Jobs currently running in the cluster are completed normally.

- All the queues in the cluster, except the SEM_SYS_QUEUE are moved to a BLOCKED status. Queued jobs remain in the queue and are no longer executed.

- The model edition deployment job is executed in the SEM_SYS_QUEUE.

- When this job is finished, the other queues return to the READY status and resume the processing of their queued jobs.

This execution model minimizes the downtime of the integration activity while avoiding conflicts between integration jobs and model edition deployment.
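The following Python sketch models, as a single scheduling step, how the deployment job takes precedence over the other queues of the cluster. Queues and running jobs are plain dictionaries, and schedule_cluster and the job names are hypothetical; only the SEM_SYS_QUEUE name comes from the product. A queued system job waits for the running jobs to complete and then runs alone. The other queues resume afterwards.

```python
from collections import deque
from typing import Deque, Dict, List

SYSTEM_QUEUE = "SEM_SYS_QUEUE"


def schedule_cluster(queues: Dict[str, Deque[str]], running: Dict[str, str]) -> List[str]:
    """One scheduling step for a data location cluster (simplified model).

    queues  -- queue name -> FIFO of queued job names
    running -- queue name -> job currently running in that queue
    Returns the list of jobs started during this step.
    """
    started: List[str] = []
    system_jobs = queues.get(SYSTEM_QUEUE)
    if system_jobs:
        # A queued system job blocks the other queues: no new job starts there.
        # The system job itself starts once no other job is running in the cluster.
        if not running:
            job = system_jobs.popleft()
            running[SYSTEM_QUEUE] = job
            started.append(job)
        return started
    if SYSTEM_QUEUE in running:
        return started  # the other queues stay BLOCKED while the system job runs
    for name, jobs in queues.items():
        if name not in running and jobs:
            job = jobs.popleft()
            running[name] = job
            started.append(job)
    return started


queues = {SYSTEM_QUEUE: deque(["DB_INSTALL_v2"]), "Q1": deque(["INT_2"])}
running = {"Q1": "INT_1"}                 # INT_1 was already running
print(schedule_cluster(queues, running))  # prints [] (waits for INT_1)
del running["Q1"]                         # INT_1 completes normally
print(schedule_cluster(queues, running))  # prints ['DB_INSTALL_v2']
del running[SYSTEM_QUEUE]                 # the deployment job finishes
print(schedule_cluster(queues, running))  # prints ['INT_2']
```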

Platform Maintenance Job

If a job is queued in the SEM_SYS_CLUSTER/SEM_SYS_QUEUE queue, it takes precedence over all other queued jobs in the execution engine.

This means that:

- Jobs currently running in all the clusters are completed.

- All the clusters and queues except the SEM_SYS_CLUSTER/SEM_SYS_QUEUE are moved to a BLOCKED status. Queued jobs are no longer executed in these queues/clusters.

- The job in the SEM_SYS_CLUSTER/SEM_SYS_QUEUE is executed.

- When this job is finished, the other queues are moved to the READY status and resume the processing of their queued jobs.

This execution model guarantees a minimal disruption of the platform activity while avoiding conflicts between the platform activity and maintenance operations.

Queue Behavior on Error

When a job running in a queue encounters a run-time error, it behaves differently depending on the queue configuration:

- If the queue is configured to Suspend on Error, the job is suspended at the point of error and blocks the rest of the queued jobs. This job can be resumed when the cause of the error is fixed, or can be canceled by user choice.

- If the queue is not configured to Suspend on Error, the job fails automatically and the next jobs in the queue are executed. The failed job cannot be restarted.

Suspending a job on error is the preferred configuration under the following assumptions:

- All the data in a batch needs to be integrated as one single atomic operation. For example, due to referential integrity, it is not possible to integrate contacts without customers and vice versa. Suspending the job guarantees that it can be continued, after fixing the cause of the error, with the data location preserved in the same state.

- Batches and integration jobs are submitted in a precise sequence that represents the changes in the source, and need to be processed in the order they were submitted. For example, skipping a data value change in the suspended batch may impact the consolidation of future batches. Suspending the job guarantees that the jobs are processed in their exact submission sequence, and that no batch is skipped without an explicit user choice.

There may be some cases when this behavior can be changed:

- If the batches/jobs do not have strong integrity or sequencing requirements, then they can be skipped on error by default. These jobs can run in a queue where Suspend on Error is disabled.

- If the integration velocity is critical for making golden data available as quickly as possible, it is possible to configure the queue running the integration job with Suspend on Error disabled.
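The following minimal Python sketch compares the two queue behaviors on error. It assumes a queue is a FIFO of (name, callable) pairs and that a job failure is signaled by a hypothetical JobFailed exception. process_queue is an illustration, not the engine's implementation. With Suspend on Error, the failed job stays at the head of the queue and blocks the jobs behind it. Without it, the job is recorded as failed and the next job runs.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Tuple

Job = Tuple[str, Callable[[], None]]


class JobFailed(Exception):
    """Hypothetical run-time error raised by a job."""


def process_queue(jobs: Deque[Job], suspend_on_error: bool) -> Tuple[List[str], List[str], Optional[str]]:
    """Process jobs in FIFO order.

    Returns (completed, failed, suspended), where suspended is the name of the
    job left blocking the queue, or None.
    """
    completed: List[str] = []
    failed: List[str] = []
    while jobs:
        name, run = jobs[0]
        try:
            run()
        except JobFailed:
            if suspend_on_error:
                # The job stays at the head of the queue until it is restarted or canceled.
                return completed, failed, name
            failed.append(name)  # the failed job is not restarted
        else:
            completed.append(name)
        jobs.popleft()
    return completed, failed, None


def ok() -> None:
    pass


def boom() -> None:
    raise JobFailed("constraint violation")


print(process_queue(deque([("A", ok), ("B", boom), ("C", ok)]), suspend_on_error=True))
# (['A'], [], 'B')
print(process_queue(deque([("A", ok), ("B", boom), ("C", ok)]), suspend_on_error=False))
# (['A', 'C'], ['B'], None)
```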

Queue Status

A queue is in one of the following statuses:

- READY: The queue is available for processing jobs.

- SUSPENDED: The queue is blocked because a job has encountered an error or was suspended by the user. This job remains suspended. Queued jobs are not processed until the queue becomes READY again, either when the job is canceled or when it is restarted and finishes successfully. For more information, see the Troubleshooting Errors section.

- BLOCKED: When a job is running in the SEM_SYS_QUEUE queue of the cluster, the other queues are moved to this status. Jobs cannot be executed in a blocked queue and remain queued until the queue becomes READY again.

A cluster can be in one of the following statuses:

- READY: The cluster is not blocked by the SEM_SYS_CLUSTER cluster, and queues under this cluster can process jobs.

- BLOCKED: The cluster is blocked when a job is running in the SEM_SYS_CLUSTER cluster. When a cluster is blocked, all its attached queues are also blocked.

Managing the Execution Engine and the Queues

Accessing the Execution Engine

To access the execution engine:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The Execution Engine editor opens.

The Execution Engine Editor

This editor displays the list of queues grouped by clusters. Queues currently pending on suspended jobs appear in red.

The list of queues and clusters displays the following information:

- Cluster/Queue Name: the name of the cluster or queue.

- Status: Status of the queue or cluster. A queue can be either READY, SUSPENDED or BLOCKED. A cluster may be in a BLOCKED or READY status.

- Queued Jobs: For a queue, the number of jobs queued in this queue. For a cluster, the number of jobs queued in all the queues of this cluster.

- Running Jobs: For a queue, the number of jobs running in this queue (1 or 0). For a cluster, the number of jobs running in all the queues of this cluster.

- Suspend on Error: Defines the behavior of the queue on job error. See the Troubleshooting Errors section for more information.

From the Execution Engine editor, you can perform the following operations:

Stopping and Starting the Execution Engine

To stop and start the execution engine:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The Execution Engine editor opens.

- Use the Stop this component and Start this component buttons in the editor’s toolbar to stop and start the execution engine.

Changing the Queue Behavior on Error

To change the queue behavior on error:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The Execution Engine editor opens.

- Select or deselect the Suspend on Error option on a queue to set its behavior on error, or on a cluster to set the behavior of all the queues in this cluster.

- Press CTRL+S to save the configuration. This configuration is immediately active.

Opening an Execution Console for a Queue

The execution console provides the details of the activity of a given queue. This information is useful to monitor the activity of jobs running in the queue, and to troubleshoot errors.

To open the execution console:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The execution engine editor appears.

- Select the queue, right-click and select Open Execution Console.

The Console view for this queue opens. Note that it is possible to open multiple execution consoles to monitor the activity of multiple queues.

In the Console view toolbar you have access to the following operations:

- The Close Console button closes the current console. The consoles for the other queues remain open.

- The Clear Console button clears the content of the current console.

- The Display Selected Log button allows you to select one of the execution consoles currently open.

Suspending a Job Running in a Queue

To suspend a job running in a queue:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The execution engine editor appears.

- Select the queue that contains one Running Job.

- Right-click and then select Suspend Job.

The job is suspended and the queue switches to the SUSPENDED status.

Restarting a Suspended Job in a Queue

To restart a suspended job in a queue:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The execution engine editor appears. The suspended queue appears in red.

- Select the suspended queue.

- Right-click and then select Restart Job.

The job restarts from the failed step. If the execution console for this queue is open, the details of the execution are shown in the Console.

Canceling a Suspended Job in a Queue

To cancel a suspended job in a queue:

- In the Management view, expand the Job Executions node and double-click the Execution Engine node. The execution engine editor appears. The suspended queue appears in red.

- Select the suspended queue.

- Right-click and then select Cancel Job.

The job is canceled, and the queue becomes READY and starts processing queued jobs.

In the job logs, this job appears in Error status.

Managing the Integration Batch Poller

The Integration Batch Poller polls the integration batches submitted to the platform, and starts the integration jobs on the execution engine. The polling action is performed on a schedule configured in the batch poller.

Stopping and Starting the Integration Batch Poller

To stop and start the integration batch poller:

- In the Management view, expand the Job Executions node and double-click the Integration Batch Poller node. The Integration Batch Poller editor opens.

- Use the Stop this component and Start this component buttons in the editor’s toolbar to stop and start the integration batch poller.

Configuring the Integration Batch Poller

The integration batch poller configuration determines the frequency at which submitted batches are picked up for processing.
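The following minimal Python sketch estimates pick-up latency with the Every n seconds option by computing when a batch submitted at a given time would be picked up. It assumes that polls occur at fixed multiples of the interval from the poller start time, and that a batch is picked up by the first poll strictly after its submission. first_poll_after is a hypothetical helper, not a Semarchy function. Under these assumptions, a batch waits at most one interval.

```python
from datetime import datetime, timedelta


def first_poll_after(submitted: datetime, poller_start: datetime, every_seconds: int) -> datetime:
    """Return the first poll time strictly after `submitted` (simplified model)."""
    if every_seconds <= 0:
        raise ValueError("every_seconds must be positive")
    interval = timedelta(seconds=every_seconds)
    if submitted < poller_start:
        return poller_start  # assumes a poll happens when the poller starts
    polls_elapsed = (submitted - poller_start) // interval  # timedelta // timedelta -> int
    return poller_start + (polls_elapsed + 1) * interval


start = datetime(2024, 1, 1, 12, 0, 0)
print(first_poll_after(datetime(2024, 1, 1, 12, 0, 45), start, 30))  # 2024-01-01 12:01:00
```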

To configure the integration batch poller:

- In the Management view, expand the Job Executions node and double-click the Integration Batch Poller node.

- In the Integration Batch Poller editor, choose the polling frequency in the Configuration section:

- Weekly at a given day and time.

- Daily at a given time.

- Hourly at a given time.

- Every n seconds.

- With a Cron Expression.

- Press CTRL+S to save the configuration.

Optionally, set the following parameters in the Advanced section:

- Job Log Level: Select the logging level that you want for the jobs:

- No Logging disables all logging. Jobs and tasks are no longer traced in the job log. Job restartability is not possible. This level is not recommended.

- No Tasks only logs job information, and not the task details. This mode supports job restartability.

- Exclude Skipped Tasks (default) logs job information and task details, except for the tasks that are skipped.

- Include All Tasks logs job information and all task details.

- Execution Monitor Log Level: Logging level [1…3] for the execution console for all the queues.

- Enable Conditional Execution: A task may be executed or skipped depending on a condition set on the task. For example, a task may be skipped depending on parameters passed to the job. Disabling this option prevents conditional executions and forces the engine to process all the tasks.
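The four job log levels differ in which entries they record. The following minimal Python sketch models that difference, together with the effect of disabling conditional execution. LogLevel, Task, and logged_entries are hypothetical names, and the string format of the entries is arbitrary.

```python
from enum import Enum
from typing import List, NamedTuple


class LogLevel(Enum):
    NO_LOGGING = "No Logging"
    NO_TASKS = "No Tasks"
    EXCLUDE_SKIPPED_TASKS = "Exclude Skipped Tasks"  # default level
    INCLUDE_ALL_TASKS = "Include All Tasks"


class Task(NamedTuple):
    name: str
    condition_met: bool  # False means the task would be skipped


def logged_entries(level: LogLevel, job: str, tasks: List[Task],
                   conditional_execution: bool = True) -> List[str]:
    """Return the entries written to the job log for one job run."""
    if level is LogLevel.NO_LOGGING:
        return []  # nothing is traced, so the job cannot be restarted
    entries = [f"job {job}"]
    if level is LogLevel.NO_TASKS:
        return entries  # job information only
    for task in tasks:
        # With conditional execution disabled, every task is processed.
        skipped = conditional_execution and not task.condition_met
        if skipped and level is LogLevel.EXCLUDE_SKIPPED_TASKS:
            continue
        entries.append(f"task {task.name}" + (" (skipped)" if skipped else ""))
    return entries


tasks = [Task("Enrich", True), Task("OptionalCleanse", False), Task("Match", True)]
print(logged_entries(LogLevel.EXCLUDE_SKIPPED_TASKS, "INTEGRATE_ALL", tasks))
# ['job INTEGRATE_ALL', 'task Enrich', 'task Match']
```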

Managing Job Logs

The job logs list the jobs that the execution engine is currently running or has run in the past. Reviewing the job logs allows you to monitor the activity of these jobs and troubleshoot execution errors.

Accessing the Job Logs

To access the logs:

- In the Management view, expand the Job Executions node and double-click the Executions node.

- The Job Logs editor opens.

The Job Logs Editor

From this editor you can review the job execution logs and drill down into these logs.

The following actions are available from the Job Logs editor toolbar.

- Use the Refresh button to refresh the view.

- Use the Auto Fit Column Width button to adjust the size of the columns.

- Use the Apply and Manage User-Defined Filters button to filter the log. See the Filtering the Logs section for more information.

- Use the Purge Selection button to delete the entries selected in the job logs table. See the Purging the Logs section for more information.

- Use the Purge using a Filter button to purge logs using an existing or a new filter. See the Purging the Logs section for more information.

Drilling Down into the Logs

The Job Logs editor displays the log list. This view includes:

- The Name, Start Date, End Date and Duration of the job as well as the name of its creator (Created By).

- The Message returned by the job execution. This message is empty if the job is successful.

- The rows statistics for the Job:

- Row Count: Sum of all the Select, Insert, etc. metrics.

- Insert Count, Update Count, Deleted Count: number of rows selected, inserted, updated, deleted, merged as part of this job.

To drill down into the logs:

- Double-click on a log entry in the Job Logs editor.

- The Job Log editor opens. It displays all the information available in the job logs list, plus:

- The Job Definition: This link opens the job definition for this log.

- The Job Log Parameters: The startup parameters for this job. For example, the Batch ID and Load ID.

- The Current Task information, if the job is still running.

- The Tasks: In this list, task groups are displayed with the statistics for this integration job instance.


- Double-click a task group in the Tasks list to drill down into sub-task groups or tasks.

- Click on the Task Definition link to open the definition of a task.

By drilling down into the task groups down to the task, it is possible to monitor the activity of a job, and review in the definition the executed code or plug-in.

Filtering the Logs

To create a job log filter:

- In the Job Logs editor, click the Apply and Manage User-Defined Filters button and then select Search. The Define Filter dialog opens.

- Provide the filtering criteria:

- Job Name: Name of the job. Use the _ and % wildcards to represent one or any number of characters, respectively.

- Created By: Name of the job creator. Use the _ and % wildcards to represent one or any number of characters, respectively.

- Status: Select the list of job statuses included in the filter.

- Only Include: Check this option to limit the filter to the logs before/after a certain number of executions or a certain point in time. Note that the time considered is the job start time.

- Click the Save as Preferred Filter option and enter a filter name to save this filter.
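The _ and % wildcards are the same characters as those used by the SQL LIKE operator. To check what a pattern matches before saving a filter, the following minimal Python sketch translates such a pattern into a regular expression. The like_to_regex and matches helpers are hypothetical. The sketch assumes case-sensitive matching and does not handle escape characters, either of which may differ from the behavior of the Define Filter dialog.

```python
import re


def like_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate `_` (exactly one character) and `%` (any number of characters)
    into an equivalent regular expression; other characters match literally."""
    parts = []
    for ch in pattern:
        if ch == "_":
            parts.append(".")
        elif ch == "%":
            parts.append(".*")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.DOTALL)


def matches(pattern: str, value: str) -> bool:
    # fullmatch anchors the pattern at both ends, as LIKE does.
    return like_to_regex(pattern).fullmatch(value) is not None


print(matches("INTEGRATE%", "INTEGRATE_CUSTOMERS"))  # True
print(matches("JOB_1", "JOB21"))                      # True
print(matches("JOB_1", "JOB1"))                       # False
```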

Saved filters appear when you click the Apply and Manage User-Defined Filters button.

You can enable or disable a filter by marking it as active or inactive from this menu. You can also use the Apply All and Apply None to enable/disable all saved filters.

To manage job log filters:

- Click the Apply and Manage User-Defined Filters button, then select Manage Filters. The Manage User Filters editor opens.

- From this editor, you can add, delete or edit a filter, and enable or disable filters for the current view.

- Click Finish to apply your changes.

Purging the Logs

You can purge selected job logs or all job logs returned by a filter.

To purge selected job logs:

- In the Job Logs editor, select the job logs you want to purge. Press the CTRL key to select multiple lines or the SHIFT key to select a range of lines.

- Click the Purge Selection button.

- Click OK in the confirmation window.

The selected job logs are deleted.

To purge filtered job logs:

- In the Job Logs editor, click the Purge using a Filter button.

- To use an existing filter:

- Select the Use Existing Filter option.

- Select a filter from the list and then click Finish.

- To create a new filter:

- Select the Define New Filter option and then click Next.

- Provide the filter parameters, as explained in the Filtering the Logs section and then click Finish.

- To purge all logs (no filter):

- Select the Purge All Logs (No Filter) option and then click Finish.

The job logs are purged.

Troubleshooting Errors

When a job fails, depending on the configuration of the queue into which this job runs, it is either in a Suspended or Error status.

The status of the job defines the possible actions on this job.

You have several capabilities in Semarchy xDM to help you troubleshoot issues. You can drill down into the erroneous task to identify the issue, or restart the job with the Execution Console open.

To troubleshoot an error:

- Open the Job Logs.

- Double-click the log entry marked as Suspended or in Error.

- Drill down into the Task Log, as explained in the Drilling Down into the Logs section.

- In the Task Log, review the Message.

- Click the Task Definition link to open the task definition and review the SQL Statements involved, or the plug-in called in this task.

- If it is possible to immediately identify the cause of the job failure, fix it and, if possible, restart the suspended job.

- If the cause cannot be identified immediately, open the execution console for this queue and restart the suspended job. Use the console to troubleshoot the job.

- If it is possible to safely abort the job, you can cancel the job.

Configuring Data Locations

In addition to the deployed model editions and the job execution logs, the data locations also contain the configuration of:

- The Continuous Loads, used by integration specialists to push data into the data location in a continuous way.

- The Job Notification Policies, which send notifications under certain conditions when an integration job completes, for administration, monitoring, or integration automation purposes.

- The Data Purge Schedule, to reduce the data location storage volume by pruning the history of data changes and job logs.

This chapter explains how to configure these items.

Configuring Continuous Loads

Continuous loads enable integration developers to push data into the data location in a continuous way without having to take care of Load Initialization or Load Submission.

With continuous loads:

- Integration developers do not need to initialize and submit individual external loads. They directly load data into the hub using the Load ID or Name of the continuous load.

- At regular intervals, Semarchy xDM automatically creates and then submits an external load containing the data loaded through the continuous load. This external load is submitted with the program name, job, and submitter name configured for the continuous load.

- The continuous load remains, with the same Load ID and Name. Subsequent data loads made with this continuous load are processed at the next interval.
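The lifecycle described above can be captured in a small mental model. The following Python sketch is illustrative only and does not show how Semarchy xDM is implemented. The class and member names (ContinuousLoad, ExternalLoad, load, tick) are hypothetical. The sketch also assumes that no external load is submitted when nothing was loaded during an interval.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExternalLoad:
    """An external load created and submitted on behalf of a continuous load."""
    program_name: str
    job_name: str
    submitted_by: str
    records: List[dict]


@dataclass
class ContinuousLoad:
    """Hypothetical model: one permanent Load ID/Name that buffers data."""
    load_id: int
    name: str
    program_name: str
    on_submit_job: str
    submit_as: str
    submit_interval_s: int
    active: bool = True
    pending: List[dict] = field(default_factory=list)
    last_submit_s: float = 0.0

    def load(self, record: dict) -> None:
        # Integration code writes directly under this load's ID: no init/submit calls.
        self.pending.append(record)

    def tick(self, now_s: float) -> Optional[ExternalLoad]:
        """Called periodically; submits pending data once the interval has elapsed."""
        if not self.active or now_s - self.last_submit_s < self.submit_interval_s:
            return None  # inactive loads never submit; otherwise wait for the interval
        self.last_submit_s = now_s
        if not self.pending:
            return None  # assumption: nothing loaded, nothing submitted
        submitted = ExternalLoad(self.program_name, self.on_submit_job,
                                 self.submit_as, self.pending)
        self.pending = []  # the continuous load stays, with the same ID and name
        return submitted
```

In this model, a scheduler would call tick() periodically. Each call that returns an ExternalLoad corresponds to one automatic submission, while load_id and name never change.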

Continuous loads are configured and managed by the data location manager. Unlike external loads, they cannot be created, submitted or canceled via integration points.

To configure a continuous load:

- In the Management view, expand the Data Locations node, then expand the data location for which you want to configure a continuous load.

- Right-click the Continuous Loads node and select New Continuous Load. The Create New Continuous Load wizard opens.

- Enter the following values:

- Active: Check this option to make the continuous load active. Only active loads integrate data at a regular interval.

- Name: Name of the continuous load. This name is used to uniquely identify this load.

- Program Name: This value is for information only. It describes the submitted external loads.

- On Submit Job: Integration job submitted with the external loads. This job is selected among those available in the deployed model edition.

- Submit Interval: Interval, in seconds, between submissions.

- Submit as: Name of the user submitting the external loads. This user may or may not be a user defined in the security realm of the application server.

- Click Finish to close the wizard. The Continuous Load editor opens.

- In the Description field, optionally enter a description for this load.

- Press CTRL+S to save the editor.

Note the Load ID and Name values of the continuous load as you will need them for integrating data using this load.

The data location manager can deactivate a continuous load to prevent it from processing its data.

To activate or deactivate continuous loads:

- In the Management view, expand the Data Locations node, then expand the data location that contains the continuous loads.

- Double-click the Continuous Loads node. The Data Location editor opens on the Continuous Loads tab.

- Select one or more continuous loads in the list, and then click the Activate or Deactivate button in the toolbar.

- Press CTRL+S to save the editor.

Configuring Job Notification Policies

Notifications tell users or applications when a job completes or when operations are performed in workflows, for example, when a task is assigned to a role.

There are two types of notifications:

- Job Notifications are issued under certain conditions when an integration job completes. They are used for administration, monitoring, or integration automation, and are configured with Notification Policies in the data locations.

- Workflow Notifications are emails sent to users when operations are performed in a workflow. They are configured in workflow transitions and tasks.

Both families of Notifications are issued via Notification Servers.

Notification Server Types

Notification recipients may be users or systems. The type of notification sent, as well as the recipient, depends on the type of notification server configured.

Each notification server uses a Notification Plug-in that:

- defines the configuration parameters for the notification server,

- defines the configuration and form of the notification,

- sends the notifications via the notification servers.
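The three responsibilities listed above map naturally onto an abstract interface. The following Python sketch is hypothetical and is not the Semarchy xDM plug-in API. The class and method names are invented for illustration. FilePlugin is a toy stand-in for the built-in File plug-in that writes only to the local file system. Like the File server type described later, it treats the policy Path as relative to the server Root Path.

```python
import os
from abc import ABC, abstractmethod
from typing import Dict, List


class NotificationPlugin(ABC):
    """Hypothetical plug-in contract (not the Semarchy xDM API)."""

    @abstractmethod
    def server_parameters(self) -> List[str]:
        """Role 1: parameters configured once on the notification server."""

    @abstractmethod
    def notification_properties(self) -> List[str]:
        """Role 2: properties that shape each notification (path, subject, ...)."""

    @abstractmethod
    def send(self, server: Dict[str, str], props: Dict[str, str], payload: str) -> None:
        """Role 3: deliver one notification through the configured server."""


class FilePlugin(NotificationPlugin):
    """Toy stand-in for the File plug-in: writes the payload to a local file."""

    def server_parameters(self) -> List[str]:
        return ["Root Path"]

    def notification_properties(self) -> List[str]:
        return ["Path", "File Name", "Append", "Charset"]

    def send(self, server: Dict[str, str], props: Dict[str, str], payload: str) -> None:
        # Path is treated as relative to the server's Root Path.
        folder = os.path.join(server["Root Path"], props.get("Path", "").lstrip("/"))
        os.makedirs(folder, exist_ok=True)
        # Append adds to the existing file; otherwise the file is overwritten.
        mode = "a" if props.get("Append", "false").lower() == "true" else "w"
        target = os.path.join(folder, props["File Name"])
        with open(target, mode, encoding=props.get("Charset", "UTF-8")) as f:
            f.write(payload)
```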

Semarchy xDM is provided with several built-in notification plug-ins:

- JavaMail: The notification is sent in the form of an email via a Mail Session server configured in the application server, and referenced in the notification server. For more information about configuring a Mail Session, see the Semarchy xDM Installation Guide.

- SMTP: The notification is sent in the form of an email via an SMTP server entirely configured in the notification server.

- File: The notification is issued as text in a file stored in a local directory or in an FTP/SFTP file server.

- HTTP: The notification is issued as a GET or POST request sent to a remote HTTP server. Use this server type to call a web service with the notification information.

- JMS: The notification is issued as a JMS message in a message queue.

A single notification server of either the JavaMail or SMTP type can be used to send Workflow Notifications. This server is flagged as the Workflow Notification Server.

Any server can be used to send Job Notifications. Each Job Notification Policy specifies the notification server it uses.

Configuring Notification Servers

Notification servers are configured at platform-level. Refer to the Configuring Notification Servers section in the Semarchy xDM Administration Guide for more details about notification servers configuration.

Configuring a Job Notification Policy

With a notification server configured, it is possible to create notification policies using this server.

To create a notification policy:

- In the Management view, expand the Data Locations node, then expand the data location for which you want to configure the policy.

- Right-click the Job Notification Policies node and select New Job Notification Policy. The Create New Job Notification Policy wizard opens.

- In the first wizard page, enter the following information:

- Name: Internal name of the notification policy.

- Label: User-friendly label for the notification policy. When the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

- Notification Server: Select the notification server used to send these notifications.

- Use Complex Condition: Check this option to use a freeform Groovy Condition. Leave it unchecked to define the condition using a form.

- Click Next.

- Define the job notification condition. This condition applies to the job that completes.

- If you have checked the Use Complex Condition option, enter the Groovy Condition that must be true to issue the notification. See Groovy Condition for more information.

- If you have not checked the Use Complex Condition option, use the form to define the condition to issue the notification.

- Job Name Pattern: Name of the job. Use the _ and % wildcards to represent one character or any number of characters, respectively.

- Notify on Failure: Select this option to send a notification when a job fails or is suspended.

- Notify on Success: Select this option to send a notification when a job completes successfully.

- … Count Threshold: Select the maximum number of errors, inserts, etc. allowed before a notification is sent.

If you define a Job Name Pattern, Notify on Failure, and a Threshold, a notification is sent if a job matching the pattern fails or reaches the threshold.

- Click Next.

- Define the job notification Payload. This payload is text content, but you can also use Groovy to generate it programmatically. See Groovy Template for more information.

This payload has a different purpose depending on the type of notification:

- JavaMail or SMTP: The body of the email.

- File: The content written to the target file.

- JMS: The payload of the JMS message.

- HTTP: The content of a POST request.

- Click Next.

- Define the Notification Properties. These properties depend on the type of notification server:

- JavaMail or SMTP:

- Subject: Subject of the email. The subject may be a Groovy Template.

- To, CC: List of recipients of this email. These recipients are roles. Each of these roles points to a list of email addresses.

- Content Type: Email content type, for example, text/html or text/plain. This content type must correspond to the generated payload.

- File:

- Path: Path of the file in the file system. The path may be a Groovy Template. Make sure to use only forward slashes (/) for this path. Note that this path is relative to the Notification Server’s Root Path location. For example, if you set the Path to /new and the Notification Server Root Path to /work/notifications, then the notification files are stored in the /work/notifications/new folder.

- Append: Check this option to append the payload to the file. Otherwise, the file is overwritten.

- Charset: Charset used for writing the file. Typically UTF-8, UTF-16 or ISO-8859-1.

- File Name: Name of the file to write. The file name may be a Groovy Template.

- Root Path: Provide the root path for storing the notification file.

- HTTP:

- Method: HTTP request method (POST or GET).

- Request Path: Path of the request in the HTTP server. The request path may be a Groovy Template.

- Parameters: HTTP parameters passed to the request in the form of a list of property=value pairs separated by a & character. If no parameter is passed and the method is GET, all the notification properties are passed as parameters. The parameters may be a Groovy Template.

- Headers: HTTP headers passed to the request as header=value pairs, with one header per line.

- Content Type: Content type of the payload, for example, text/html or text/plain. This content type must correspond to the generated payload.

- Failure Regexp: If the server returns an HTTP Code 200, the response payload is parsed with this regular expression. If the entire payload matches this expression, then the notification is considered failed. For example, to detect the NOTIFICATION FAILED string in the payload, set the Failure Regexp value to (.*)NOTIFICATION FAILED(.*).

- JMS:

- JMS Destination: JNDI URL of the JMS topic or queue. The URL is typically java:comp/env/jms/queue/MyQueue if a queue factory is declared as jms/queue/MyQueue in the application server. The destination may be a Groovy Template.

- Message Type: Type of JMS Message sent: TextMessage, MapMessage or Message. See Message Types for more information. When using a MapMessage, the payload is ignored and all properties are passed in the MapMessage.

- Set Message Properties: Check this option to automatically set all notification properties as message properties. Passing properties in this form simplifies message filtering.


- Press CTRL+S to save the configuration.
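Two of the HTTP properties above follow precise rules that are easy to misread. The first is the fallback when Parameters is empty. The second is that Failure Regexp must match the entire response payload. The following Python sketch illustrates both under stated assumptions. The function names and example property names are hypothetical. Treating any non-200 status as a failure is also an assumption, because the text only defines the behavior for HTTP 200.

```python
import re
from typing import Dict, Optional
from urllib.parse import urlencode


def build_query(method: str, parameters: Optional[str],
                properties: Dict[str, str]) -> str:
    """Query string sent with the notification request."""
    if parameters:
        # Explicit property=value pairs separated by '&' are sent as configured.
        return parameters
    if method.upper() == "GET":
        # No Parameters and GET: every notification property becomes a parameter.
        return urlencode(properties)
    return ""  # POST without Parameters: the payload travels in the request body


def is_failed(status: int, body: str, failure_regexp: Optional[str]) -> bool:
    """Decide whether a notification failed, given the HTTP response."""
    if status != 200:
        return True  # assumption: the text only defines behavior for HTTP 200
    if not failure_regexp:
        return False
    # "The entire payload matches": fullmatch, not search.
    return re.fullmatch(failure_regexp, body) is not None
```

Using re.fullmatch reflects the "entire payload" wording, which is why the sample expression wraps the marker in (.*). With Python's defaults, . does not match line breaks, so a multi-line payload would need an adjusted expression.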

Using Groovy for Notifications

The Groovy scripting language is used to customize the notification. See http://groovy-lang.org/documentation.html for more information about this language.

Groovy Condition

When using a complex condition for triggering the notification, the condition is expressed in the form of a Groovy expression that returns true or false. If this condition is true, then the notification is triggered.

This condition may use properties of the job that completes. Each property is available as a Groovy variable.

The available properties are described in the Job Notification Properties section.
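A job notification condition, whatever its form, is a predicate over the properties of the completing job. The following Python sketch expresses the form-based condition from the policy wizard in that way. It is written in Python rather than Groovy and is not product code. It includes the _ and % wildcards of the Job Name Pattern. The property names (name, status, errorCount) and status values (ERROR, SUSPENDED, DONE) are hypothetical placeholders; the actual names are listed in the Job Notification Properties section. Only an error count threshold is modeled.

```python
import re
from typing import Dict, Optional


def like_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a Job Name Pattern into a regex: _ = one char, % = any run."""
    parts = []
    for ch in pattern:
        if ch == "_":
            parts.append(".")
        elif ch == "%":
            parts.append(".*")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts))


def should_notify(job: Dict[str, object], name_pattern: Optional[str] = None,
                  on_failure: bool = False, on_success: bool = False,
                  error_threshold: Optional[int] = None) -> bool:
    """Form-based condition as a predicate over the completing job's properties."""
    # A job that does not match the pattern never triggers the policy.
    if name_pattern and not like_to_regex(name_pattern).fullmatch(str(job["name"])):
        return False
    status = job["status"]
    if on_failure and status in ("ERROR", "SUSPENDED"):
        return True
    if on_success and status == "DONE":
        return True
    # Reaching the threshold is enough on its own, even if the job succeeded.
    if error_threshold is not None and int(job["errorCount"]) >= error_threshold:
        return True
    return False
```

A complex condition states the same kind of logic directly as a Groovy expression over the job property variables.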

You can click the Edit Expression button to open the condition editor.

In the condition editor:

- Double-click one of the Properties in the list to add it to the condition.

- Click the Test button to test the condition against the notification properties provided in the Test Values tab.

- In the Test Values tab, if you enter an existing Batch ID and click the > button, the properties from this batch are retrieved as test values.

Sample conditions are given below: