Welcome to Semarchy xDM.

This guide contains information about publishing and consuming data in an MDM hub generated by Semarchy xDM.

Preface

Overview

Using this guide, you will:

- Understand the Integration Component and Jobs of Semarchy xDM.

- Learn how to publish data into an MDM hub through an external load.

- Learn how to consume data from an MDM hub.

- Learn how to interact with applications and workflows programmatically.

Audience

Document Conventions

This document uses the following formatting conventions:

| Convention | Meaning |

|---|---|

boldface | Boldface type indicates graphical user interface elements associated with an action, or a product specific term or concept. |

italic | Italic type indicates special emphasis or placeholder variable that you need to provide. |

| Monospace type indicates code example, text or commands that you enter. |

Other Semarchy Resources

In addition to the product manuals, Semarchy provides other resources available on its web site: https://www.semarchy.com.

Obtaining Help

There are many ways to access the Semarchy Technical Support. You can call or email our global Technical Support Center (support@semarchy.com). For more information, see https://www.semarchy.com.

Feedback

We welcome your comments and suggestions on the quality and usefulness

of this documentation.

If you find any error or have any suggestion for improvement, please

mail support@semarchy.com and indicate the title of the documentation

along with the chapter, section, and page number, if available. Please

let us know if you want a reply.

Introduction to Semarchy xDM

Semarchy xDM is the Intelligent Data Hub platform for Master Data

Management (MDM), Reference Data Management (RDM), Application Data Management

(ADM), Data Quality, and Data Governance.

It provides all the features for data

quality, data validation, data matching, de-duplication, data authoring,

workflows, and more.

Semarchy xDM brings extreme agility for defining and implementing data management applications and releasing them to production. The platform can be used as the target deployment point for all the data in the enterprise or in conjunction with existing data hubs to contribute to data transparency and quality.

Its powerful and intuitive environment covers all use cases for setting up a successful data governance strategy.

Introduction to Integration

Integration Capabilities

Semarchy xDM certifies golden data from source applications' data and allows applications to consume this golden data.

Integration with Semarchy xDM is performed in several ways:

- Publishing source data in an MDM hub deployed by Semarchy xDM, and have the hub certify golden records from this data.

- Consuming golden or master data from the MDM hub.

- Interacting with Workflows Instances to manage data.

Integration Methods

Semarchy xDM provides several integration services, including:

- REST API, accessible from the Semarchy xDM application.

- SQL Interfaces, that is a set of functions stored in the repository schema, plus a set of tables stored in the Data Location Schema.

Using these components, applications and middleware products can consume and publish data in the hub.

Integration Concepts

Publishers and Consumers

In the context of integration, we refer to applications publishing source data into the hub as Publishers.

We also refer to application consuming golden data from the hub as Consumers.

These roles are not exclusive. An application can publish data into the MDM hub and consume certified golden data from the hub to update its records.

For example, a business intelligence application is typically a consumer-only as it consumes only golden records for reporting purposes. An operational application may publish its contact information to the MDM hub for certification and update its contact information with the certified golden records.

Publishers

This term refers to the original application from which the data originates, and not necessarily to the middleware tool or user-designed program actually doing the data movement. The publisher is typically an operational application such as a Customer Relationship Management (CRM), a Product Lifecycle Management (PLM) or an Enterprise Resource Planner (ERP).

A middleware tool may be an Extract-Transform-Load (ETL), Enterprise Service Bus (ESB) or any other data integration product. It may also be a user-designed program or script implemented in SQL, Java, etc. The middleware tool or user-designed program communicates with the publisher to extract data and communicates with the MDM Hub using the publishing methods described in this guide to load this data.

Consumers

Similarly to the publisher, this term refers to the applications consuming data from the hub. This consumption usually takes place via a middleware tool or a user-designed program, and uses the consumption methods described in this guide.

Data Consumption

Data consumption is available via the REST API or SQL through the tables stored in the Data Location Schema.

Various views on the data can be used for consumption, including:

- Golden Data enriched, standardized, validated, de-duplicated and certified in the hub.

- Master Data pushed in the hub by source systems.

- Errors raised by records pushed in the hub when violating the data quality rules defined in the model.

Data Consumption is always done on a specific Data Location.

Data Publishing

Publishing Concepts

Publishing source data for certification into golden data is performed as a transaction. Such publisher transaction is called an External Load. It is a Semarchy xDM transaction identified by a sequential Load ID.

An External Load represents a source data load transaction.

When an External Load is submitted with an Integration Job Name, a Batch - identified by a Batch ID - is created, and the Job starts processing the data published in this load.

A Batch represents a transaction certifying loaded data and writing in the hub the resulting golden data.

External Load Lifecycle

An external load lifecycle is described below:

- Initialize the External Load

- The middleware uses the SQL Interface or the REST API to initialize an external load.

- It receives from the platform a Load ID identifying the external load.

- At that stage, an external load transaction is open with the platform.

- Load Data

- The middleware inserts data into the landing tables in the data location schema. This done using the SQL Interface or the REST API.

- When loading data, the middleware provides both the Load ID and a Publisher Code corresponding to the publisher application.

- Submit the External Load

- The middleware uses the SQL Interface or the REST API to submit the external load.

- It provides the Load ID as well as the name of the Integration Job to trigger with this submission.

- The platform creates a Batch to process the data published in this external load.

- It receives from the platform a Batch ID identifying the batch that is processed by the platform for this external load.

- At that stage, the external load transaction is closed.

The middleware can also Cancel the External Load to abort the external load instead of submitting it.

Continuous Loads

Continuous loads enable integration developers to push data into the MDM hub in a continuous way without having to take care of Load Initialization or Load Submission.

With continuous loads:

- Integration developers do not need to initialize and submit individual external loads. They directly load data into the hub using the Load ID or Name of the continuous load.

- At regular intervals, Semarchy xDM automatically creates then submits an external load with the data loaded in the continuous load. This external load is submitted with a program name, a job, and a submitter name.

- The continuous load remains, with the same Load ID and Name. Subsequent data loads made with this continuous load are processed at the next interval.

Configuring Continuous Loads

Continuous loads are configured and managed by the administrator in a data location. Unlike external loads, they cannot be created, submitted or canceled via integration points.

Using Continuous Loads

The SQL Interface or the REST API can use continuous loads via their Load ID or Name to push data into the hub without having to perform the load ID management operation.

- The REST API calls support both the Load ID or the Name of the continuous load in their URL.

- The SQL interface exposes tables with a

B_LOADIDcolumn to load the Load ID of the continuous load.

It provides a utility function calledGET_CONTINUOUS_LOAD, stored in the repository (and in theINTEGRATION_LOADpackage for Oracle), to returns the Load ID for a given continuous load name.

vLoad_id := <repository_schema>.INTEGRATION_LOAD.GET_CONTINUOUS_LOADID(

'<continuous_load_name>' /* Name of the continuous load. */

);vLoad_id := <repository_schema>.get_continuous_loadid(

'<continuous_load_name>' /* Name of the continuous load. */

);EXEC <repository_schema>.dbo.GET_CONTINUOUS_LOADID

@LOAD_ID OUTPUT,

@P_CONTINUOUS_LOAD_NAME = '<continuous_load_name>' /* Name of the continuous load. */Batch Lifecycle

When an external load is submitted, the following operations take place:

- The platform creates a batch and returns to the submitter the Batch ID

- The integration batch poller picks up the batch on its schedule:

- It creates a Job instance using the Job Definition which name is provided in the submit action.

- It moves the job into the Queue specified in the job definition

- The Execution engine processes this job in the queue.

- When the job completes, the batch is considered finished.

Even when multiple loads take place simultaneously, the sequence into which the external loads are submitted defines the order into which the data is processed by the integration jobs and golden data certified from this source data.

Interfaces for Integration

This section describes the interfaces available for integration.

REST API

This interface is available for application to consume or publish data from the hub in a programmatic way.

SQL Interface

This interface is available for data integration and ETL products to publish or consume data. It is composed of the Integration Load Functions and the Data Location Database Schema.

The Integration Load Functions are stored in the repository schema, contains function to manage the External Load Lifecycle, for the purpose of publishing data in batch mode.

The Data Location Database Schema stores a set of tables that contain the hub data.

This schema contains the landing (or staging) tables used to publish data into the hub. It contains also the golden records tables and the intermediate tables handled by the integration job that create golden

records from the source records. There is a single data structure for the entire hub, and a single set of tables for each entity regardless of the model edition.

The data location schema is accessed for integration purposes to:

- Publish data in batch mode in the landing tables.

- Consume data from the golden data and master data tables.

The structure of the tables stored in the data location schema is detailed in the Table Structures section.

Data Certification

This section explains the artifacts involved when certify data published into the hub.

Integration Job

The integration job processes the data submitted in an external load and runs this data through the Certification Process, which is a series of steps to create and certify golden data out of this source data.

This job is generated from the certification rules defined at design time, and it uses the data structures automatically created in the MDM hub when deploying the model edition.

An integration job is a sequence of tasks used to certify golden data for a group of entities. The model edition deployed in the data location brings several integration jobs definitions with it. Each of these job definitions is designed to certify data for a group of entities.

Integration jobs definitions as well as integration job logs are stored in the repository

For example, a multi-domain hub contains entities for the PARTY domain and for the PRODUCTS domain, and has two integration jobs definition:

- INTEGRATE_CUSTOMERS certifies data for the Party, Location, etc… entities.

- INTEGRATE_PRODUCTS certifies data for the Brand, Product, Part, etc… entities.

Integration jobs are started when source data has been loaded in the landing tables and is submitted for golden data certification.

Each integration job is the implementation of the overall certification process template. It may contain all or some of the steps of this process. The following section details the structure of the certification process.

Certification Process

Introduction to the Certification Process

The Certification Process creates consolidated and certified Golden Records from various sources:

- Source Records, pushed into the hub by middleware systems on behalf of upstream applications (known as the Publishers).

Depending on the type of the entity, these records are either converted to golden records directly (basic entities) or matched and consolidated into golden records (ID and fuzzy matched entities). When matched and consolidated, these records are referred to as Master Records. The golden records they have contributed to create are referred to as Master Based golden records. - Source Authoring Record, authored by users in the MDM applications.

When a user authors data in an MDM application, depending on the entity type and the application design, he performs one of the following operations:- He creates new golden records or updates existing golden records that exist only for the hub, and do not exist in any of the publishers. These records are referred to as Data Entry Based golden records This pattern is allowed for all entities, but basic entities support only this pattern.

- He creates or updates master records on behalf of publishers, submitting these records to matching and consolidation. This pattern is allowed only for ID and fuzzy matched entities.

- He overrides golden values resulting from the consolidation of records pushed by publishers. This pattern is allowed only for ID and fuzzy matched entities.

- Delete Operations, made by users on golden and master records from entities with delete enabled.

- Matching Decisions, taken by data stewards for fuzzy matched entities, using duplicates managers. Such decisions include confirming, merging or splitting groups of matching records as well as accepting/rejecting suggestions.

The certification process takes these various sources, applies the rules and constraints defined in the model in order to create, update or delete the golden data that business users browse using the MDM applications and that downstream application consume from the hub.

This process is automated and involves several phases, automatically generated from the rules and constraints, which are defined in the model based on the functional knowledge of the entities and the publishers involved.

The following sections describe the details of the certification process for ID, fuzzy matched and basic entities, and the delete process for all entities.

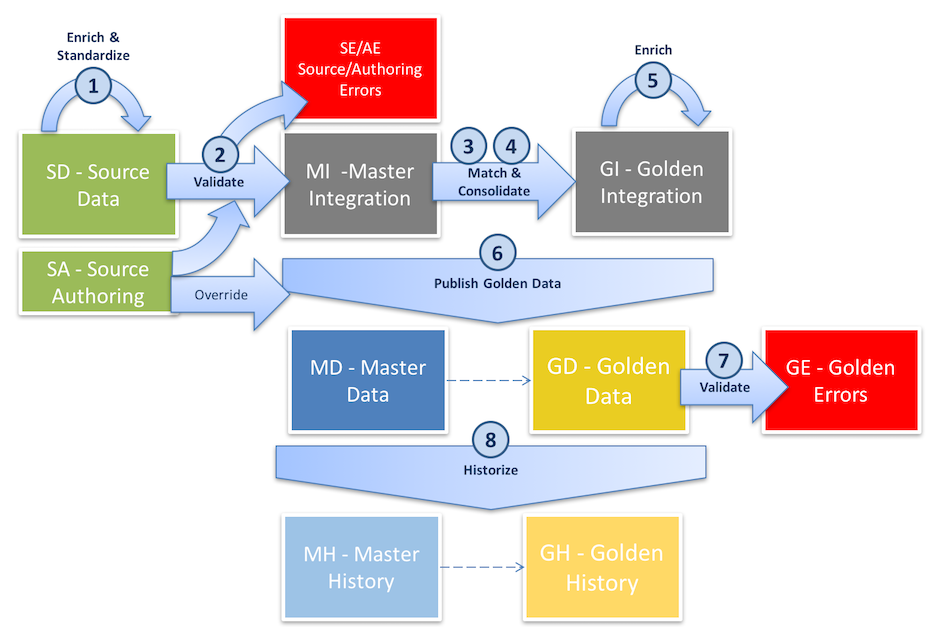

Certification Process for ID and Fuzzy Matched Entities

The following figure describes the certification process and the various Table Structures involved in this process.

The certification process involves the following steps:

- Enrich and Standardize Source Data: Source Authoring Records (in the SA tables) created or updated on behalf of publishers and Source Records (in the SD tables) are enriched and standardized using the SemQL and API (Java Plug-in or REST Client) Enrichers, executed Pre-Consolidation.

- Validate Source Data: The enriched and standardized records are checked against the various Constraints executed Pre-Consolidation. Erroneous records are ignored for the rest of the processing and the errors are logged

into the SE - source errors - and AE - authoring errors - tables.

Note that source authoring records are enriched and validated only for basic entities. For ID and fuzzy matched, source authoring records are not enriched and validated.

- Match and Find Duplicates: For fuzzy matched entities, this step matches pairs of records using a Matcher and creates groups of matching records (match groups). For ID matched entities, matching is simply made on the ID value.

The matcher works as follows:- It runs a set of Match Rules. Each rule has two phases: first, a binning phase creates small bins of records. Then a matching phase compares each pair of records within these small bins to detects duplicates.

- Each match rule has a Match Score that expresses how strongly the pair of records matches. A pair of records that match according to one or more rules is given the highest Match Score of all these rules. Match pairs with scores and rules are stored in the DU table.

- When a match group is created, an overall Confidence Score is computed for that group. According to this score, the group is marked as a suggestion or immediately merged, and possibly confirmed. These automated actions are configured in the Merge Policy and Auto-Confirm Policy of the matcher.

- Matching Decisions taken by users on match groups (stored in the UM table) are applied at that point, superseding the matcher’s choices.

- Consolidate Data: This step consolidates match group duplicates into single consolidated records. The Consolidation Rules created in the Survivorship Rules defines how the attributes consolidate. Integration master records and integration golden (consolidated) records are stored at that stage in the GI and MI tables

- Enrich Consolidated Data: The SemQL and API (Java Plug-in or REST Client) Enrichers executed Post-Consolidation run to standardize or add data to the consolidated records.

- Publish Certified Golden Data: This step finally publishes the Golden Records for consumption.

The final master and golden records are stored in the GD and MD tables

- This step applies possible overrides from Source Authoring Record (Involving the SA, SF, GA and GF tables) , according to the Override Rules defined in the Survivorship Rules.

- This step also creates or updates Data Entry Based golden records (that exist only in the MDM), from Source Authoring Records.

- Validate Golden Data: The quality of the golden records is checked against the various Constraints executed on golden records (Post-Consolidation). Note that unlike the pre-consolidation validation, it does not remove erroneous golden records from the flow but flags them as erroneous. The errors are also logged (in the GE tables) .

- Historize Data: Golden and master data changes are added to their history (stored in the GH and MH tables) if historization is enabled.

Source Authoring Records are not enriched or validated for ID and fuzzy matched entities as part of the certification process. These records should be enriched and validated as part of the steppers into which users author the data.

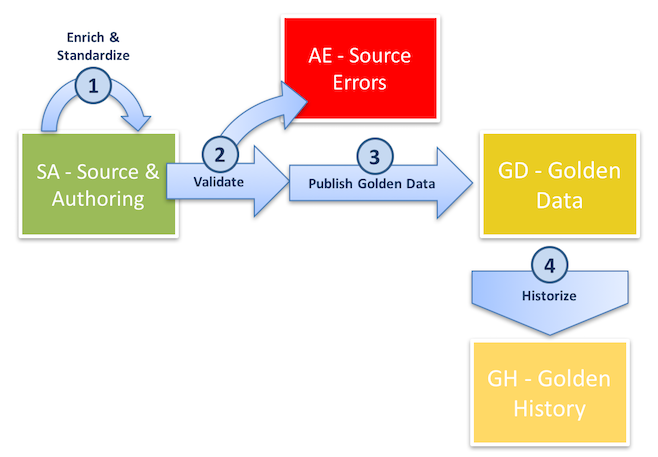

Certification Process for Basic Entities

The following figure describes the certification process and the various Table Structures involved in this process.

The certification process involves the following steps:

- Enrich and Standardize Source Data: During this step, the Source Records and Source Authoring Records (both stored in the SA tables) are enriched and standardized using SemQL and API (Java Plug-in and REST Client) Enrichers executed Pre-Consolidation.

- Validate Source Data: The quality of the enriched source data is checked against the various Constraints executed Pre-Consolidation. Erroneous records are ignored for the rest of the processing and the errors are logged (in the AE tables) .

- Publish Certified Golden Data: This step finally publishes the Golden Records for consumption (in the GD tables) .

- Historize Data: Golden data changes are added to their history (stored in the GH table) if historization is enabled.

Note that:

- Basic entities do not separate Source Records from Source Authoring Records. Both follow the same process.

- Source data for basic entities does not pass through enrichers or validations executed post-consolidation.

Deletion Process

A Delete Operation (for basic, ID or fuzzy matched entities) on a golden record involves the following steps:

- Propagate through Cascade: Extends the deletion to the child records directly or indirectly related to the deleted ones with a Cascade configuration for Delete Propagation.

- Propagate through Nullify: Nullifies child records related to the deleted ones with a Nullify configuration for the Delete Propagation.

- Compute Restrictions: Removes from deletion the records having related child records and a Restrict configuration for the Delete Propagation. If restrictions are found, the entire delete is canceled as a whole.

- Propagate Delete to owned Master Records to propagate deletion to the master records attached to deleted golden records. This step only applies to ID and fuzzy matched entities.

- Publish Deletion: Tracks record deletion (in the GX and MX tables), with the record values for soft deletes only, and then removes the records from the golden and master data (in the GD and MD tables). When doing a hard delete, this step deletes any trace of the records in every table (SA, SD, UM, MH, GH, etc.). The only trace of a hard delete is the ID (without data) of the deleted master and golden records, in the GX and MX tables. Deletes are tracked in the history for golden and master records (in the MH and GH tables), if historization is configured.

For more information about these various phases and how the certification process is designed, refer to the Certification Process Design chapter in the Semarchy xDM Developer’s Guide.

Table Structures

This section describes the general structure of the tables involved in the certification process.

Tables

For each entity, a pre-defined set of tables is created. These tables are named with the following convention: <Prefix>_<Physical Table Name>

The prefix is automatically generated depending on the table nature (Source Data, Source Error, etc.), and the Physical Table Name is set in the entity definition.

The following list describes the tables created for a given entity.

| Table | Name | Applicable Entity Type | Access | Description |

|---|---|---|---|---|

| Source Data | Fuzzy and ID Matched | Read/Write | This table contains source data loaded by publishers, using the middleware. |

| Source Authoring | All | Read (Write for Basic Entities) | For matching entities, this table contains both the records created in the hub by the user as well as possible overrides performed on consolidated records. In that case, it is associated to a |

| Source Errors | All | Read | This table contains the errors detected during the pre-consolidation validation phase. It is named |

| Duplicates | Fuzzy Matched | Read | This table stores references to pairs of records detected as duplicates in the matching phase, with the match rule and match score. |

| User Matches | Fuzzy Matched | Read/Write | This table contains the master records that have been manually re-grouped by users using duplicate managers. You can use this table to perform similar operations programmatically. See the Managing Duplicates Using SQL section for examples of duplicate management operations you can perform using this table. |

| Master Integration | Fuzzy and ID Matched | Internal | This table contains the records that have passed the pre-consolidation validation phase and that have been grouped by the matching phase. |

| Master Data | Fuzzy and ID Matched | Read | This table contains the master records, which are the enriched and validated source records that have participated in the creation of golden records. This table can be accessed to review groups/duplicates or to refer to the source data. |

| Deleted Master | Fuzzy and ID Matched | Read | This table contains the deleted master records logs, plus the deleted data for soft deletes. |

| Master History | Fuzzy and ID Matched | Read | This table contains the master records history. |

| Golden Integration | Fuzzy and ID Matched | Internal | This table contains the records that have been consolidated but not yet passed through the post-consolidation validation phase. |

| Golden Authoring | Fuzzy and ID Matched | Internal | This table contains data overrides performed on golden records . It is associated to a |

| Deleted Golden | All | Read | This table contains the deleted golden records logs, plus the deleted data for soft deletes. |

| Golden History | All | Read | This table contains the golden records history. |

| Golden Data | All | Read | This table contains the golden records, including those flagged as erroneous by post-consolidation validations. |

| Golden Errors | Fuzzy and ID Matched | Read | This table contains the errors detected during the post-consolidation validation phase on golden records. |

For publishing and consuming data, internal tables are not needed. The structure of these tables is not explained in this document.

Columns

Attribute Columns

Attributes appear in the tables' structure as follows:

- Attributes using list of values, built-in and user-defined types are mapped to single columns with a database type and length corresponding to the attribute type. The column name for such a simple attribute is the Physical Column Name value specified in the simple attribute definition.

- Complex attributes are mapped on columns named with the following

convention:

<Complex Attribute Physical Prefix><Definition Attribute Physical Column Name>

For example: The Country (COUNTRY) and City (CITY) definition

attributes of the Geocoded Address complex attribute (Prefix: GEO)

will be mapped to columns named GEOCOUNTRY and GEOCITY.

Built-in Columns

In addition to the attribute columns, built-in columns are added to the tables' structure, and are used in the certification process.

They track for example:

- Batch information:

B_BATCHID - Cause of a rejected record:

B_CONSTRAINTNAME,B_CONSTRAINTTYPE - Class information:

B_CLASSNAME - Match/Merge information:

B_MATCHGRP,B_CONFSCORE,B_HASSUGGMERGE,B_SUGGMERGEID,B_SUGGMERGECONFSCORE,B_SUGGMERGEMASTERSCOUNT,B_CONFIRMATIONSTATUS,B_MASTERSCOUNT,B_ISCONFIRMED - Delete operation information:

B_DELETETYPE,B_DELETEDATE,B_DELETEAUTHOR,B_DELETEOPERATION,

The following list describes these columns.

| Column Name | Exists in Tables | Datatype | Description |

|---|---|---|---|

| GA, SA |

| For matched entities, type of authoring operation:

|

| AE, GA, GD, GE, GF, GI, GP, GX, MD, MI, MX, SE |

| ID of the batch into which the new data, data changes, overrides or duplicate decisions were applied, or during which errors were detected. |

| UM |

| Cause that made the record part of the duplicate management transaction. Possible causes are:

|

| AE, GA, GD, GE, GF, GH, GI, GP, GX, MD, MH, MI, MX, SA, SD, SE, SF, UM |

| Unique name of class / entity to which this record belongs. See Class Name for more information. |

| GD, GH, GI, GX, MD, MH, MI, MX, UM |

| Confirmation status for duplicate management:

|

| MD, MI, UM | Varies | ID of the confirmed golden record this master record is attached to. |

| GD, GH, GI, GX, UM |

| Confidence Score of the golden record. It is the average of the match scores in the match group. |

| AE, GE, SE |

| For error records, name of the constraint causing this error. |

| AE, GE, SE |

| For error records, type of the constraint causing this error. |

| SA |

| ID of the original record copied into this record. |

| DU, GA, GD, GH, GI, GX, MD, MH, MI, MX, SA, SD, UM |

| For golden data (GD, GI, GX, GH), the submitter of the batch into which for record was created. For source and master data, the user who has created the record in a workflow, stepper, duplicate manager or a user name loaded by the data integration process. |

| DU, GA, GD, GH, GI, GX, MD, MH, MI, MX, SA, SD, UM |

| For golden and master data, the submit timestamp of the batch into which the record was created. For source data (SD, SA, UM), the timestamp at which the source record was created. |

| GX, MX, SA, SD |

| Author of the delete operation. |

| GX, MX, SA, SD |

| Deletion timestamp of a record |

| GX, MX, SA, SD |

| Delete operation ID. |

| GX, MX, SA, SD |

| Cascade path through which the record was reached during a delete. Null for record directly selected for deletion. |

| GX, MX, SA, SD |

| Delete Type ( |

| GD, GH, GX, SA, SD |

| Error Status of a record. This value indicates whether the source or golden record has passed successfully or not validations. Possible values are:

|

| GH, MH |

| Batch at which the history record was created. |

| GD, GH, GX |

| For fuzzy matching and ID matching entities, indicates whether the golden record was created and authored only in the MDM ( |

| GD, GH, GX |

| For fuzzy matching and ID matching entities, this flag ( |

| GD, GH, GI, GX, MD, MH, MI, MX, UM |

| Flag ( |

| GD, GH, GI, GX |

| Flag ( |

| AE, SA, SD, SE, SF, UM |

| Load Identifier used as the unique transaction ID for external application pushing data to the platform |

| GD, GH, GI, GX, UM |

| Number of master records contributing to the golden record. |

| MD, MH, MI, MX |

| ID of the match group for the master record. This column is set when matching takes place. |

| DU |

| Match rule having cause the pair of records to match. |

| DU |

| Score of the matched pair. |

| MI |

| Previous identifier of the match group for the master record. |

| MD, MH, MI, MX | Varies | ID of the previous golden record the master record was attached to. |

| SA, SD, UM |

| Batch identifier of the record when it was originally edited out in a stepper or a duplicate manager. |

| UM |

| Original Confirmation Status in a duplicate management operation. |

| UM | Varies | ID of the original confirmed golden record to which the master record was attached to. |

| UM |

| Confidence Score of the original golden in a duplicate management operation. |

| UM |

| Number of master records in the original golden in a duplicate management operation. |

| UM | Varies | ID of the original golden record to which the master record was attached to. |

| UM |

| Original exclusion group to which the master record belonged to. |

| DU, GD, GH, GI, GX, MD, MH, MI, MX, SD, SE, UM |

| For matching entities, code of the publisher that published the record. |

| DU |

| For duplicate pairs, code of the publisher that published the second record. |

| DU, GD, GH, GI, GX, MD, MH, MI, MX, SD, SE, UM |

| ID of the source record in the source publisher system (Fuzzy Matched entities only). See below for a detailed explanation of the primary key options. |

| DU |

| For duplicate pairs, ID of the second source record in the source publisher system (Fuzzy Matched entities only). |

| GD, GH, GI, GX, MD, MH, MI, MX, UM |

| Confidence Score for the suggested match group. |

| GD, GH, GI, GX, MD, MH, MI, MX, UM |

| ID of the merge suggested by the automated matching. |

| GD, GH, GI, GX, MD, MH, MI, MX, UM |

| Number of master records in the suggested merge. |

| GH, MH |

| Batch at which history record stopped being current or null if the records is still current. |

| DU, GA, GD, GH, GI, GX, MD, MH, MI, MX, SA, SD, UM |

| For golden data (GD, GI, GX, GH), the submitter of the batch into which the record was updated. For source and master data, the latest user who has updated the record in a workflow, stepper, duplicate manager or the user name loaded by the data integration process. |

| DU, GA, GD, GH, GI, GX, MD, MH, MI, MX, SA, SD, UM |

| For golden and master data, the submit timestamp of the batch into which the record was updated. For source data (SD, SA, UM), the timestamp at which the source record was created or updated. |

| MD, MH, MI, MX, UM |

| Exclusion group ID. An exclusion group represents a group of records for which a user has taken split decisions. |

Primary Keys Columns

The primary key to load depends on the Entity Type:

Basic

For basic entities, Semarchy xDM uses a single identifier, stored in a column named after the Physical Column Name specified in the primary key attribute definition. This column will exist in all tables. This identifier is simply propagated into the hub from the source records.

ID Matched

When using ID Matching, Semarchy xDM assumes a common identifier across all systems. In this case, this common identifier is stored in a column named after the Physical Column Name specified in the primary key attribute definition.

This column will exist in all tables. When publishing data into the hub, the middleware loads this column with the primary key from the publishing system. This identifier is simply propagated into the hub, and matching is done using this primary key.

Fuzzy Matched

When using Fuzzy Matching, Semarchy xDM assumes no common identifier across publishers. There may be two different records with the same ID in different systems. There is a need to match the records and consolidate them under a golden record having a primary key generated by the system.

In this case, the source identifier is stored in the column named

B_SOURCEID, along with the publisher code stored in the B_PUBID.

This is the case for the SD, SE and MD tables.

When publishing data into the hub, the middleware loads this

B_SOURCEID column with a primary key value from the publishing system.

If this primary key is a composite key in the source system, all the

columns of this composite key must be concatenated into B_SOURCEID.

When the records are consolidated in a golden record (GD and GE tables), a System Defined Primary Key is generated and stored in a column named after the Physical Column Name specified in the primary key attribute definition. This key is referred to as the Golden Record ID.

The MD table makes the bridge between the Source ID and the Golden ID as it contains both these values.

Reference Columns

When a reference exists in the source publisher and need to be expressed in the landing table, this reference mapping in the table structure depends on the Entity Type of the referenced entity.

Reference to a Basic Entity

For a reference to a Basic entity, the referenced key stored is simply the primary key of the basic entity.

As a consequence, the referenced value is stored in a single column. This column is named after the Physical Name

provided in the reference definition and is prefixed with F_. For example, F_COUNTRIES.

Reference to an ID Matched Entity

For a reference to an ID Matched entity, the referenced key is the

same for all systems. As a consequence, the referenced value is stored

in a single column. This column is named after the Physical Name

provided in the reference definition and is prefixed with F_. For

example, F_EMPLOYEE.

For example: if Customer references Employee and this entity uses ID

Matching, SD_CUSTOMER will contain the following information.

| CUSTOMER_NAME | F_EMPLOYEE |

|---|---|

Gadgetron | 11 |

Roxxon | 56 |

This information means that the customer Gadgetron references the

employee number 11, and Roxxon references employee number 56. This

employee number is the same in all systems.

Reference to a Fuzzy Matched Entity

For a reference to a Fuzzy Matched entity, the referenced may point to a master record from a publisher, or directly to a golden record in the hub:

- For a reference to a master record:

- The referenced value is stored in a column named after the Physical

Name provided in the definition of the reference and prefixed with

FS_(for Foreign Source ID). For example,FS_CUSTOMER. - The referenced publisher is also stored, in a column named after the

Physical Name provided in the definition of the reference and prefixed

with

FP_(for Foreign Publisher). For example,FP_CUSTOMER. For a reference to a golden record:

- The referenced value is stored in a column named after the Physical

Name provided in the definition of the reference and prefixed with

- The reference value is stored in a column named after the Physical

Name provided in the definition of the reference and prefixed with

F_(for Foreign ID).

Contact references Customer and this entity uses

Fuzzy Matching, SD_CONTACT contains the following information.

| FIRST_NAME | LAST_NAME | FP_CUSTOMER | FS_CUSTOMER |

|---|---|---|---|

John | Doe | CRM | 1235 |

Jane | Smith | MKT | A3251 |

This information means that the contact John Doe references the

customer with the primary key 1235 in the CRM publisher, and

that Jane Smith references the customer with the primary key

A3251 in the MKT publisher.

Contact references Customer and this entity uses

Fuzzy Matching, SD_CONTACT contains the following information.

| FIRST_NAME | LAST_NAME | F_CUSTOMER |

|---|---|---|

John | Doe | 6598 |

Jane | Smith | 6556 |

This information means that the contact John Doe references the

golden customer record with the golden ID 6598, and

that Jane Smith references the golden customer with the golden ID

6556.

FS_, FP_ and F_ columns.Class Name

Several entities involved in an inheritance relation have their data

stored in the same set of tables. These tables store the superset of the

attributes of the parent and all its child entities. The B_CLASSNAME

column is used to identify the class (entity) of a record in a table.

For example, when Person and Company inherit from the Party

entity, the resulting table is named after the parent entity

(Party), and will contain all the attributes of Person and

Company as well. In the GD_PARTY table for example, records

representing persons will have B_CLASSNAME='Person'. When publishing

person or company information in the SD_PARTY table, the middleware

must set B_CLASSNAME='Person' or B_CLASSNAME='Company' accordingly.

Constraints

The only constraints physically enforced in the tables are the primary keys and the not null columns. These constraints apply to system columns only.

For example, on an SD_ table, the following constraints are enforced:

- primary key on

B_LOADID,B_PUBID,B_SOURCEID(or the primary key column for ID Matched entities) B_LOADID,B_CLASSNAME,B_PUBIDandB_SOURCEID(or the primary key column for ID Matched entities) are not null

Other constraints defined in the model (mandatory attributes, references, etc.) are not enforced in the physical model but checked during the validation phase.

Publishing Data Using SQL

Publishing data is done using function calls to initialize/submit the external load and DML statements to load the data into the landing (SD for matching entities, SA for basic entities) tables.

Overview

In this approach, external loads are handled using the and SQL interface. It works as follows:

- The external load is initialized using a function call to the

GET_NEW_LOADIDfunction on the repository schema. This function call returns a Load ID. - SD (SA for Basic Entities) tables are loaded using SQL inserts issued on the data location schema.

- The external load is submitted or cancelled using function calls on the repository schema:

SUBMIT_LOADto submit a load identified by its Load ID. This function call returns a Batch ID.CANCEL_LOADto cancel a load identified by its Load ID.

Alternately, you can use Continuous Loads to load data into the SD tables with the Load ID of the Continuous Load, without having to explicitly initialize and submit individual external loads.

Initializing a Load

Initializing an external load uses the following parameters:

data_location_name: name of the data location. This data location is the one hosting the MDM hub into which the external load is performed.program_name: This variable is for information only. It is used to identify the middleware performing the external load. For example 'ETL Custom Script'.load_description: This variable is for information only. It is used to describe the nature of this load. For example: 'Daily updates for Customers and Contacts'.user_name: name of the user initializing the external load. This user may or may not be a user defined in the security realm of the application server.

This function call to GET_NEW_LOADID initializes an external load and returns a Load ID. It is performed on the repository database schema.

INTEGRATION_LOAD package. You must prefix the function name with the name of this package.vLoad_id := <repository_schema>.INTEGRATION_LOAD.GET_NEW_LOADID(

'<data_location_name>' /* Data Location Name (as in the UI) */

'<program_name>' /* Informational. Identifies the Middleware*/

'<load_description>' /* Informational. Describes the load. */

'<user_name>' /* User initializing the load. */

);vLoad_id := <repository_schema>.get_new_loadid(

'<data_location_name>' /* Data Location Name (as in the UI) */

'<program_name>' /* Informational. Identifies the Middleware*/

'<load_description>' /* Informational. Describes the load. */

'<user_name>' /* User initializing the load. */

);EXEC <repository_database>.dbo.GET_NEW_LOADID

@LOAD_ID OUTPUT,

@P_DATA_LOCATION_NAME = '<data_location_name>', /* Data Location Name (as in the UI) */

@P_PROGRAM_NAME = '<program_name>', /* Informational. Identifies the Middleware */

@P_LOAD_DESCRIPTION = N'<load_description>',/* Informational. Describes the load. */

/* Note the N to allow accented characters */

@P_USERNAME = '<user_name>' /* User initializing the load. */The following example performs a function call initializing an external load on the CustomerMDMHub data location. The repository schema is REPO. The returned Load ID is stored in the vLoad_id variable.

vLoad_id := REPO.INTEGRATION_LOAD.GET_NEW_LOADID(

'CustomerMDMHub',

'Custom ETL',

'Initial Load for the Hub',

'John Doe' );Loading Data

Loading data consists in inserting new source records in the SD (and SA for Basic Entities) tables for the set of entities taken into account in the integration job.

When loading data in the SD or SA tables:

- You use SQL insert commands or your regular ETL/Data Integration Platform.

- The insert commands are issued on the SD or SA tables stored in the data location schema.

Tables to Load

Make sure to load all the SD and SA tables for the entities that will be taken into account in the integration job. For example, if the integration job processes the Customer and Contact entities, then you should load the

SD_CUSTOMER and SD_CONTACT tables.

If you insert data into the SD table of an entity that is not taken into account by the integration job, this data will be ignored. For example, if the integration job processes the Customer and Contact entities, data loaded the SD_EMPLOYEE table will not be taken into account by the integration job to certify golden records for the Employee entity.

Referential Integrity and Load Order

There is no required order to load the SD and SA tables, as no foreign keys

are implemented on these tables.

Reference validation is performed by the integration job, as a

consequence references between entities must be loaded as indicated in

the Columns to Load section.

Using Transactions

It is recommended to use a database transaction when writing to the SD and SA tables.

- For external loads: Canceling an external load cancels the load but does not delete records from the tables. Writing in an auto-commit transaction in the tables then canceling the load leaves useless information in the tables.

Using a transaction gives you the capability to rollback all changes on the tables when canceling a load. - For continuous loads, it is strongly recommended to use a transaction and commit only when all tables are loaded: as the continuous load consumes data committed in the table on its own schedule, loading tables without a transaction may cause the continuous load to start processing a data batch before it is completed.

Columns to Load

This section provides some guidance for loading the columns of the SD and SA tables.

System Columns (Mandatory)

The following system columns must be loaded as indicated:

B_LOADID: Load this column with the Load ID provided byGET_NEW_LOADIDfunction call, of with the ID of the Continuous Load. Note that you can use theGET_CONTINUOUS_LOADIDfunction to retrieve a continuous load ID from the continuous load name.B_CLASSNAME: Name of the entity (or class) being loaded. When inheritance is used, the same table stores data for all parent and child classes. Set explicitlyB_CLASSNAMEto the name of the entity for which data is being published. For example:Person,Party,Company. See the Class Name section for more details.B_PUBID: Load this column with a Publisher Code for ID Matched and Fuzzy Matched entities. For example:CRM,MKT, etc. This publisher code identifies the publisher (application that publishes the data) and should be declared in the model edition. The list of publisher codes is available by double-clicking the Publishers node in the Model Design view in the Model Design perspective. If the publisher code is unknown to the model, data from this publisher is processed, but this publisher will have the lowest ranking in a Preferred Publisher consolidation strategy.

B_PUBID column.B_PUBID with the publisher code as defined in the model. Publisher codes may contain uppercase letters, digits and underscores.B_PUBID column to identify each publisherPrimary Keys (Mandatory)

For matching entities, the primary key that you load into the SD table allows identifying the

source record from the publisher (identified by Publisher Code B_PUDIB). This primary key will allow taking into account the creation or update of a source record and report through the consolidation process the corresponding data changes to the golden record.

For basic entities, the primary key that you provide in the SA table is the one of the golden record and will allow updating this record.

The primary key column to load depends on the Entity Type of the entity.

Basic Entity

If the entity is basic, then this ID must be loaded in the column representing the attribute defined as the primary key attribute for the entity.

ID Matched Entity

If the entity uses ID Matching, then this ID must be loaded in the column representing the attribute defined as the primary key attribute for the entity.

Fuzzy Matched Entity

If the entity uses Fuzzy Matching, then you must load into the

B_SOURCEID column the value of the primary key from the source system.

If this primary key is a composite key, then you must concatenate the

values of the composite primary key and load them in the B_SOURCEID

column.

B_SOURCEID column is a VARCHAR(128) column. Make sure

to perform the appropriate conversions for loading this column.References (Mandatory)

When loading data for entities that are related by a reference relationship, you must load the referencing entity with the value of the referenced primary key. The columns to load differ depending on the Entity Type of the referenced entity.

Reference to a Basic Entity

If the referenced entity is an Basic entity, then you need to load

the column representing the referencing attribute. This column is

F_<Physical Name of the Reference To Role Name>.

Reference to an ID Matched Entity

If the referenced entity is an ID Matched entity, then you need to load

the column representing the referencing attribute. This column is

F_<Physical Name of the Reference To Role Name>.

For example, if Customer references Employee and this entity uses ID

Matching, then you must load into SD_CUSTOMER the F_EMPLOYEE column

with the primary key of the source employee record referenced by each

customer record.

Reference to a Fuzzy Matched Entity

If the referenced entity is a Fuzzy Matched entity, then you need to load two columns:

FS_<Physical Name of the Referenced To Role Name>: Load this column with the Source ID of the referenced record.FP_<Physical Name of the Referenced To Role Name>: Code of the publisher of the referenced record.

FP_ column with a publisher code and leave FS_

to a null value, and vice versa.For example, if Contact references Customer and this entity use

Fuzzy Matching, you must load the following columns into the SD_CONTACT table:

FP_CUSTOMER: Code of the publisher providing the customer referenced by the given contact, e.g.,MKT.FS_CUSTOMER: Source ID of the customer referenced by the given contact, e.g.,81239.

Attribute Columns

You should load the attribute columns relevant for the entity you are loading.

Make sure to load:

- The attribute columns that make sense for the entity class (

B_CLASSNAME) you are loading. - The mandatory attribute columns. If not, pre-consolidation validation may reject source records with null values.

- The columns for attributes using a list of values type. If these are loaded with values out of the LOV range of values, pre-consolidation validation may reject source records.

Loading Date and Timestamp Columns

Date attributes convert to Date columns in the database. The behavior of these date columns differ depending on the database:

- Dates in PostgreSQL and SQL Server only contain no time or timestamp portion and do not require specific transformation.

- Oracle’s Dates include a time portion. This portion is automatically truncated by the certification job using the

TRUNC(date)function. Integration specialists should be aware of this automated transformation when loading a date and may consider performing this truncation in their integration flows.

Timestamp attributes convert to Timestamp columns that contain a timezone portion. Semarchy xDM stores and expects in the database timestamps in the timezone of the application server hosting the Semarchy xDM application. When loading timestamps, integration specialists should take into account the timezone differences and possible timezone conversion between their integration component and the data location’s database server.

Other Columns (Optional)

The following columns do not need to be loaded or can be optionally loaded:

B_ORIGINALBATCHID: This column is not used for external loads and should not be loaded.B_CREATOR,B_UPDATOR: These columns can be optionally loaded to store the users who have created or updated the records in the source systems. If left null, these columns are automatically set to the name of the user who submits the batch.B_CREDATE,B_UPDDATE: These columns can be optionally loaded to store the creation and update date (timestamp) of the records in the source systems. If left null, these columns are set to the submit date (timestamp) of the batch. Note that values loaded here are not propagated beyond the SD table.

Submitting a Load

Submitting an external load uses the following parameters:

load_id: Load ID returned by the load initialization.integration_job: Name of the integration job to process this load.user_name: name of the user who has initialized the external load. This user may or may not be a user defined in the security realm of the application server.

The SUBMIT_LOAD function call submits an external load identified by its Load ID and returns a Batch ID. It is performed on the repository database schema.

vBatch_id := <repository_schema>.INTEGRATION_LOAD.SUBMIT_LOAD(

<load_id> /* Load ID returned by INTEGRATION_LOAD.GET_NEW_LOADID */

'<integration_job>' /* Name of the Integration Job to trigger. */

'<user_name>' /* User who has initialized the load. */

);vBatch_id := <repository_schema>.submit_load(

<load_id> /* Load ID returned by get_new_loadid */

'<integration_job>' /* Name of the Integration Job to trigger. */

'<user_name>' /* User who has initialized the load. */

);EXEC <repository_database>.dbo.SUBMIT_LOAD

@BATCH_ID OUTPUT,

@P_LOAD_ID = @LOAD_ID,

@P_INTEGRATION_JOB_NAME = '<integration_job>',/* Name of the Integration Job to trigger. */

@P_USERNAME '<user_name>' /* User who has initialized the load. */The following example performs a function call to submit an external load identified by the Load ID 22. It submits it with the job name INTEGRATE_DATA. The repository schema is REPO. The returned Batch ID is stored in the vBatch_id variable.

vBatch_id := REPO.INTEGRATION_LOAD.SUBMIT_LOAD(

22,

'INTEGRATE_DATA',

'John Doe' );Canceling a Load

Canceling a load is performed using the CANCEL_LOAD function with the following parameters:

load_id: Load ID returned by the load initialization.user_name: name of the user who has initialized the external load. This user may or may not be a user defined in the security realm of the application server.

The CANCEL_LOAD procedure cancels an external load identified by its Load ID. It is performed on the repository database schema.

SD_% tables loaded during the external load. This must be taken care of separately.<repository_schema>.INTEGRATION_LOAD.CANCEL_LOAD(

<load_id> /* Load ID returned by INTEGRATION_LOAD.GET_NEW_LOADID */

'<user_name>' /* User who has initialized the load. */

);<repository_schema>.cancel_load(

<load_id> /* Load ID returned by get_new_loadid */

'<user_name>' /* User who has initialized the load. */

);EXEC <repository_database>.dbo.CANCEL_LOAD(

@P_LOAD_ID = @LOAD_ID,

@P_USERNAME = '<user_name>' /* User who has initialized the load. */

);INTEGRATION_LOAD.CANCEL_LOAD to cancel an external load identified by the Load ID 22. (Oracle)REPO.INTEGRATION_LOAD.CANCEL_LOAD(

22,

'John Doe' );Publishing Record Deletions

Semarchy xDM supports records deletion using SQL.

Publishing Golden Records Deletion

Golden record deletion applies to all entities and is similar to deleting a golden record from an application.

To publish a golden record deletion, you must load the following columns into the SA table (even for ID and fuzzy matched entities):

B_LOADID: Load this column with the Load ID provided by theGET_NEW_LOADIDfunction call, or with the ID of the Continuous Load. Use theGET_CONTINUOUS_LOADIDfunction to retrieve a continuous load ID from the continuous load name.B_DELETETYPE: Load this column with theSOFT_DELETEorHARD_DELETEvalue depending on the type of delete that you want to trigger.B_DELETEOPERATION: Delete operation ID. This ID must be a unique value for each deleted record. For example, use a UUID that you convert to a string (e.g.: Using the functionsRAWTOHEX(SYS_GUID())).B_CLASSNAME: Load this column with the name of the entity of the records to delete.<GoldenID>: Load this column with the ID of the golden record to delete.

You can optionally load the B_DELETEAUTHOR and B_DELETEDATE columns to provide an author and a date for the delete operation.

Publishing Master Records Deletion

For matching entities, you can publish master records deletion.

To publish such deletion, use the process described for deleting golden records, with the following differences:

- Load the SD table instead of the SA table.

- Instead of the

<GoldenID>column, load theB_PUBIDcolumn, plus:- the

B_SOURCEIDcolumn for a fuzzy matched entity. - the column representing the attribute defined as the primary key attribute for an ID matched entity.

- the

Load the B_LOADID, B_DELETETYPE, B_DELETEOPERATION, B_CLASSNAME, B_DELETEAUTHOR and B_DELETEDATE columns as explained in Publishing Golden Records Deletion.

When deleting one of the master records contributing to a golden record, the values consolidated in the golden record are updated to take into account this master record deletion.

Managing Duplicates Using SQL

It is possible to manage duplicates using SQL, and perform programmatically actions typically available to users in applications' duplicate managers. The following sections provide the methods to perform various duplicates management operations using SQL with the UM table.

All these methods use a Load ID (in a vLoadID variable), which is initialized and submitted using a process similar to the one used when Publishing Data Using SQL.

Confirming Matches

This method automatically confirms programmatically certain matches. It reproduces the behavior of the user interface.

Columns to load into the UM table:

B_LOADID: Load this column with the Load ID provided by theGET_NEW_LOADIDfunction call, or with the ID of the Continuous Load. Use theGET_CONTINUOUS_LOADIDfunction to retrieve a continuous load ID from the continuous load name.B_SOURCEID,B_PUBID: Load these columns with the ID and the publisher of the master records to confirm.B_CLASSNAME: Load this column with the name of the entity of these records.<GoldenID>: Load this column with the ID of the golden record to which the master record is attached to.B_CONFIRMEDSDPK: Load this column with the same value as <GoldenID>.

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME,

CUSTOMER_ID,

B_CONFIRMEDSDPK

)

SELECT

vLoad_id,

B_SOURCEID,

B_PUBID,

'Customer',

CUSTOMER_ID,

CUSTOMER_ID

FROM MD_CUSTOMER

WHERE

B_CONFIRMATIONSTATUS = 'NOT_CONFIRMED';Forcing a New Record to Join a Golden Record

When loading a source record, use this method to load at the same time a user decision forcing this record to:

- Either join a golden record with a known ID,

- Or create a new golden record with this ID.

To perform such an operation, combine data loading with the previous technique.

/* Load source record */

INSERT into SD_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME,

-- Data fields are below

CUSTOMER_NAME,

...

) values (

vLoad_id,

123456,

'CRM',

'Customer',

-- Values are below

'GADGETRON',

...

)

/* Force a merge of this source record with a known golden ID */

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME,

CUSTOMER_ID,

B_CONFIRMEDSDPK

)

SELECT

vLoad_id,

123456,

'CRM',

'Customer',

2, -- Target Golden ID

2 -- Confirm the golden ID

FROM MD_CUSTOMER;

COMMIT;Moving Master and Golden Records to Golden Records

This method automatically moves master records to golden records. It reproduces the behavior of the user interface for moving masters into golden records, or for merging golden records into golden records

Columns to load into the UM table:

B_LOADID: Load this column with the Load ID provided by theGET_NEW_LOADIDfunction call, of with the ID of the Continuous Load.B_SOURCEID,B_PUBID: Load these columns with the ID and the publisher of the master records to move.B_CLASSNAME: Load this column with the name of the entity of these records.<GoldenID>: Load this column with the ID of the golden record to which the master record should be moved to.B_CONFIRMEDSDPK: Set this column to the<GoldenID>value if you want to flag the match as confirmedB_XGRP: Load this column with a UUID value, representing the exclusion group. This UUID value should be same for all the records that belong to the same exclusion group, that is records that previously matched and that should no longer match. The value to load in this column depends on the exclusion groups of the master and golden records:- If neither the master record being moved nor the target golden record is part of an exclusion group, this value should be null.

- If only the target golden record has an exclusion group, this value should be the UUID of this exclusion group.

- If only the master record being moved has an exclusion group, this value should be the UUID of this exclusion group.

- If the master and golden have different exclusion groups, then both these exclusion groups need to be merged in a new exclusion group. This value should this new UUID, generated for example using the SYS_GUID() Oracle function. In addition, all the records from the two original exclusion groups should be loaded into the UM table with this new exclusion group.

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME,

CUSTOMER_ID,

B_CONFIRMEDSDPK,

B_XGRP

)

SELECT

vLoad_id,

B_SOURCEID,

B_PUBID,

'Customer',

2, -- Target golden ID

2, -- Confirm the golden ID

NULL -- Neither master nor golden are in an exclusion group

FROM MD_CUSTOMER

WHERE

CUSTOMER_ID = 1;Similarly, to split a golden record by moving its master records to new golden records, you do not provide an existing golden ID but seed a new golden ID using the golden ID generation method for the entity.

-- Store a new SYS_GUID value in a raw (16) variable which is used to create a new exclusion group.

select SYS_GUID() into vSysguidXGrp from dual;

-- Split the master records using this new exclusion group.

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME,

CUSTOMER_ID,

B_CONFIRMEDSDPK,

B_XGRP

)

SELECT

vLoad_id,

B_SOURCEID,

B_PUBID,

'Customer',

SEQ_CUSTOMER.NEXTVAL, -- New golden ID generated by a sequence.

SEQ_CUSTOMER.CURRVAL, -- The same golden ID is used to confirm.

vSysguidXGrp -- New exclusion group ID.

FROM MD_CUSTOMER

WHERE

CUSTOMER_ID = 5;Reset User Decisions

This methods gets rid of user decisions previously taken with duplicate managers, forcing the master records to merge according to the matcher’s decisions.

Columns to load into the UM table:

B_LOADID: Load this column with the Load ID provided by theGET_NEW_LOADIDfunction call, of with the ID of the Continuous Load.B_SOURCEID,B_PUBID: Load these columns with the ID and the publisher of the master records to reconsider for the matching.B_CLASSNAME: Load this column with the name of the entity of these records.

- This operation is not possible in the MDM user interface.

- All user decisions are lost. Exclusion groups and match groups are recomputed for these records.

- All golden IDs are regenerated in the process.

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME

)

SELECT

vLoad_id,

B_SOURCEID,

B_PUBID,

'Customer'

FROM MD_CUSTOMER;Replaying the Matching

The previous method does not force the match rules to run again. It only removes user decisions. To force the entire matching process to replay, you must reset of the user decisions, as described in the previous method, and reload the data to re-match into the SD table.

-- Remove User Decisions

INSERT INTO UM_CUSTOMER (

B_LOADID,

B_SOURCEID,

B_PUBID,

B_CLASSNAME

)

SELECT

vLoad_id,

B_SOURCEID,

B_PUBID,

'Customer'

FROM MI_CUSTOMER

-- add below a clause to filter the records to re-process.

-- WHERE MI_CUSTOMER.CUSTOMER_ID = ??

;

-- Re-load the data from the latest master values.

INSERT into SD_CUSTOMER (

B_LOADID,

B_PUBID,

B_SOURCEID,

B_CLASSNAME,

-- Data fields are below

CUSTOMER_NAME,

TOTAL_REVENUE

...

)

SELECT

vLoad_id,

B_PUBID,

B_SOURCEID

-- Data fields are below

CUSTOMER_NAME,

TOTAL_REVENUE

...

FROM MI_CUSTOMER

-- add below a clause to filter the records to re-process.

-- WHERE MI_CUSTOMER.CUSTOMER_ID = ??

;

COMMIT;Consuming Data Using SQL

Consuming Data from Semarchy xDM is done in SQL using the tables of the hubs. This chapter covers this type of consumption.

Overview

Consuming the data mainly involves:

- The Golden Data. Contains the enriched, consolidated, validated and certified golden records

- The Master Data. Contains the master records linked to the golden records. Master records contain the references to the source records.

Using the golden data in conjunction with the master data allows cross referencing to source data and re-integrating golden data into source systems.

Consuming Golden Data

Golden Data Table Structure

The complete list of system columns available is provided in the Table Structures section of this guide. The following table lists the system columns used when consuming information from the hub:

| Column Name | Datatype | Description |

|---|---|---|

|

| ID of the batch that created this record. |

|

| Class name of this record. |

|

| Submit date (timestamp) of the batch into which the record was created. |

|

| Submit date (timestamp) of the batch into which the record was updated. |

|

| Submitter of the batch into which the record was created. |

|

| Submitter of the batch into which the record was updated. |

B_CREDATE,B_UPDDATE: These timestamps do not necessarily correspond to the moment when the records were created or updated in a source system or in the hub using a stepper, duplicate manager, etc. They give the moment when the record was submitted to the hub. Note that a record, even unmodified, it considered updated as soon as it is submitted to the hub.B_CREDATOR,B_UPDATOR: For records created or modified using direct authoring or duplicate managers, this corresponds to the user who has performed the changes. For records created or modified in workflows, this corresponds to the user who has submitted the workflow.

Accessing Golden Data Using SQL

To access golden data using SQL, you query on the GD table. The queries are filtered to access data for one or more entity classes,

The following code sample gives a query to access golden records in the GD table.

select G.* (1)

from GD_<Physical_Table_Name> G (2)

where G.B_CLASSNAME in ( <classname_list> ) (3)Explanation of the query:

| 1 | We select all columns from the golden record. You can select specific columns from the GD record. |

| 2 | The GD table accessed is named after the entity. Replace <Physical Table Name> with the physical table name defined for the entity. |

| 3 | Provide the list of entity classes stored in this table you want to access. For example, Person,Company. Note that if you are no using inheritance, only one class is stored in the table and this clause is not required. |

CONTACT_ID, FIRST_NAME and LAST_NAME golden data for the Contact entity.select CONTACT_ID, FIRST_NAME, LAST_NAME

from GD_CONTACT G

where G.B_CLASSNAME = 'Contact'Golden Data Primary Key

The primary key for a golden data table depends on the Entity Type and the ID Generation for the entity. A column named after the Physical Column Name of the primary key attribute stores the golden record primary key for the GD table and has the following datatype:

- For ID Generation - Sequence:

NUMBER(38,0) - For ID Generation - UUID_:

RAW - For ID Generation - Manual, the datatype is the one defined for the primary key attribute.

Consuming Master Data

Accessing master data from fuzzy and ID matched entities uses queries similar to those used to access the

golden data.

Starting from the golden data, we can refer to the master data using the

golden record primary key. The master data table (MD) includes a column

that references this primary key. As the master data also stores the

primary key of the publisher, it is possible to refer back to the source

data from the golden data via the master data.

Depending on the Entity Type (ID Matched, Fuzzy Matched), access to the master data differs:

Accessing Master Data Using SQL (ID Matched Entity)

With ID Matched Entity, the master data table has a structure similar to the

golden data table, and contains in addition the publisher code. The same

primary key is stored in the golden and master data in a column named

after the physical column name of the primary key attribute

(<primary_key_column> in the sample below)

select M.B_SOURCEID, G.*

from MD_<Physical_Table_Name> M

inner join GD_<Physical_Table_Name> G on (

G.<primary_key_column> = M.<primary_key_column>

and G.B_CLASSNAME in ( <classname_list> )

)

where

M.B_CLASSNAME in ( <classname_list> )

and M.B_PUBID = '<publisher_code>'

and M.<primary_key_column> = '<searched_source_id>'In this code, access is filtered with the class name for both the golden data and the master data (lines #5 and #8). The two tables are joined on their common primary key (line #4). In addition, the master data is filtered by source publisher (line #9) and ID of the source record (line #10).

EMPLOYEE_NUMBER=100 in the HR system (ID Matched Entity).select M.EMPLOYEE_NUMBER, G.FIRST_NAME, G.LAST_NAME

from MD_EMPLOYEE M

inner join GD_EMPLOYEE G on (

G.EMPLOYEE_NUMBER = M.EMPLOYEE_NUMBER

and G.B_CLASSNAME = 'Employee'

)

where

M.B_CLASSNAME = 'Employee'

and M.B_PUBID = 'HR'

and M.EMPLOYEE_NUMBER = '100'The resulting information can be used to update the source record with golden data.

Accessing Master Data Using SQL (Fuzzy Matched Entity)

With Fuzzy Matched Entity, the master data table has a structure similar to the golden data table. It contains a reference to the golden data primary key, but the master data primary key consists of two columns:

B_PUBID(VARCHAR2(30 CHAR)) contains the code of the publisher that published this record.B_SOURCEID(VARCHAR2(128 CHAR)) contains the ID of the source record in that publisher

As a consequence, the link between golden and master records is done

using the primary key column as in an ID Matched Entity, but the link to the

source is done using the B_SOURCEID column.

select M.B_SOURCEID, G.*

from MD_<entity> M

inner join GD_<entity> G on (

G. <primary_key_column> = M. <primary_key_column>

and G.B_CLASSNAME in ( <classname_list> )

)

where

M.B_CLASSNAME in ( <classname_list> )

and M.B_PUBID = '<publisher_code>'

and M.B_SOURCEID = '<searched_source_id>'In this code, the golden and master data tables are joined on their

golden record primary key (line #4), but the master data is restricted

by source publisher (line #9) and ID of the source record (line #10),

using the B_SOURCEID column.

27030 in the CRM system (Fuzzy Matched Entity).select M.B_SOURCEID, G.FIRST_NAME, G.LAST_NAME

from MD_CONTACT M

inner join GD_CONTACT G on (

G.CONTACT_ID = M.CONTACT_ID

and G.B_CLASSNAME = 'Contact'

)

where

M.B_CLASSNAME = 'Contact'

and M.B_PUBID = 'CRM'

and M.B_SOURCEID = '27030'Example: Select side by side the duplicates detected for a given source

Contact record with the ID 27030 in the CRM system (Fuzzy

Matched Entity). In this example, the master data table is used twice (aliased

as M and MM) to retrieve the two sides of a duplicate pair.

select

M.B_PUBID DUP1_PUBLISHER, M.B_SOURCEID DUP1_ID, M.FIRST_NAME DUP1_FIRST_NAME, M.LAST_NAME DUP1_LAST_NAME,

MM.B_PUBID DUP2_PUBLISHER, MM.B_SOURCEID DUP2_ID, MM.FIRST_NAME DUP2_FIRST_NAME, MM.LAST_NAME DUP2_LAST_NAME,

G.CONTACT_ID GOLD_ID, G.FIRST_NAME GOLD_FIST_NAME, G.LAST_NAME GOLD_LAST_NAME

from MD_CONTACT M

inner join GD_CONTACT G on

(

G.CONTACT_ID = M.CONTACT_ID

and G.B_CLASSNAME = 'Contact'

)

inner join MD_CONTACT MM on

(

MM.CONTACT_ID = M.CONTACT_ID

and MM.B_CLASSNAME = 'Contact'

)

where

M.B_CLASSNAME = 'Contact'

and M.B_PUBID = 'CRM' /* Publisher ID */

and M.B_SOURCEID = '27030' /* Source ID */

/* and M.B_PUBID = MM.B_PUBID */

/* Uncomment the previous line to restrict the duplicates

to those within the CRM application */Consuming Errors

Accessing Pre-Consolidation Errors Using SQL

Pre-consolidation errors can be accessed via the Source Errors (SE, or AE for basic entities)

tables.

These tables store the error information, that is the

information about the constraints that caused the records to fail the

validation. The latter is stored in the B_CONSTRAINTNAME (name of the

constraint) and B_CONSTRAINTTYPE (type of the constraint) columns.

SE tables do not store the erroneous data itself, but they contain the identifiers to the source (SD or SA for basic entities) records in error.

Note that the B_ERROR_STATUS column on the SD table provides a simple way to detect the records with errors.

Example: Select the source errors for the Contact entity.

In this example, incorrect foreign references would appear. To identify

them, we retrieve the incorrect referenced IDs from FP_CUSTOMER and

FS_CUSTOMER.

select * from SD_CONTACT where B_ERROR_STATUS = 'ERROR';select SE.B_BATCHID, SD.B_LOADID,

SE.B_CONSTRAINTNAME, SE.B_CONSTRAINTTYPE,

SE.B_PUBID, SE.B_SOURCEID,

SD.FIRST_NAME, SD.LAST_NAME,

SD.FP_CUSTOMER, SD.FS_CUSTOMER

from SE_CONTACT SE, SD_CONTACT SD

where

SE.B_CLASSNAME = 'Contact'

and SD.B_CLASSNAME = 'Contact'

and SD.B_PUBID = SE.B_PUBID

and SD.B_SOURCEID = SE.B_SOURCEID

and SD.B_LOADID = SE.B_LOADID

and SD.B_ERROR_STATUS = 'ERROR';Accessing Post-Consolidation Errors Using SQL

Post-consolidation errors can also be accessed via the Golden Errors (GE) tables.

These tables store the error information, that is the

information about the constraints that caused the records to fail the

validation. The latter is stored in the B_CONSTRAINTNAME (name of the

constraint) and B_CONSTRAINTTYPE (type of the constraint) columns.

GE tables do not store the erroneous data itself, but they contain the identifier to the golden (GD) records in error.

Note that the B_ERROR_STATUS column on the GD table provides a simple way to detect the records with errors.

Example: Select the errors and data for the golden contacts in error.

select * from GD_CONTACT where B_ERROR_STATUS = 'ERROR';select GE.B_BATCHID,

GE.B_CONSTRAINTNAME, GE.B_CONSTRAINTTYPE,

GE.CONTACT_ID,

GD.FIRST_NAME, GD.LAST_NAME, GD.F_CUSTOMER

from GE_CONTACT GE, GD_CONTACT GD

where

GE.B_CLASSNAME = 'Contact'

and GD.B_CLASSNAME = 'Contact'

and GD.CONTACT_ID = GE.CONTACT_ID

and GD.B_BATCHID = GE.B_BATCHID

and GD.B_ERROR_STATUS = 'ERROR';REST API Overview

The REST API provides programmatic access to read data in Semarchy xDM.

Overview

The REST API is available at the following base URL (referred to as [base_url] in this section):

http://<host>:<port>/{wars-base-name}/api/rest/Each operation in the REST API is available with a parameterized URL under this base URL. The URL may also take additional parameters.

$baseExprs is a valid parameter, but $baseexprs or $BASEXPRS are invalid and will be ignored.REST API Documentation

The REST API exposes its built-in documentation as a link in the Welcome page. In addition, this documentation is available for tools as an OpenAPI specification.

To Access the REST API Documentation:

- Log in to Semarchy xDM as a user with one of the Application Design, Application Management and Platform Administration platform privileges.

- Click the REST API link on the Welcome page.

The documentation exposes the endpoint description and provides request and response samples.

The main operations available in the REST API are also described in the following sections.

Authentication & Security

This section describes the authentication mechanisms for the REST API and provides simple recommendations to secure the use of this API.

Authentication & Privileges

Applications accessing Semarchy xDM using the REST API must be authenticated.

The REST API supports two authentication methods:

- Basic authentication, where you provide an

AuthorizationHTTP header containingusername:passwordencoded in base64. With this authentication method, REST API calls use the identity, roles, and privileges of the user specified with the username. - API Key authentication, where you provide an

API-KeyHTTP header containing an API key created in Semarchy Configuration. With this authentication method, REST API calls use the identity (Default User Name) and roles defined for the API Key.

Operations in the REST API need privileges similar to those required to perform the same operations via a user interface: