| This is documentation for Semarchy xDI 2023.3, which is no longer supported. For more information, see our Global Support and Maintenance Policy. |

Getting Started with Snowflake

This getting started gives some clues to start working with Snowflake

Connect to your Data

The database structure can be entirely reversed in metadata and then used in mappings and processes to design and adapt the business rules to meet the user’s requirements.

You can refer to Connect to your Data page which explains the procedure.

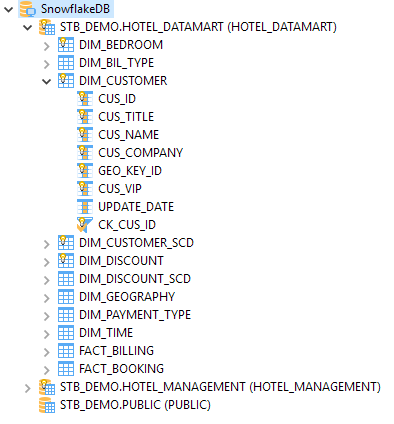

Below is an example of reversed Snowflake metadata.

Temporary Storage

Overview

To optimize data loading into Snowflake, Semarchy xDI uses a temporary storage location to store temporary files before loading them into Snowflake.

When necessary, source data is first extracted to temporary files which are sent into this temporary storage location, before being loaded into Snowflake using the dedicated Snowflake loaders.

Currently, Semarchy xDI supports the following storage locations:

-

Internal Snowflake Stage

-

External Storage

-

Microsoft Azure Storage

-

Amazon S3

-

Google Cloud

-

Storage definition

The storage information is defined in the Snowflake metadata.

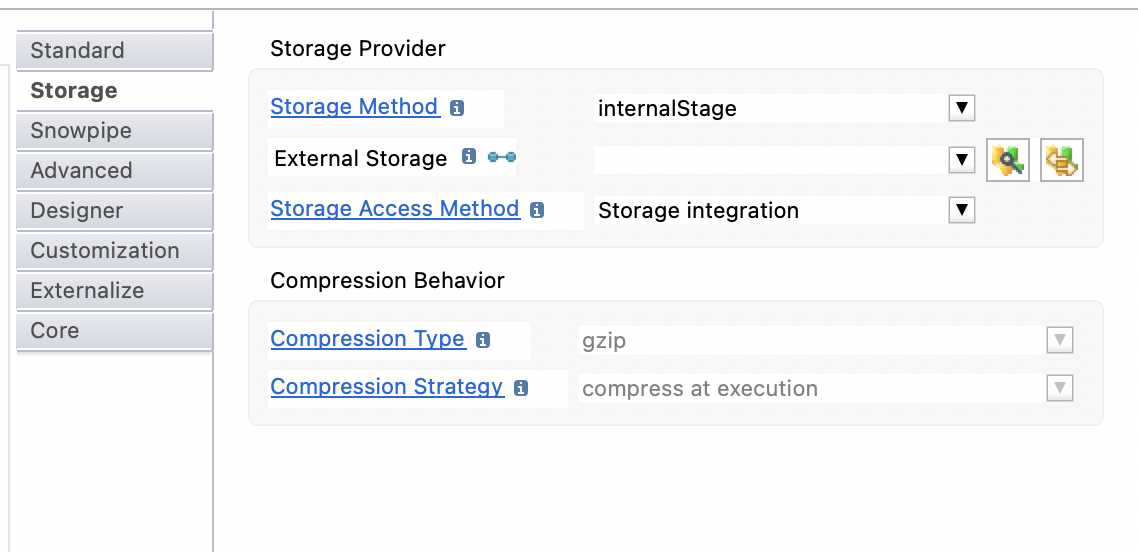

On the Snowflake metadata server node:

-

Select the Storage tab.

-

Define the Storage Method property.

-

Define the External Storage property if you are using external storage.

-

(Optional) Change the Compression Behavior.

The following properties are available:

| Property | Description | ||

|---|---|---|---|

Storage Method |

Storage method used as default in mappings loading data into Snowflake.

|

||

External Storage |

Metadata link of the external storage container to be used when using external storage method. Choose in the list the external storage, or drag and drop it inside the property.

|

||

Storage Access Method |

Defines the method that should be used to access the storage. Possible options are:

When this parameter is empty, it uses the value of the parent. On the server node, as there is no parent, it uses the Credentials mode as default, for compatibility purposes. |

||

Storage Integration |

Only available when Storage Access Method is set to Storage Integration. Free text field that allows defining the name of a snowflake integration storage. |

||

Compression Type |

Defines the compression type used when loading files (source files or temporarily generated files) into this database. |

||

Compression Strategy |

Defines the compression behavior when loading files (source files or temporarily generated files) into this database.

|

|

Those attributes can be overridden per schema and per table: for this, go on the desired schema or table node, then go inside the 'Storage' tab where you’ll find the same attributes, which will override the value set on the parent nodes. |

| 'External Storage' is not linked to the 'Storage Method'. You can decide to use 'internalStage' storage method but provide an External Storage link for specific cases where you’ll override the default storage method. |

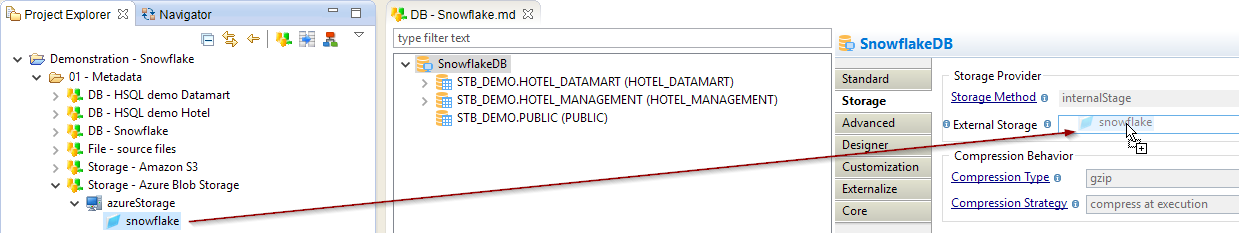

Definition of an external storage

As indicated in the above properties documentation, you can define the external storage location by selecting the related location, or by dragging and dropping it directly on the property.

To define an external storage, the prerequisite is to have the corresponding metadata existing in your workspace.

Example through a drag and drop:

| Refer to Getting Started with Microsoft Azure Blob Storage and Amazon S3 to learn how to create this metadata. |

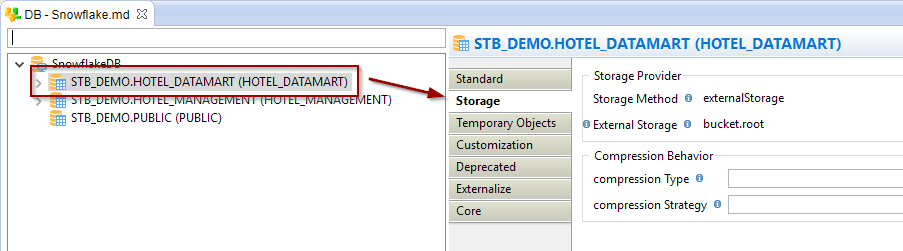

Override of storage configuration

The storage configuration that is defined on the server node can be overridden on schema, on table, and directly in mappings.

This offers the ability to have a common default behavior and override it when it is required for some specific developments or use cases.

Example of an override on a schema node:

Example of an override in a mapping:

Snowpipe

Snowpipe is a solution that allows ingesting data files from Snowflake stages directly into Snowflake tables without having to launch the warehouse. This allows reducing the cost of running an entire warehouse.

These tools use the parameters from the Snowpipe finger tab:

| Parameter | Description |

|---|---|

Account URL |

Account URL that should be used to make Snowpipe API calls. For example: https://acme-test_aws_us_east_2.snowflakecomputing.com |

Snowpipe User |

User who has the rights to send the API requests to ingestFiles. If no user is provided, the user for JDBC connection is used. |

Private Key Path |

Path to the private key generated. Refer to Generate the Private Key for more information. |

Private Key Password |

Password to decrypt the private key if it is encrypted. |

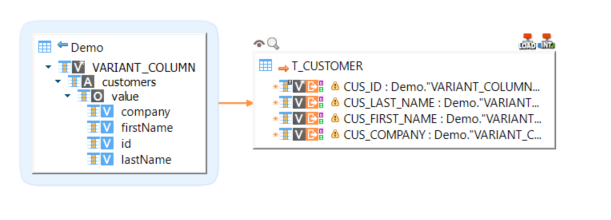

VARIANT Data Type

Overview

The VARIANT data type allows storing structured data such as JSON, AVRO, or XML.

Semarchy xDI supports reading and writing data inside columns with this data type.

Read data in a VARIANT

To read and manipulate structured data contained in a VARIANT column, use a Deserializer. See Deserialize Source Data.

Write data in a VARIANT

In a Mapping, map any source data to the target Snowflake VARIANT column. Depending on the source data type, you may need to use Snowflake’s conversion functions to convert the source data to a format matching Snowflake’s VARIANT data type. See Create Mappings.

Additionally, Semarchy xDI also provides a feature to seamlessly write JSON or XML data in a VARIANT column. To do this:

-

Create a JSON or XML Metadata:

-

Define the expected data structure. See Metadata and Reverse Engineering.

-

Right-click the JSON or XML node representing the data structure add choose New > Property Field.

-

Give a Name to the property field and select stringContent in the Property attribute. See JSON Property Fields and XML Property Fields

-

-

Create a mapping:

-

Add the JSON or XML data structure as the source.

-

Add the Snowflake table as the target.

-

Map the source stringContent property to the target Snowflake VARIANT column.

In addition to mapping the stringContent property to the VARIANT column, you can also map any of the JSON or XML fields to the other columns of the Snowflake table.

-

-

Run the Mapping.

The source data is written in the target Snowflake table.

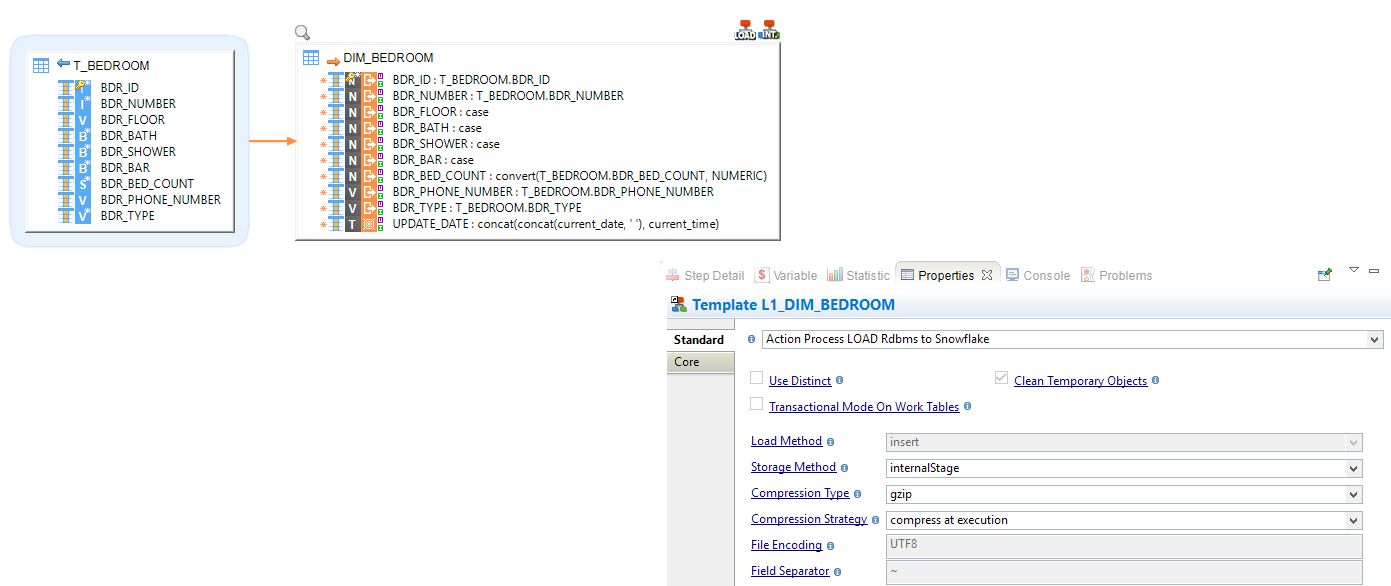

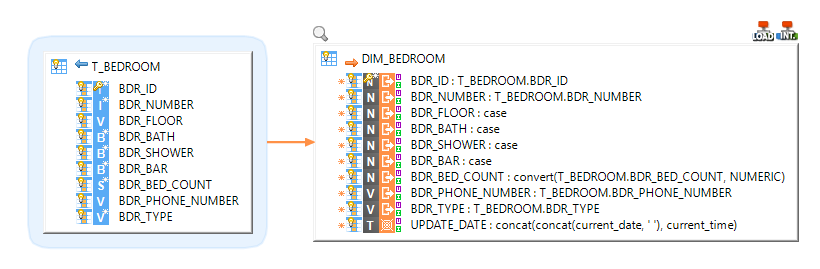

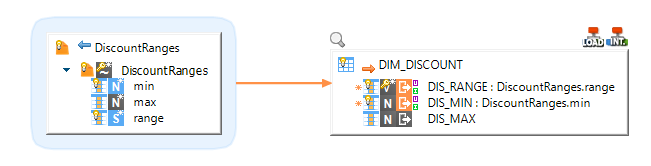

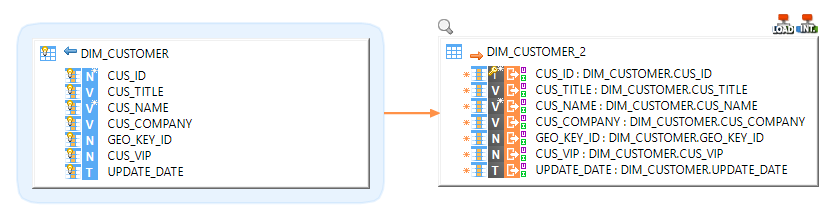

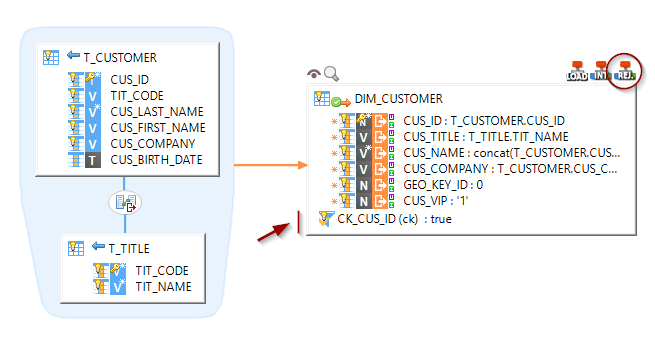

Create your first mappings

Your metadata is ready and your tables reverse engineered, you can now create your first mappings.

The Snowflake technology can be used like any other database in Semarchy xDI.

Drag and drop your sources and targets, map the columns as usual, and configure the templates accordingly to your requirements.

Loading data from a database into Snowflake

Loading data from a Delimited File into Snowflake

Loading data from Snowflake into another database

Performing Reject detection while loading a Snowflake Table

Loading structured data contained in a VARIANT field into another database

| The warnings on the target fields are displayed because of the hierarchical structure that is currently unknown by the expression parser. They can be ignored. |

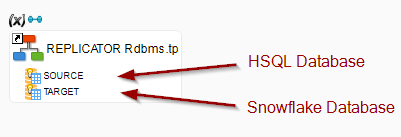

Replicating a source database into Snowflake

Sample Project

The Snowflake Component ships sample project(s) that contain various examples and use cases.

You can have a look at these projects to find samples and examples describing how to use it.

Refer to Install Components to learn how to import sample projects.