| This is documentation for Semarchy xDM 2023.2, which is no longer supported. For more information, see our Global Support and Maintenance Policy. |

Configure Semarchy xDM for high availability

Semarchy xDM can be configured to support enterprise-scale deployments and high availability.

Semarchy xDM supports the clustered deployment of the Semarchy xDM web application for high availability and failover. For example, a clustered deployment can be set up to support a large number of concurrent users performing data access and authoring operations.

Reference architecture for high availability

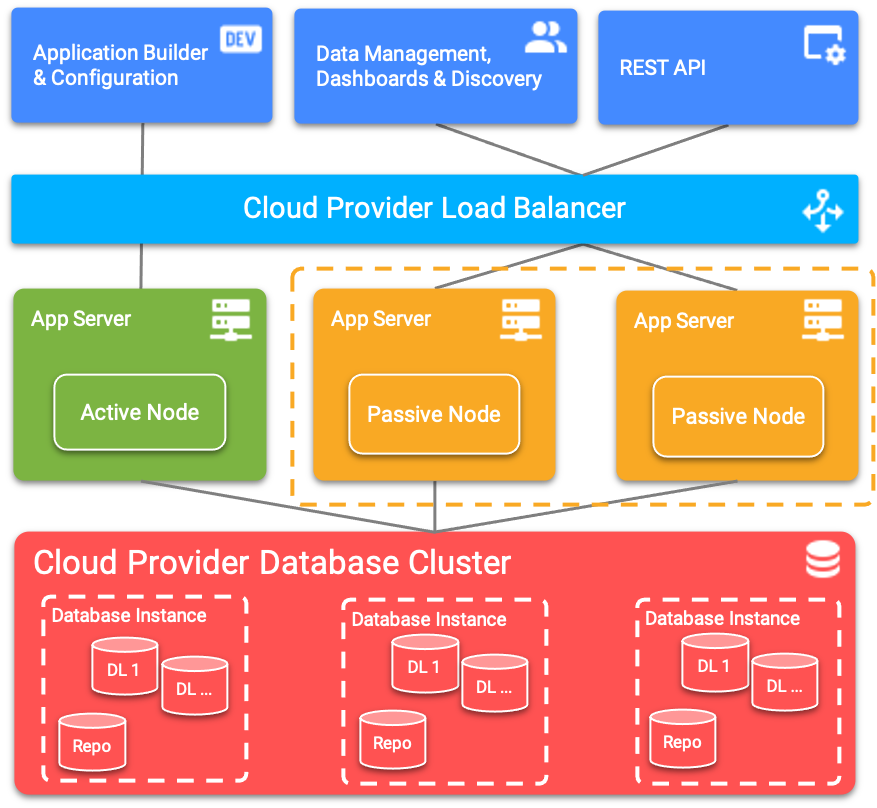

In a clustered deployment, only one node of the Semarchy xDM application manages and runs certification processes. This node is the active node. A set of clustered Semarchy xDM applications serves users accessing the user interfaces (i.e., the Application Builder, Dashboard Builder, xDM Discovery, and Configuration modules, or MDM applications) as well as applications accessing data locations via integration points. These are passive nodes. The back-end databases hosting the repository and data locations are deployed in a database cluster, and an HTTP load balancer is used as a front-end for users and applications.

The reference architecture for such a configuration is described in the following figure:

This architecture is composed of the following components:

- HTTP load balancer: this component manages the sessions coming from within the enterprise network or from the Internet (typically via a firewall). This component may be a dedicated hardware load balancer or a software solution, which distributes the incoming sessions across the passive nodes running in the JEE application server cluster.

- A JEE application server cluster and passive Semarchy xDM platforms: a Semarchy xDM application instance is deployed on each node of this cluster, which is scaled to manage the volume of incoming requests. In the case of a node failure, the other nodes remain available to serve the sessions. The Semarchy xDM applications deployed in the cluster are passive nodes. Such a node provides access to the Semarchy user interfaces and integration endpoints but is unable to manage batches and jobs.

- A JEE server and an active Semarchy xDM platform: this single JEE server hosts the only complete Semarchy xDM platform of the architecture. This active node is not accessible to users or applications. Its sole purpose is to poll the submitted batches and process jobs. The active node is not necessarily part of the same cluster containing the passive nodes.

- A database cluster: this component hosts the Semarchy xDM repository and the data locations databases and schemas in a clustered environment. Both active and passive nodes of the Semarchy xDM platform connect to this cluster using platform datasources.

In this architecture:

- Design-time or administrative operations are processed by the passive nodes in the JEE application server cluster.

- Operations performed on the data hubs (data access, steppers, or external loads) are processed by the passive nodes, but the resulting batches and jobs are always processed by the active node.

- Identity providers can be configured separately for each node.

| Exactly one active node must be configured. Multiple active nodes are not supported. |

| For passive nodes, built-in clustering capabilities offered by application servers, such as Apache Tomcat Clustering, are not supported. |

| The xDM Dashboard and xDM Discovery components operate the same way on both active and passive nodes (e.g., Discovery profiling processes can run even on passive nodes). |
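The split of responsibilities described above can be summarized in a few lines of Python. This is only an illustrative sketch: the operation labels and the `validate_topology` helper are hypothetical names chosen for this example and are not part of Semarchy xDM.

```python
from enum import Enum


class NodeRole(Enum):
    ACTIVE = "active"    # single node that polls batches and processes jobs
    PASSIVE = "passive"  # clustered nodes serving users and integration points


# Hypothetical operation labels for this sketch; they are not xDM identifiers.
HANDLED_BY = {
    "data_access": NodeRole.PASSIVE,
    "stepper": NodeRole.PASSIVE,
    "external_load": NodeRole.PASSIVE,
    "batch": NodeRole.ACTIVE,
    "job": NodeRole.ACTIVE,
}


def validate_topology(roles):
    """Raise ValueError unless the topology contains exactly one active node."""
    active_count = sum(1 for role in roles if role is NodeRole.ACTIVE)
    if active_count != 1:
        raise ValueError(f"expected exactly 1 active node, found {active_count}")


def handled_by(operation):
    """Return the node role that processes the given operation label."""
    return HANDLED_BY[operation]
```

A topology check of this kind could run in a deployment script before any node is started, so that a second active node is rejected early.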

Load balancing

Load balancing ensures optimal usage of the resources for a large number of users and applications simultaneously accessing Semarchy xDM.

Load balancing is performed at two levels:

- The HTTP load balancer distributes the incoming requests on the nodes of the JEE application server cluster.

- The JDBC datasource configuration distributes database access to the repository and the data locations on the database cluster nodes. In PostgreSQL and SQL Server environments, use the datasource configuration to enable load balancing on the multiple nodes of the cluster.
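For PostgreSQL, datasource-level load balancing is typically expressed in the JDBC URL itself. The helper below is a minimal sketch that builds a multi-host URL using the PostgreSQL JDBC driver's `targetServerType` and `loadBalanceHosts` connection parameters. The host names and database name in the usage example are hypothetical, and the right `targetServerType` value depends on whether every node of your database cluster accepts writes, so check the parameters against the driver documentation for your version.

```python
def postgresql_ha_url(hosts, database, load_balance=True, target="any"):
    """Build a multi-host PostgreSQL JDBC URL.

    hosts is a list of (host, port) pairs. The driver tries the listed
    hosts and, when loadBalanceHosts is true, picks among them at random.
    """
    host_list = ",".join(f"{host}:{port}" for host, port in hosts)
    balance = "true" if load_balance else "false"
    return (
        f"jdbc:postgresql://{host_list}/{database}"
        f"?targetServerType={target}&loadBalanceHosts={balance}"
    )


# Hypothetical cluster nodes and database name, for illustration only.
url = postgresql_ha_url(
    [("pg-node1", 5432), ("pg-node2", 5432)], "semarchy_repository"
)
print(url)
```

The resulting string would be used as the URL of the platform datasource in the application server configuration.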

Clustered mode

Semarchy xDM operates in clustered mode by default, allowing nodes to automatically retrieve configuration changes and model deployments.

The following changes are automatically applied to all nodes within the cluster:

- Model deployments in data locations, which automatically refresh MDM applications and the REST API.

- Changes to logging configuration.

- Deployment of new plugins or updates to existing plugins.

- Updates to custom translations.

| The engine, batch poller, purge schedule, and continuous load configurations are not synchronized across nodes by this clustering mechanism, because they run and can be configured only on the active node. |

Failure and recovery

In the reference architecture, failover is managed for both user and application sessions.

The following table describes the behavior and the required recovery actions in case of a failure in the various points of the architecture.

| Failure type | Behavior and required actions |
|---|---|
| Database failure | In the event of a database cluster node failure, other nodes can recover and process the incoming database requests. |
| Passive node failure | If one of the nodes of the JEE application server cluster fails, the only information not recovered is the content of the unsaved editors for the user sessions. All the other content is saved in the repository or the data locations. Transactions attached to steppers, for example, are saved in the data locations and not lost. |
| Active node failure | The purpose of the active node is to process batches and jobs. The active node must be restarted automatically or manually to fully recover from a failure. When it is restarted, the platform resumes its normal course of activity with no user action required. |
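The automatic restart of the active node can be delegated to a small watchdog. The following is a minimal sketch under stated assumptions: `is_healthy` and `restart` are hypothetical callables that you would supply (for example, an HTTP probe and a service restart command), and the threshold of three consecutive failures is an arbitrary illustrative value. Nothing here relies on a specific Semarchy xDM health endpoint.

```python
import time


def watch_active_node(is_healthy, restart, max_checks, interval_seconds,
                      failures_before_restart=3):
    """Probe the active node and restart it after repeated failures.

    is_healthy: callable returning True when the node responds (assumed).
    restart: callable that restarts the node (assumed).
    Returns the number of restarts triggered during max_checks probes.
    """
    consecutive_failures = 0
    restarts = 0
    for _ in range(max_checks):
        if is_healthy():
            consecutive_failures = 0
        else:
            consecutive_failures += 1
            if consecutive_failures >= failures_before_restart:
                restart()
                restarts += 1
                consecutive_failures = 0
        time.sleep(interval_seconds)
    return restarts
```

Requiring several consecutive failures before restarting avoids reacting to a single slow response. Because the platform resumes its normal activity once the node is back, the watchdog needs no further recovery logic.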

Cloud-based disaster recovery planning recommendations

To ensure minimal downtime and data loss in the event of an unforeseen disaster, cloud architects should consider the following recommendations:


Configure Semarchy xDM for high availability

Active vs. passive nodes

The Semarchy xDM server comes in two flavors corresponding to two web application archive (WAR) files:

- The active node (semarchy.war) includes the active application to deploy on the single active node. This WAR file includes the batch poller and the engine and can trigger and process the submitted batches.

- The passive node (semarchy-passive.war) includes the passive application to deploy on all the passive nodes of the cluster. This WAR file does not include the batch poller and engine services. It is unable to trigger or process submitted batches.

Both these files are in the semarchy-mdm-install-<version tag>.zip archive file, in the mdm-server folder.

Install and configure Semarchy xDM

The overall installation process for a high-availability configuration is similar to the general installation process:

- Create the repository and data location databases and schemas in the database cluster.

- Configure the application server security for both the cluster and the active node.

- Configure the datasources for the nodes and cluster.

  For more information about configuring RAC JDBC datasources, see your Oracle database and application server documentation. If you are using PostgreSQL or SQL Server, refer to the JDBC driver documentation to configure the datasource for high availability and load balancing.

- Deploy the applications:

  - Deploy the active node.

    The architecture only supports one active node and there is no need to load balance it. The active node can be deployed in the semarchy context using the semarchy.war file.

    The active node is available via the https://active-host:active-host-port/semarchy/ URL.

  - Deploy multiple passive nodes behind the load balancer.

    You can deploy the passive nodes using either the same context as the active node or a different context.

    - Deploying with the same context: when creating a passive node using semarchy-passive.war, rename this file to semarchy.war before deployment. This keeps the same deployment name (semarchy) for the active and the passive nodes and usually simplifies load balancing configuration.

      In this configuration, the passive nodes are available behind the load balancer via the https://load-balancer-host:load-balancer-port/semarchy/ URL.

    - Deploying with a different context: when creating a passive node using semarchy-passive.war, keep the semarchy-passive.war file name.

      In this configuration, the passive nodes are available behind the load balancer via the https://load-balancer-host:load-balancer-port/semarchy-passive/ URL.

| When deploying multiple nodes, make sure to use the same startup configuration for all the nodes as they all connect to the same repository. |
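The naming rules for the deployed WAR files and the resulting URLs can be captured in a short helper. This is an illustrative sketch: the function names are hypothetical, and it assumes that the application server derives the context path from the deployed WAR file name, which is the usual convention but should be verified for your server.

```python
def deployment(role, same_context=True):
    """Return (source WAR, deployed WAR name, context path) for a node role."""
    if role == "active":
        return ("semarchy.war", "semarchy.war", "/semarchy")
    if role == "passive":
        if same_context:
            # Rename the passive WAR so both node types share one context.
            return ("semarchy-passive.war", "semarchy.war", "/semarchy")
        return ("semarchy-passive.war", "semarchy-passive.war", "/semarchy-passive")
    raise ValueError(f"unknown role: {role!r}")


def node_url(host, port, context):
    """Build the HTTPS URL under which a deployed node is reachable."""
    return f"https://{host}:{port}{context}/"


# Placeholder host name taken from the steps above; the port is an example.
_, _, context = deployment("passive", same_context=False)
print(node_url("load-balancer-host", 443, context))
```

Keeping this mapping in one place makes it harder to deploy a passive WAR under the wrong name when nodes are provisioned by script.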

Configure the HTTP load balancer

Semarchy xDM requires that you configure HTTP load balancing with sticky sessions (also known as session persistence or session affinity). In this mode, requests from existing sessions are consistently routed to the same server. This is mandatory for the Semarchy xDM user interfaces, but not for integration points. For example, for Amazon Web Services (AWS) deployments, sticky sessions are configured in the load balancer.
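To make the sticky-session requirement concrete, the following Python sketch simulates the routing decision a load balancer makes. It is a toy model, not a load balancer configuration: the `StickyBalancer` class and the node names are hypothetical, and real products usually implement affinity with a cookie rather than an in-memory table.

```python
import itertools


class StickyBalancer:
    """Round-robin balancer that pins each session to its first node."""

    def __init__(self, nodes):
        self._round_robin = itertools.cycle(list(nodes))
        self._affinity = {}  # session id -> node chosen for that session

    def route(self, session_id=None):
        """Return the node that should receive the request."""
        if session_id is None:
            # Stateless request (e.g., an integration point): any node works.
            return next(self._round_robin)
        if session_id not in self._affinity:
            self._affinity[session_id] = next(self._round_robin)
        return self._affinity[session_id]


balancer = StickyBalancer(["passive-1", "passive-2"])
first = balancer.route("user-session-a")
balancer.route("user-session-b")
# A later request from the same session reaches the same passive node.
print(balancer.route("user-session-a") == first)
```

Requests without a session identifier are simply distributed in turn, which matches the statement that affinity is mandatory for the user interfaces but not for integration points.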