| This is documentation for Semarchy xDI 2023.1, which is no longer supported. For more information, see our Global Support and Maintenance Policy. |

Getting Started with Google BigQuery

Prerequisites

Google Cloud Project Metadata

You must create a Google Cloud Project Metadata, which contains the account and credentials to connect to Google BigQuery.

This is mandatory: the Google BigQuery Metadata uses it to obtain the account and credentials.

Google Cloud Storage Metadata

Google Cloud Storage is used as a staging location for the temporary files that optimize data loading into Google BigQuery.

It is recommended to create a Google Cloud Storage Metadata to define this temporary location.

Connect to your data

Create the Metadata

To create a Google BigQuery Metadata, launch the Metadata creation wizard, select the Google BigQuery Metadata in the list and follow the wizard.

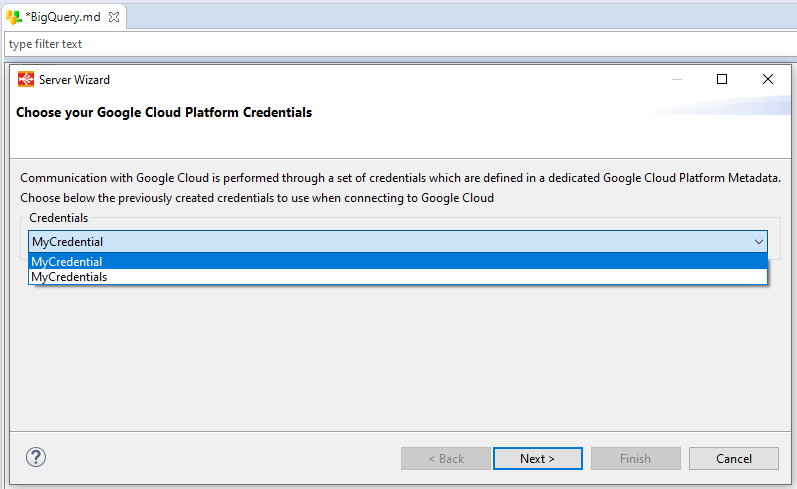

The wizard will ask you to choose the credentials to use, with a list of all credentials defined in your workspace. If the list is empty, make sure the prerequisites have been set up correctly.

Select the credentials and click Next.

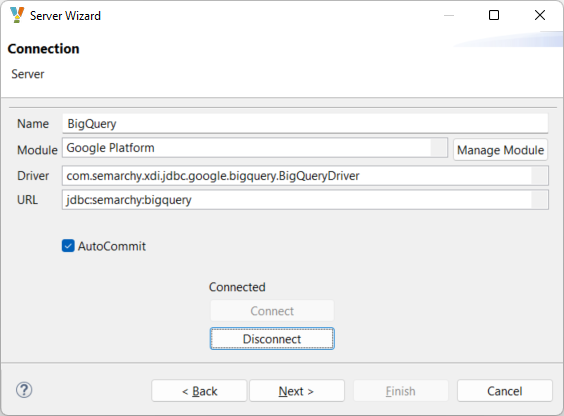

You can customize the URL with optional parameters. Afterwards, click Connect.

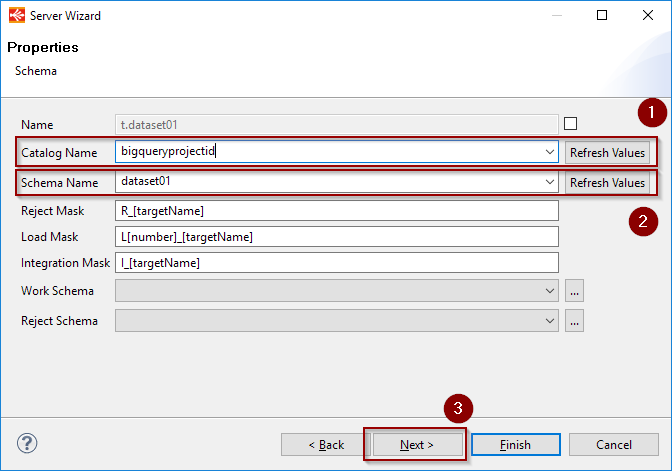

On the next page, click Refresh Values on the Catalog Name, then select the Google Project from the list.

Click Refresh Values on the Schema Name, then select the Google BigQuery dataset to reverse-engineer from the list.

Click Next, then click Refresh to list the tables and select the ones to reverse-engineer.

Finally, click the Finish button. The tables will be reverse-engineered in the Metadata.

JDBC parameters

The JDBC URL to Google BigQuery supports optional parameters in standard URL query string syntax, like in the following example:

jdbc:semarchy:bigquery?backendErrorRetryNumber=2&backendErrorRetryDelay=1000

The following parameters are supported:

| Parameter | Description |
|---|---|
| backendErrorRetryNumber | The number of times to retry a query when a backend error occurs. An undefined parameter is equivalent to no retries. |
| backendErrorRetryDelay | Delay in milliseconds to wait between each retry. |
| internalErrorRetryNumber | The number of times to retry a query when an internal error occurs. An undefined parameter is equivalent to no retries. |
| internalErrorRetryDelay | Delay in milliseconds to wait between each retry. |
| tableUnavailableErrorRetryNumber | The number of times to retry a query when a table unavailable error occurs. An undefined parameter is equivalent to no retries. |
| TableUnavailableErrorRetryDelay | Delay in milliseconds to wait between each retry. |
| quotaExceededErrorRetryNumber | The number of times to retry a query when a quota exceeded error occurs. An undefined parameter is equivalent to no retries. |
| quotaExceededErrorRetryDelay | Delay in milliseconds to wait between each retry. |
| jobsLocation | The location used for job operations. |
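Each retry number and retry delay pair above describes a simple retry policy. The following Python sketch is only a conceptual illustration of that policy, not the driver's implementation: `BackendError`, `run_with_retries`, and `flaky_query` are hypothetical names introduced for this example.

```python
import time


class BackendError(Exception):
    """Hypothetical stand-in for a retryable error (not a real driver class)."""


def run_with_retries(operation, retry_number=0, retry_delay_ms=0, sleep=time.sleep):
    """Call operation(); on BackendError, retry up to retry_number times.

    retry_number=0 mirrors an undefined parameter: no retries at all.
    """
    attempt = 0
    while True:
        try:
            return operation()
        except BackendError:
            if attempt >= retry_number:
                raise  # retries exhausted, or none configured
            attempt += 1
            sleep(retry_delay_ms / 1000.0)  # delay is given in milliseconds


# Usage: an operation that fails twice, then succeeds.
calls = {"count": 0}


def flaky_query():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise BackendError("transient failure")
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = run_with_retries(
    flaky_query, retry_number=2, retry_delay_ms=1000, sleep=delays.append
)
```

With a retry number of 2 and a delay of 1000, the operation is attempted at most three times, with a one-second wait before each retry.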

You can also add the parameters as standalone properties under the top-level BigQuery metadata node.
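If the URL is generated by a script, the query string syntax used in this section can be assembled programmatically. The following is a minimal Python sketch; the helper name `build_jdbc_url` is hypothetical, and the use of percent-encoding for special characters is an assumption about what the driver accepts.

```python
from urllib.parse import urlencode

# Base URL taken from the example in this section.
BASE_URL = "jdbc:semarchy:bigquery"


def build_jdbc_url(params=None):
    """Append optional parameters to the base URL as a query string."""
    if not params:
        return BASE_URL
    # urlencode joins key=value pairs with '&' and percent-encodes
    # special characters (assumed to be acceptable to the driver).
    return f"{BASE_URL}?{urlencode(params)}"


url = build_jdbc_url({
    "backendErrorRetryNumber": 2,
    "backendErrorRetryDelay": 1000,
})
print(url)
# prints: jdbc:semarchy:bigquery?backendErrorRetryNumber=2&backendErrorRetryDelay=1000
```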

Define the Cloud Storage link

For performance purposes, Semarchy xDI uses Cloud Storage to optimize data loading into Google BigQuery.

Drag-and-drop or select your previously created Google Cloud Storage Metadata inside the related property.

Choose a bucket or a folder, depending on your preferred organization.

| This bucket/folder will be used as the temporary location when necessary, to optimize data loading into Google BigQuery. |

Create your first Mappings

Below are some examples of Google BigQuery usage in Mappings and Processes.

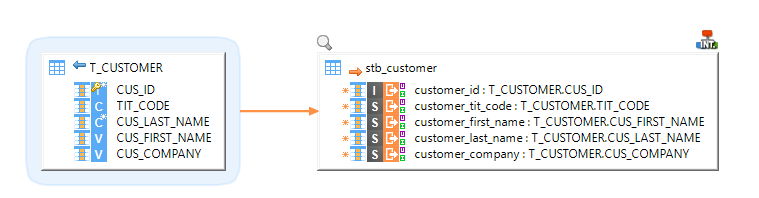

Example of Mapping loading data from an HSQL database to a Google BigQuery table

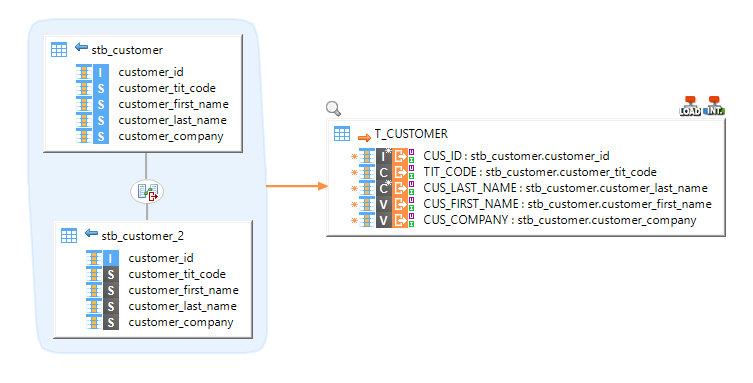

Example of Mapping loading data from multiple BigQuery tables with joins to an HSQL table

Cloud Storage Mode

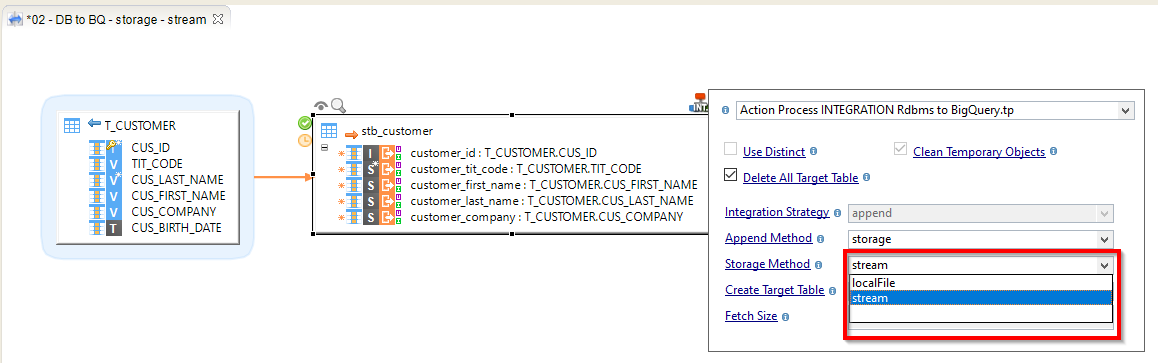

When integrating data into Google BigQuery, data may pass through Google Cloud Storage for performance purposes.

Depending on factors such as the amount of data sent and the network quality, Templates offer different methods to improve performance:

- stream: Data is streamed directly to the Google Storage Bucket.

- localfile: Data is first exported to a local temporary file, which is then sent to the defined Google Storage Bucket. This method should be preferred for large sets of data.
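The difference between the two methods can be sketched in Python. This is a conceptual illustration only and does not reflect how the Templates are implemented: `send_stream` and `send_localfile` are hypothetical names, and an in-memory `io.BytesIO` object stands in for the Google Storage Bucket rather than a real Cloud Storage client.

```python
import io
import os
import tempfile


def send_stream(rows, bucket):
    """'stream' style: write each row straight to the destination."""
    for row in rows:
        bucket.write(row.encode("utf-8"))


def send_localfile(rows, bucket):
    """'localfile' style: stage rows in a local temporary file, then send it."""
    with tempfile.NamedTemporaryFile(
        "w", suffix=".csv", delete=False, encoding="utf-8", newline=""
    ) as tmp:
        tmp.writelines(rows)
        path = tmp.name
    try:
        # The whole staged file is sent in a single transfer.
        with open(path, "rb") as staged_file:
            bucket.write(staged_file.read())
    finally:
        os.remove(path)  # clean up the temporary file


rows = ["1,alice\n", "2,bob\n"]
streamed, staged = io.BytesIO(), io.BytesIO()  # stand-ins for the bucket
send_stream(rows, streamed)
send_localfile(rows, staged)
```

Both functions deliver the same bytes. They differ only in whether the data is staged on local disk before being sent.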

The storage method is defined on the Template:

Sample Project

The Google BigQuery Component ships sample project(s) that contain various examples and use cases.

You can browse these projects for examples of how to use the Component.

Refer to Install Components to learn how to import sample projects.