| This is documentation for Semarchy xDI 2023.1, which is no longer supported. For more information, see our Global Support and Maintenance Policy. |

Getting Started with Sqoop

Overview

This guide explains how to start working with Sqoop in Semarchy xDI.

Sqoop is a tool used to transfer data between HDFS and relational databases having a JDBC driver.

Semarchy xDI provides tools for both Sqoop 1 and Sqoop 2, which differ in usage.

Prerequisites

Sqoop uses JDBC drivers to transfer data.

Therefore, the JDBC driver for the database technology you want to import data from or export data to must be installed on the Sqoop server.
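As a quick check of this prerequisite, the sketch below lists the JAR files in a directory whose names contain a given fragment. It assumes the drivers are deployed as `.jar` files in a single directory (for Sqoop 1 this is commonly the `lib` directory under the Sqoop installation). The function name, the directory path, and the `mysql` fragment are illustrative and are not part of Semarchy xDI.

```python
from pathlib import Path


def find_driver_jars(lib_dir, name_fragment):
    """Return sorted names of .jar files in lib_dir whose name contains name_fragment."""
    fragment = name_fragment.lower()
    directory = Path(lib_dir)
    if not directory.is_dir():
        return []
    return sorted(
        p.name
        for p in directory.iterdir()
        if p.is_file() and p.suffix.lower() == ".jar" and fragment in p.name.lower()
    )


if __name__ == "__main__":
    # Hypothetical location and driver name; adjust both to your Sqoop server.
    jars = find_driver_jars("/usr/lib/sqoop/lib", "mysql")
    print(jars if jars else "No matching JDBC driver JAR found")
```

Run it on the Sqoop server itself, since that is where the driver must be installed.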

Sqoop Metadata

Create the Metadata

First, create the Sqoop Metadata as usual by selecting the Sqoop technology in the Metadata Creation Wizard.

Choose a name for this Metadata and go to the next step.

Configure the Metadata

Overview

Once the Sqoop Metadata is created, you can configure the server properties, which define how to work with Sqoop.

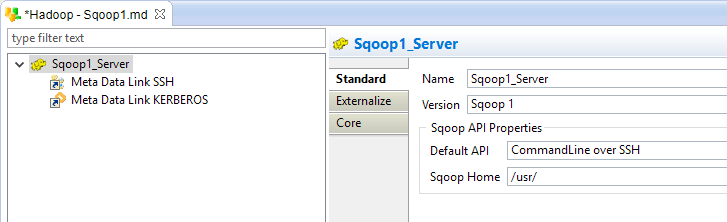

Below is an example of a fully configured Sqoop Metadata.

The available properties depend on the selected Sqoop version.

Common Properties

The following common properties are available:

| Property | Description |
| --- | --- |
| Name | Logical label (alias) for the server |
| Version | Sqoop version to be used |

Sqoop 1 Properties

| Property | Description | Examples |
| --- | --- | --- |
| Default API | The API to use by default with Sqoop 1. | CommandLine |
| Sqoop Home | Directory where the Sqoop commands are installed. | If the sqoop-import and sqoop-export utilities are under /usr/bin/, set this property to that directory. |

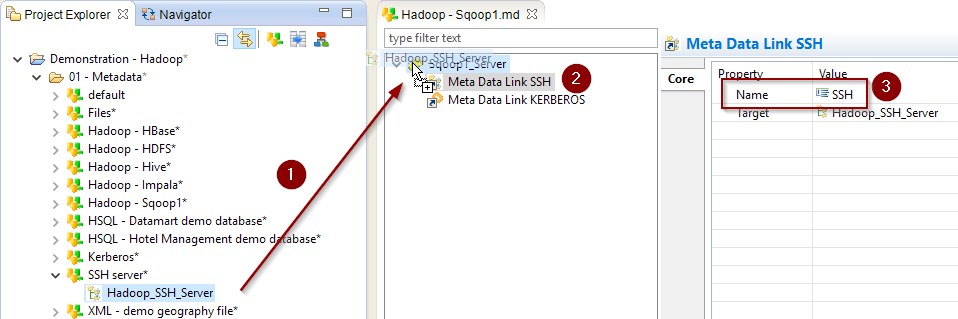

If you are using the CommandLine over SSH API, you must drag and drop an SSH Metadata Link containing the SSH connection information into the Sqoop Metadata.

Rename it to 'SSH'.
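To sanity-check a candidate Sqoop Home value, the sketch below reports which of the expected Sqoop 1 utilities are missing from a directory. It only inspects the local file system, so it applies when Sqoop is installed on the machine where the script runs, not to the CommandLine over SSH case. The function name is hypothetical, and the list of commands is taken from the example in the table above.

```python
import os


def missing_sqoop_commands(sqoop_home, commands=("sqoop-import", "sqoop-export")):
    """Return the commands that are not present as executable files in sqoop_home."""
    missing = []
    for command in commands:
        path = os.path.join(sqoop_home, command)
        if not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(command)
    return missing


if __name__ == "__main__":
    # /usr/bin/ is the example directory used in the Sqoop Home description.
    print(missing_sqoop_commands("/usr/bin/"))
```

An empty list means both utilities were found and are executable.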

Sqoop 2 Properties

| Property | Description | Examples |
| --- | --- | --- |
| Default API | The default API to use with Sqoop 2. REST: the Sqoop 2 REST API is used to perform the operations. | REST |
| URL | Sqoop 2 REST API base URL | |
| Hadoop Configuration Directory | Path to a directory containing Hadoop configuration files on the remote server, such as core-site.xml, hdfs-site.xml, … | /home/cloudera/stambia/conf |

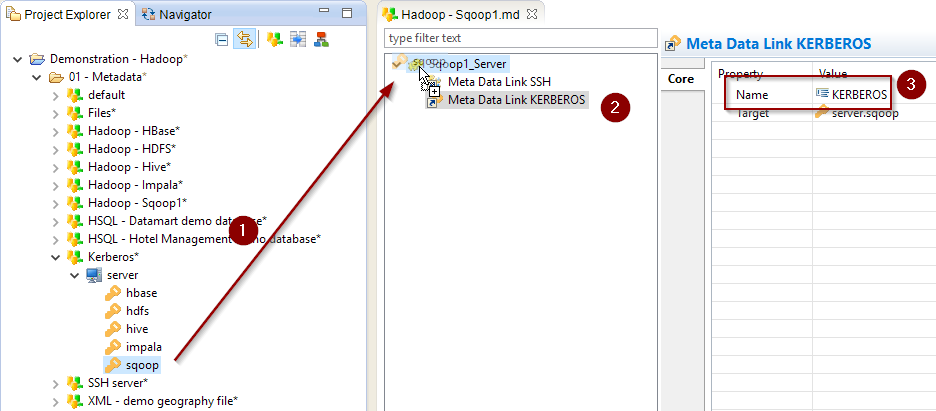
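The URL property is a base URL to which resource paths are appended. The sketch below shows one way to join a base URL and a resource path so that stray slashes do not matter. The helper name and the `localhost` URL are hypothetical. Port 12000, the `/sqoop/` context path, and the `version` resource are assumed here to be the usual Sqoop 2 server defaults; verify them against your own installation.

```python
from urllib.parse import urljoin


def sqoop2_endpoint(base_url, resource):
    """Join a Sqoop 2 REST base URL and a resource path, tolerating stray slashes."""
    return urljoin(base_url.rstrip("/") + "/", resource.lstrip("/"))


if __name__ == "__main__":
    # Hypothetical base URL; replace it with the value of the URL property.
    print(sqoop2_endpoint("http://localhost:12000/sqoop/", "version"))
```

Opening the resulting address in a browser or HTTP client is a simple way to confirm that the base URL is reachable before using it in the Metadata.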

Configure the Kerberos Security (optional)

When working with Kerberos-secured Hadoop clusters, connections are protected, so you need to provide Semarchy xDI with the credentials and other information required to establish the Kerberos connection.

A Kerberos Metadata is available to specify everything required.

- Create a new Kerberos Metadata (or use an existing one).
- In it, define the Kerberos Principal to use for Sqoop.
- Drag and drop it into the Sqoop Metadata.
- Rename the Metadata Link to 'KERBEROS'.

Below is an example of these steps:

| Kerberos is only supported for the Sqoop 1 version |

| Refer to Getting Started With Kerberos for more information. |

Sqoop Usage

Sqoop 1

Semarchy xDI provides two Process Tools to work with Sqoop 1:

| Process Tool | Description |
| --- | --- |
| TOOL Sqoop Export | Export data from HDFS to any database having a JDBC driver. |
| TOOL Sqoop Import | Import data from any database having a JDBC driver to HDFS. |

To use these Process Tools:

- Create a Process.
- From the Process Palette, add the desired Sqoop Process Tool.
- Drag and drop the Sqoop Metadata onto it.
- Drag and drop onto it the HDFS Folder Metadata to use as the import target or export source.
- Drag and drop onto it the Database Table Metadata to use as the import source or export target.

| For further information, consult the Process Tools parameters description. |
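For orientation, the sketch below builds the kind of Sqoop 1 command line that an import or export corresponds to, using the standard `sqoop import` and `sqoop export` options (`--connect`, `--table`, `--target-dir`, `--export-dir`, `--username`). It is not the command that the Semarchy xDI Process Tools generate, which this page does not show. The function names and connection details are hypothetical.

```python
import shlex


def sqoop_import_args(jdbc_url, table, target_dir, username=None):
    """Build the argument list for a Sqoop 1 import (database table to HDFS)."""
    args = ["sqoop", "import", "--connect", jdbc_url, "--table", table,
            "--target-dir", target_dir]
    if username:
        args += ["--username", username]
    return args


def sqoop_export_args(jdbc_url, table, export_dir, username=None):
    """Build the argument list for a Sqoop 1 export (HDFS to database table)."""
    args = ["sqoop", "export", "--connect", jdbc_url, "--table", table,
            "--export-dir", export_dir]
    if username:
        args += ["--username", username]
    return args


if __name__ == "__main__":
    # Hypothetical connection details, for illustration only.
    cmd = sqoop_import_args("jdbc:mysql://dbhost/sales", "orders", "/data/orders", "etl")
    print(shlex.join(cmd))
```

The three inputs mirror what is dropped onto the Process Tool: the Sqoop Metadata determines how the command is run, the Database Table Metadata supplies the JDBC URL and table, and the HDFS Folder Metadata supplies the directory.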

Sqoop 2

Sqoop 2 has the same goal as Sqoop 1 but its usage differs.

Please refer to the Sqoop 2 documentation to understand its concepts and usage.

Semarchy xDI provides various Process Tools to work with Sqoop 2:

| Process Tool | Description |
| --- | --- |
| TOOL Sqoop2 Describe Connectors | Retrieve information about the Sqoop 2 driver and the available connectors. |
| TOOL Sqoop2 Create Link | Create a Sqoop 2 Link. |
| TOOL Sqoop2 Monitor Link | Monitor Sqoop 2 Links (enable, disable, delete). |
| TOOL Sqoop2 Create Job | Create a Sqoop 2 Job. |
| TOOL Sqoop2 Set Job FROM HDFS | Generate the HDFS From part of a Job. |
| TOOL Sqoop2 Set Job FROM JDBC | Generate the JDBC From part of a Job. |
| TOOL Sqoop2 Set Job TO HDFS | Generate the HDFS To part of a Job. |
| TOOL Sqoop2 Set Job TO JDBC | Generate the JDBC To part of a Job. |
| TOOL Sqoop2 Monitor Job | Monitor Sqoop 2 Jobs (enable, disable, delete, start, stop, status). |

To use these Process Tools:

- Create a Process.
- From the Process Palette, add the desired Sqoop Process Tool.
- Define its parameters and Metadata Links according to your requirements.

| For further information, consult the Process Tools parameters description. |

Sample Project

The Hadoop Component ships with sample projects that contain various examples and use cases.

Browse these projects for working examples of how to use the component.

Refer to Install Components to learn how to import sample projects.